How We Untangled the 'Christmas Lights' of a Healthcare Data Migration

Aging infrastructure often holds back teams from delivering faster insights and smoother operations. Ryan Hawkins, a Senior Analytics Engineer at the Children's Hospital of Philadelphia, tackled the daunting task of migrating a 15-year-old on-prem Netezza system to Snowflake.

Ryan Hawkins was part of the team behind one of the most daunting migrations: transitioning a 15-year-old Netezza system to Snowflake. "We’d been on a Netezza system for over 15 years," he shares. "And as you can imagine, anybody that's in the department now didn't work on that at the time. So it's a black box, very limited in what we could do."

From primary key transformations to overhauling naming conventions, Ryan’s team tackled one of the most daunting migrations: a healthcare organization’s legacy data infrastructure to Snowflake. This wasn’t just migration; it was modernization at scale. Over 18 months, Ryan’s team worked methodically, solving unexpected challenges, building tools like their in-house custom validator, and learning to scale as they went.

Untangling Christmas lights and tracing spiritual lineages

At the heart of the challenge was the Netezza system’s tangled dependencies and ambiguous naming conventions. Rebuilding the core layer—facts and dimensions—meant tackling two major issues: reliance on brittle primary and foreign keys and ambiguous column names.

Datafold: You mentioned primary keys were one of the biggest challenges. Why was that such a sticking point?

Ryan: We knew that we were too reliant on the old primary and foreign keys. And, like, once you take it out, it's kind of a string of Christmas lights. It's just a big ball, and you keep trying to untangle and untangle, and it's not till the very last knot that it all comes out into line, and that's exactly how it went.

Pulling on one key would have caused issues in other places. The primary keys were connected to everything. It wasn’t just that they were identifiers—they were the glue holding the entire system together. And when the team started modernizing (e.g. replacing them with hash-based surrogate keys) every change rippled downstream.

Datafold: What did the ripple effect look like in practice?

Ryan: Informatica, which is the ETL software we originally used, had an incremental primary key system. It just kept adding up. Whereas in dbt, we’re using, like, the hash value, surrogate key function that dbt comes with. So the primary keys and the foreign keys were the biggest pain point for us because we had to swap out all the references from the old value to the new value, and the data types were different.

We also wanted to update the naming convention. The old way of doing it was a lot of abbreviations, a lot of ambiguous column names. We wanted to, like, spell out column names, try to make them more distinct and noticeable. Obviously, when you change a column name, you’ve got to make sure you change it all downstream. That was the real tricky part–moving that over and then making sure everything points to the new fact and dim tables and gets off the old Informatica generated ones.

Datafold: That must have been a logistical nightmare. How did you keep track of all the changes?

Alex: What we actually used, I'm ashamed to say, is Microsoft Excel 365 for probably the whole project to keep track of what we're moving over to Snowflake. For example, fin_class would be spelled out to financial_class, or pat_key would become patient_key.

And we didn't have a programmatic way to match these things. We were calling it the 'spiritual lineage,' kind of like how a video game is like the spiritual successor to another video game. We had to track this lineage at the column level so that when someone saw something like date_rec_time, they’d know it’s now date_record_time.

Usually we tried to keep the names very similar, but they're obviously different enough that someone coming in cold wouldn’t recognize them. So we had to track that in Excel as well because there was just no way to link the two tables. From a metadata standpoint, they were completely different tables. This also allowed us to start working on the most important part of the process, migrating the tables, while still keeping up with our documentation. Later on we were able to leverage this data and pivot to a more elegant solution to track progress.

Handling incremental logic with automation

Another significant hurdle came in handling incremental models—tables that updated in small batches rather than full refreshes. These incremental models, while efficient, were tightly coupled to the legacy primary/foreign key system. This created problems whenever data types or keys changed.

Ryan: Originally, we thought we'd make the core layer incremental by default. But in dbt, you have to do a full refresh command anytime there's any significant changes of data or a column.

So we realized at the beginning, especially as we scaled up from four to 16 data engineers, that we needed to make changes to tables that we had already marked as completed. For example, the foreign key might not be generated correctly, or a data type needed to be updated. These required us to do full refreshes on all these tables, and then we had to track down what other tables needed a full refresh because we may have regressed the data inadvertently when we made this change earlier. In our old, dying software system, a lot of these incremental tables were huge. Some of these tables would take hours to reload, and we really didn't want to just hit 'full refresh' every time.

So it was a really tricky situation to track what needed refreshing and to batch those refreshes efficiently.

.avif)

Datafold: So how did you manage to stay on top of all these dependencies?

Ryan: We ended up using GitHub Actions to identify if a pull request (PR) impacted incremental models. GitHub Actions really saved the day for us. You can imagine, if 16 people all pushing PRs to the repo all day, it got overwhelming.

Now, I'm not the best GitHub Actions guru, so it's kind of a magic to me how it works. Eventually, when we had it in its final version and wondered why we didn’t do this sooner. We kept adding steps, automating more and more to take the review process off the reviewers’ plates.

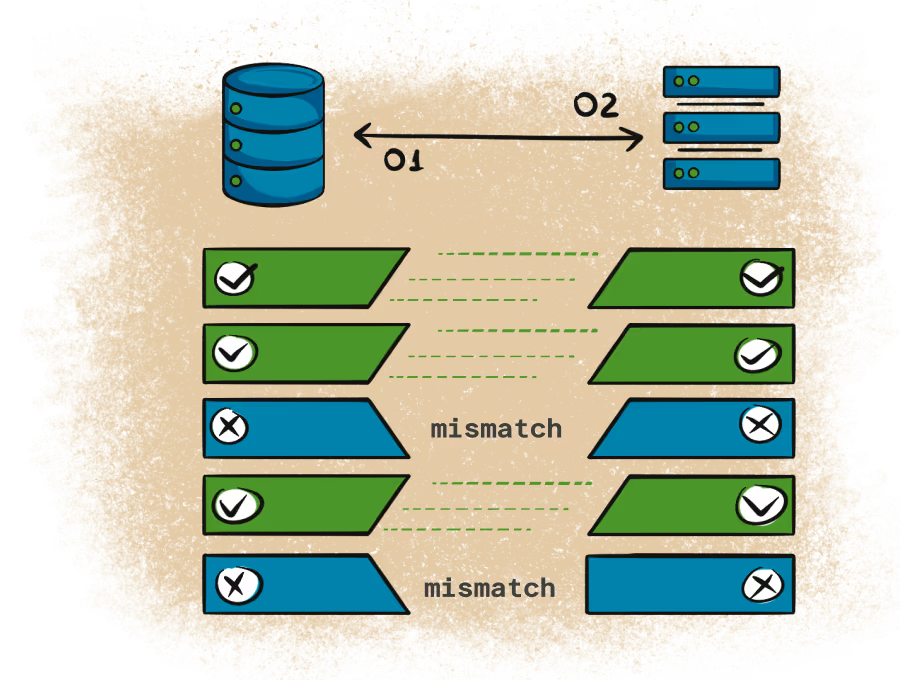

For example, we would store all of our validation results in Snowflake. It had that simply recorded whether it passed–yes or no. The CI would check that table to see if the last run of that validation had passed. If it passed, you’d get a green checkmark in GitHub Actions. If not, it would fail and you would either have to fix it or explain why it’s different but acceptable, and add that explanation to a lookup file that became our audit trail. One example was whitespace handling—if a patient's name had additional spaces in the old system but was corrected in Snowflake, we’d document it. Once it was added to the lookup file, the validation would automatically pass going forward.

Datafold: What else were you able to automate with GitHub Actions?

Ryan: It would parse the dbt manifest and find changes in incremental models to make sure that tables were placed in the correct schemas and that critical models didn’t change to non-critical and vice versa. This was new for us as Netezza didn’t use schemas, so we really weren’t aware of how complicated that would get.

Once we knew these 'gotchas' that would break things at 2 a.m. and alert someone on call, we learnt our lesson to try and prevent them.

Datafold: That’s really cool to hear, I was not expecting to hear that something so simple would be so fundamental to the migration process.

Ryan: Yeah, it really was and it's our philosophy where we're big on creating systems and not relying on individuals. So if we were still pushing bugs every week, we would get a robot to help us not do that.

Datafold: I can see why, especially for a migration that required so much coordination between the 16 people.

Ryan: Yeah, and you can only look at and review so much code in a day before it all starts to blur together.

Validation with extra precision

Ensuring data integrity between Netezza and Snowflake was non-negotiable, particularly with complex primary and foreign key changes. Initially, the team did not have a reliable validation method in place and operated under the assumption that the thousands of tables moved to the data lake were mostly correct.

Then, a plucky data engineer decided to build their own tool.

Datafold: How did this work?

Ryan: When we moved over to Snowflake, we built an in-house validation tool, inspired by Datafold and other industry tools. For smaller tables, it does a full validation, row-by-row. For larger tables, it performs an aggregated validation.

This came way later than we would have liked to have it. But we were trying to create it as fast as possible. Originally, it was used in the data lake, which was necessary, because we just moved over 1000s of tables and assumed they were mostly right but we didn't know for sure. It was later down the line, after we had the tool up and running, that we were able to find issues that we weren't able to find previously because we couldn't validate across two separate database environments.

Built with Python, Airflow, and GitHub, the custom validator became an essential part of the migration process, offering a level of precision and confidence that manual checks simply couldn’t achieve. Results were stored in Snowflake, creating an audit trail for discrepancies.

Datafold: Was there a reason why the team decided to create your own in-house testing tool? What was the process like?

Ryan: I think we thought we could design it the way we wanted to and, obviously, not have to pay for it.

It’s been an iterative process. It started with the data lake, and then it moved on to validating the core and reporting layers. And actually, after that, we're going to use it for dashboards and other stuff.

We're constantly iterating on the needs for it, and we felt that we had enough resources to do this and keep it maintained.

Scale earlier

Initially, Ryan’s team started small—just a handful of engineers testing workflows and building out Snowflake. But as they approached deadlines, the team realized they could have scaled sooner.

Datafold: What is one piece of advice you would give to someone embarking on their first data migration?

Ryan: Start small and figure out what works and what doesn't. And determine how you're going to scale, and scale early. I feel like we did not scale early enough, and that kind of put us behind the eight ball.

Figure out how best to scale with the knowledge that things will still come up that you need to fix, or issues will still need to be looked into. There is no perfect time to scale. It's always going to be kind of like a construction site up until the end. Or it's going to get behind.

Datafold: Right, you’re going to hit the problems anyway, so you might as well learn about it now.

Ryan: Exactly. Because a lot of problems didn't come out until we got 50 eyes on it. When it was just 16 of us, we could only do so much and we thought we were doing well. We picked a time to scale out to the data analysts, once we felt like we had the process and directions ironed out, but picking a sooner date would have helped with the project timelines. That’s true for all projects and easier said than done.

Granted, in a perfect world, you don't have other stakeholders with competing responsibilities and stuff like that. The data analyst teams were more front-facing with the stakeholders, while our only task as data engineers was the migration. So there's always the political side to that. But I feel like scaling earlier would only have helped us.

Datafold: Okay, thanks. Anything else?

Ryan: Automate as much as you can. I think that goes without saying for any type of project.

Datafold: I think that’s the dream of the industry when it comes to data migrations, they’re the last holdout for automation with all their edge cases.

Ryan: Exactly, yeah. It’s daunting, and it’s tough to decide if it’s worth our time to figure out an automated solution or just start working on table migration. We had a few instances of that where we just didn't know if we wanted to pull off two data engineers from migrating tables for three weeks to build a solution that may or may not make a difference. But obviously, the custom validator was a great idea that paid off.

The data migration stack: Results and reflections

Eighteen months after the project began, the team successfully migrated the healthcare system’s data to Snowflake. Mission-critical workloads now run faster, smoother, and with fewer errors.

Thoughtful automation, leadership, and tooling ensured the process remained efficient—even enjoyable:

The benefits of the migration are already paying off:

- Reliable operations: Dashboards and analytics assets run on time with fewer delays.

- Reusable tools: The custom validation tool provides ongoing validation for new data assets.

- Clearer data: Overhauled naming conventions make tables easier to navigate and use.

Tools that made it happen

- dbt + Airflow: Orchestrated the transformation logic and automated table migrations.

- GitHub Actions: Automated checks for incremental models, preventing regressions and manual bottlenecks.

- The in-house validation tool: Validated data row-by-row or through aggregates, ensuring accuracy across environments.

- Jira: Managed 1,000s of tickets to track migration progress and dependencies, avoiding duplicate efforts.

- Excel: Surprisingly essential for mapping old-to-new naming conventions and tracking the “spiritual lineage” of data.

The biggest surprise: Excel as an MVP candidate

In a migration packed with automation and cutting-edge tools, the most unexpected MVP candidate was Excel. The massive spreadsheet became the backbone for managing dependencies, mapping lineage, and tracking renaming conventions.

It wasn’t sophisticated, but it didn’t need to be. Excel worked because it provided a simple, reliable way to link the old system’s tangled structures to the modernized Snowflake environment. For all the advanced tools involved, sometimes the most basic solution is the one that keeps the project moving.

Disclosure: The information provided in this technical blog represents Ryan's personal experiences and opinions. It does not represent views of Children's Hospital of Philadelphia (CHOP).