Refactoring for the Future

Fabio Biondolillo, a Senior Analytics Engineer at Brooklyn Data, reflects on his first major data migration—navigating undocumented systems, demystifying black boxes, and balancing phased improvements with future goals.

For many engineers, data migration projects are often a trial by fire—a first-time challenge that introduces them to the complexities of legacy systems and the art of balancing short-term fixes with long-term strategy. When Fabio Biondolillo embarked on his first major migration as a Senior Analytics Engineer at Brooklyn Data Company, he was thrust into a world of undocumented processes and black-box systems.

"We’re giving ourselves a lot of cushion because there are so many unknowns," Fabio explains. "It’s a black box to them, but also a bit of a black box to us. There’s no documentation, and the people who wrote the code aren’t there anymore. There are so many stored procedures—like seven stored procedures populating one table—and it takes time to really digest what’s happening nightly."

Despite the challenges, Fabio and his team tackled the migration with a phased approach that prioritized making sense of legacy workflows while designing for the future. In this conversation, Fabio shares the lessons learned from turning undocumented systems into transparent processes, balancing immediate refactoring needs with long-term goals, and leveraging the unique insights of external consultants to tackle deeply entrenched workflows.

Navigating greenfield development with legacy migration

The project looked simple: transform a legacy system into a future-ready solution. But this wasn’t your typical migration, as Fabio quickly realized. While traditional migrations focus on transferring data as is, this project demanded a hybrid approach—combining elements of greenfield development with the constraints of migrating existing processes and outputs. He explains how they balanced refactoring, backend overhauls, and maintaining the integrity of downstream outputs to deliver both immediate and long-term value.

Datafold: How are you figuring out the workflow or process for this migration? Is there some sort of playbook that Brooklyn Data Company has rolled out, or does it vary between consultants?

Fabio: I’d say this is a pretty unique project. And I say that as someone working on my first project here, but from what I’ve heard, it’s quite unique in that the clients really don’t have much of a process at all currently. They’re doing everything pretty manually and don’t have much set up.

So, while it is a migration, it’s also kind of like a greenfield project in some ways. Most of the playbook we’re following is up to us to create a vision for what we think it should look like. Since their main source is still an Excel sheet, we’re trying to work with them to ensure that downstream stakeholders don’t see too much of a difference in their day-to-day operations until they absolutely have to.

We’re taking a very phased approach. We’re keeping what’s currently in place as is for now, and then we’ll revisit the overall structure and planning later. I don’t know if that fully answers your question, but we’re working closely with stakeholders to figure out what works best for everyone.

The dual nature of the project—balancing the need for immediate results with long-term innovation—meant Fabio and his team had to make critical decisions early. A top priority was ensuring that end users experienced no disruptions during the transition while overhauling the backend for future scalability.

Datafold: I was going to ask this later on, but did you take the lift-and-shift route first and refactor and optimize later?

Fabio: We did some of the refactoring in the first iteration. The idea is that anyone who uses the product from a project manager perspective shouldn’t see much of a change in their day-to-day. They should go to the same dashboard, and they should be able to plan the same way. But the way data gets from point A to point B will be drastically different, since we’re going to refactor it in dbt. No one outside of the data team will see what we’re doing, though.

This required significant effort to understand and replicate the legacy system’s outputs without simply duplicating its inefficiencies. Fabio’s team focused on designing an ingestion layer that could support long-term growth while ensuring that downstream reports remained intact.

Datafold: What were you refactoring?

Fabio: Their Power BI dashboards needed a lot of optimization. They’re using a lot of measures where they don’t necessarily need to—like nested measures that call other measures. Ideally, we’re going to try to take some of that and create a semantic layer in dbt or do something in dbt so that those dashboards load faster.
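The idea of moving nested measures upstream can be sketched in a few lines. The example below is a minimal illustration in plain Python rather than dbt SQL or DAX, and the `revenue`, `cost`, and `margin_pct` fields are hypothetical stand-ins, not the client’s actual measures. It computes a chain of dependent metrics (margin from revenue and cost, margin percentage from margin) once per project, so a dashboard would only need to read precomputed columns instead of evaluating measures that call other measures at query time.

```python
from collections import defaultdict

# Hypothetical source rows; in practice these would come from the warehouse.
rows = [
    {"project": "A", "revenue": 100.0, "cost": 60.0},
    {"project": "A", "revenue": 50.0, "cost": 20.0},
    {"project": "B", "revenue": 200.0, "cost": 150.0},
]


def build_project_metrics(rows):
    """Aggregate to one row per project and precompute derived metrics.

    Mirrors a nested-measure chain (margin_pct depends on margin, which
    depends on revenue and cost) but evaluates it once, upstream.
    """
    totals = defaultdict(lambda: {"revenue": 0.0, "cost": 0.0})
    for row in rows:
        totals[row["project"]]["revenue"] += row["revenue"]
        totals[row["project"]]["cost"] += row["cost"]

    metrics = []
    for project, t in sorted(totals.items()):
        margin = t["revenue"] - t["cost"]
        # Ratio is taken after aggregation; None avoids dividing by zero.
        margin_pct = margin / t["revenue"] if t["revenue"] else None
        metrics.append({
            "project": project,
            "revenue": t["revenue"],
            "cost": t["cost"],
            "margin": margin,
            "margin_pct": margin_pct,
        })
    return metrics


for metric_row in build_project_metrics(rows):
    print(metric_row)
```

Computing the ratio after summing revenue and cost, rather than averaging per-row ratios, keeps the result consistent with how a ratio measure typically evaluates at the project level. In dbt, the same logic would live in a SQL model at whatever grain the dashboards need.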

We worked on optimizing the ingestion layer and made sure their warehouse is malleable for them. We wanted their data people to be able to add new columns or metrics where they want to and understand exactly how data gets from point A to point B.

Before, they knew that the data came into SQL Server. After that, it was a bit of a black box to them until it reached Power BI. They didn’t know how to fix or work with what was in the middle, so one of the first things we did was work on that middle layer.

Datafold: That makes sense. So was the refactoring in dbt and the optimization seen as a distinct phase of the migration, or was it a separate project after the migration was done?

Fabio: It’s one and the same. In the next phase, we revisited the actual data model. Do we need this dimension? Could it be an OBT-style (one big table) model? Do these fact tables look the way we would want them to?
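An OBT-style model denormalizes a fact table with its dimension attributes so that downstream tools can read a single wide table. Here is a minimal sketch in Python using hypothetical `fact_hours` and `dim_project` inputs rather than anything from the actual project:

```python
# Hypothetical star-schema inputs: a fact table keyed by project_id and a
# project dimension. Names and values are illustrative only.
fact_hours = [
    {"project_id": 1, "week": "2024-W01", "hours": 40},
    {"project_id": 2, "week": "2024-W01", "hours": 12},
]
dim_project = {
    1: {"project_name": "Warehouse build", "manager": "pm_a"},
    2: {"project_name": "Dashboard refresh", "manager": "pm_b"},
}


def to_one_big_table(facts, dim):
    """Denormalize each fact row with its dimension attributes (OBT style).

    Unmatched keys keep the fact row with None attributes, like a left join,
    so no fact rows are silently dropped.
    """
    # Template of dimension columns, all None, for facts with no match.
    empty = {key: None for key in next(iter(dim.values()), {})}
    return [{**fact, **dim.get(fact["project_id"], empty)} for fact in facts]


for wide_row in to_one_big_table(fact_hours, dim_project):
    print(wide_row)
```

The left-join behavior is a deliberate choice: dropping fact rows with unmatched keys would quietly change totals, which is exactly the kind of discrepancy a migration QA pass tries to catch.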

Initially, we didn’t want to make any changes to the Power BI dashboard, so everything needed to look the same in that final presentation layer. We didn’t have the time or resources to change things in Power BI—that will come later. Only when we have that availability to fix Power BI can we also fix the fact table that feeds Power BI.
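Keeping the fact table that feeds Power BI unchanged amounts to treating its schema as a contract. One way to check that contract automatically is to compare the column names and types of the legacy table with those of the rebuilt dbt model. The helper below is hypothetical and assumes both schemas have already been fetched as `(column, type)` pairs, for example from each system’s information schema:

```python
def check_output_contract(legacy_columns, new_columns):
    """Compare the column contract of a legacy table with its rebuilt version.

    Both arguments are iterables of (column_name, data_type) pairs.
    Returns a dict of problems; an empty dict means the new model exposes
    exactly the same columns with the same types.
    """
    legacy = dict(legacy_columns)
    new = dict(new_columns)
    problems = {}

    missing = sorted(set(legacy) - set(new))
    extra = sorted(set(new) - set(legacy))
    type_changed = sorted(
        col for col in set(legacy) & set(new) if legacy[col] != new[col]
    )

    if missing:
        problems["missing"] = missing
    if extra:
        problems["extra"] = extra
    if type_changed:
        problems["type_changed"] = type_changed
    return problems


legacy_schema = [("project_id", "int"), ("hours", "decimal(10,2)")]
print(check_output_contract(legacy_schema, legacy_schema))
```

The check is strict by design: extra columns cannot break existing visuals, but flagging them makes every change to the presentation layer an explicit decision. A matching schema says nothing about the values, so it complements rather than replaces the data comparisons used in QA.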

When a fresh perspective matters in migrations

Outsourcing a migration project to consultants is often met with skepticism. Why not keep the work in-house or rely on specialized tools? Fabio explains how a fresh perspective can untangle even the most deeply rooted processes while acknowledging the challenges that come with it.

Datafold: There are strong views on whether these projects should be handled in-house, outsourced, or managed with a specialized tool, if you can even find one. Could you comment, at a meta level, on why consulting is the better route?

Fabio: Yeah, I guess I can. I’ll answer that and also play devil’s advocate to explore why people might argue against it. I think this project is a really good example for the consulting model because there are processes so deeply entrenched in how they do this work that people are really hesitant to change them.

There’s a lot of that "if it’s not broken, don’t fix it" mentality in-house. From the inside, it’s hard to see why the process doesn’t need to be so complex. Because we’re seeing it from a completely fresh perspective, we can immediately say, "this doesn’t need to be like this at all," which is harder to do when you’ve been band-aiding a solution for a long time and you know exactly how it works. To you, it makes sense. But if you keep handling it in-house, anyone new coming into the project who wants to understand what you’re doing or fix something can’t.

Having us be the first ones to ask, "What are you doing here?" and trying to understand the process is super helpful. It allows for a less biased approach to figuring out why something doesn’t need to work the way it currently does. But there’s also a lot of internal buy-in where they acknowledge that something isn’t necessarily broken, but it’s pretty janky, and they need help fixing it because they don’t have the in-house knowledge to do so.

On the other hand, because these processes are so deeply entrenched and their business workflows are specific to their needs, there’s usually not much documentation. It takes a lot of time for us to understand it. There’s a lot of upfront work before you become productive, and that takes some investment, so I can see why people might hesitate to bring in consultants, thinking, "It’ll take them forever to understand what we’re doing."

So until you understand why certain things are the way they are—without dismissing their presence in the first place—it’s a challenge. But in this case, it’s been really great. We’ve been working with stakeholders who are helpful and admit when they think something isn’t working right. They aren’t defensive about changing certain strategies. It’s been great.

Approaching QA in migrations

Ensuring data accuracy and consistency is a critical part of any migration, and QA plays a pivotal role in this process. Fabio walks through the early testing strategies his team is using, from gut checks and aggregate comparisons to why the project’s low data volume should keep QA straightforward.

Datafold: Circling back to the technical specifics of the migration itself, what sort of testing strategies are you using for QA?

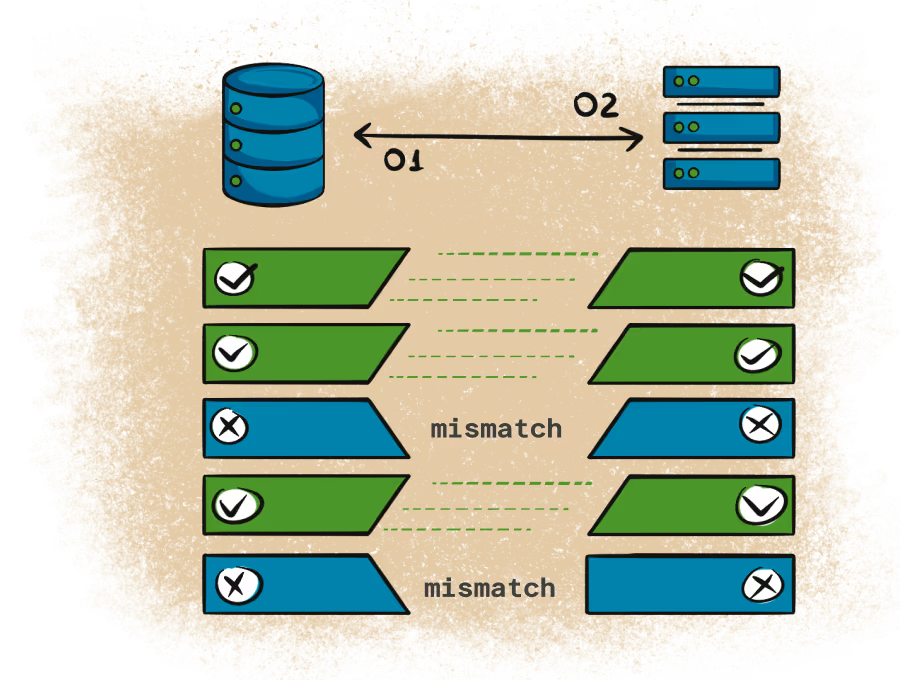

Fabio: We’re still so early in the modularization and ingestion phase that we’re just starting QA. Right now, we’re gut-checking: running some aggregations on both the legacy and new sides, picking specific examples, and doing an eyeball check.
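The aggregate gut check described here can be made repeatable with a small script. This sketch assumes the legacy and rebuilt outputs have been pulled into lists of dictionaries; `group_key` and `measure` are hypothetical parameter names for the grouping column and the numeric column being summed. It flags any group whose row count or total differs between the two systems:

```python
import math
from collections import defaultdict


def summarize(rows, group_key, measure):
    """Return row count and sum of `measure` per value of `group_key`."""
    summary = defaultdict(lambda: {"rows": 0, "total": 0.0})
    for row in rows:
        bucket = summary[row[group_key]]
        bucket["rows"] += 1
        bucket["total"] += row[measure]
    return dict(summary)


def compare_aggregates(legacy_rows, new_rows, group_key, measure, rel_tol=1e-9):
    """List (group, legacy_summary, new_summary) for every mismatched group."""
    legacy = summarize(legacy_rows, group_key, measure)
    new = summarize(new_rows, group_key, measure)
    mismatches = []
    for key in sorted(set(legacy) | set(new), key=str):
        a, b = legacy.get(key), new.get(key)
        if a is None or b is None:
            # Group exists on only one side.
            mismatches.append((key, a, b))
        elif a["rows"] != b["rows"] or not math.isclose(
            a["total"], b["total"], rel_tol=rel_tol
        ):
            mismatches.append((key, a, b))
    return mismatches
```

Checking row counts alongside totals catches cases where totals happen to match but rows were merged or duplicated along the way.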

The good thing about this specific migration is that the data volume isn’t very high. There are only about 25 tables we need to migrate. The hard part is understanding their logic for DML and DDL. That’s the biggest piece. Once we get the tables materialized, I think the QA process will be straightforward because the data volume is relatively low, and we won’t need to employ state-of-the-art QA tools.
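With only about 25 tables and low data volume, an exact row-level comparison is feasible without specialized tooling. Here is a minimal sketch, assuming each table fits in memory and that `columns` (a hypothetical parameter) lists the columns to compare:

```python
from collections import Counter


def diff_rows(legacy_rows, new_rows, columns):
    """Exact multiset diff of two row sets on the given columns.

    Returns (only_in_legacy, only_in_new) as Counters of row tuples, so
    duplicate rows are counted rather than collapsed.
    """
    legacy = Counter(tuple(row[c] for c in columns) for row in legacy_rows)
    new = Counter(tuple(row[c] for c in columns) for row in new_rows)
    # Counter subtraction keeps only positive counts.
    return legacy - new, new - legacy
```

Using `Counter` rather than sets preserves duplicate rows, which a set-based diff would hide. Floating-point columns may need rounding to a fixed precision before comparison, since the legacy and new systems can differ in the last decimal places.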

Datafold: Gotcha. In terms of tooling or platforms, you’ve mentioned a few already. Is there anything else you’re utilizing that’s migration-specific?

Fabio: One thing to mention is that we’re in the middle of their migration from SAP ByDesign to SAP S4, and the SAP setup is used for their actuals in our work stream. They currently have a connection between SAP ByDesign and SQL Server for their bronze layer, so to speak. During this migration, that bronze layer will transition to SAP S4, and the connection between SQL Server and SAP ByDesign will cease to exist.

What we’re doing now is connecting directly to SQL Server, so we don’t need to set up an SAP ByDesign connection that is going away anyway. We’re using Databricks notebooks to ingest data from SQL Server and store it as Parquet files in S3, then building a silver layer that converts those Parquet files into Delta tables in Databricks. When SAP S4 comes online, it will have its own new ingestion pipeline, but it will follow the same pattern: ingesting from SAP S4 into an S3 bucket and running the data through the same silver layer.

So, right now, it’s Databricks notebooks for ingestion, SQL Server as the source (which will become SAP S4), dbt for transformations, and Power BI for analysis.
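The ingestion design Fabio describes separates a source-specific bronze step from a shared silver layer, so swapping SQL Server for SAP S4 should change only where the raw Parquet files land. The sketch below models that routing in plain Python. The bucket name, path layout, `silver` schema, and entity names are all hypothetical, and the actual Spark reads and writes are left out because they depend on the Databricks workspace:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class BronzeSource:
    """Where raw extracts land; bucket and prefix values are hypothetical."""

    system: str  # e.g. "sqlserver" today, "sap_s4" later
    bucket: str
    prefix: str

    def parquet_path(self, table: str) -> str:
        return f"s3://{self.bucket}/{self.prefix}/{self.system}/{table}/"


def silver_table_name(entity: str, schema: str = "silver") -> str:
    """Silver Delta table name depends only on the logical entity."""
    return f"{schema}.{entity.lower()}"


def plan_ingestion(source: BronzeSource, entities: dict[str, str]) -> dict[str, tuple[str, str]]:
    """Pair each bronze Parquet location with its silver Delta table.

    `entities` maps a logical entity name to the source system's table name,
    so switching sources changes the bronze paths but not the silver names.
    """
    return {
        entity: (source.parquet_path(src_table), silver_table_name(entity))
        for entity, src_table in entities.items()
    }


current = BronzeSource("sqlserver", "example-bucket", "bronze")
print(plan_ingestion(current, {"actuals": "Actuals"}))
```

In a Databricks notebook, each pair could then drive a step along the lines of `spark.read.parquet(path).write.format("delta").mode("overwrite").saveAsTable(name)`, with the silver table names staying stable when the source system changes.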