Overcoming Legacy Data Migration Challenges with Confidence

Legacy data migration is full of risks. Learn how to overcome challenges like data integrity issues, schema mismatches, and downtime with the right strategies.

Moving legacy data to a modern system isn’t just a technical upgrade—it’s about upgrading how your business runs. Done right, migration improves performance, scales with your needs, and keeps historical data accessible without headaches. But it’s not as simple as hitting "copy and paste." Without a solid plan, you risk broken reports, missing records, and compliance issues that can be a nightmare to fix.

In other words, a poorly executed data migration will keep you up at night for weeks, if not months.

Older systems weren’t built for today’s data demands. Data types don’t map one-to-one, documentation is incomplete, and small differences snowball into costly downstream issues. A misplaced decimal throws off financial calculations. A missing timestamp skews customer history. Manual checks only catch so much, and by the time errors surface, the damage is already done. Stories of botched data migrations and the business damage they caused are not hard to find.

Automation, validation, and real-time comparisons take the guesswork out of migration and keep data accurate at scale. Instead of relying on manual spot checks, you can move forward with confidence, knowing your data arrives intact.

What is legacy data migration?

Moving data from outdated, typically on-premises systems to modern, cloud-based platforms should be simple, but legacy databases weren’t built with migration in mind. Inconsistent formats, missing documentation, and hard-coded business logic turn what seems like a straightforward transfer into a high-risk process. Without a solid plan, data can go missing, become inconsistent, or fail to function as expected in its new environment.

To avoid these pitfalls, you need to take control of every stage:

- Taking inventory of your starting point: You have to know what you’re migrating before you get started. Identify all your data sources, including databases, file systems, third-party systems, and obscure storage like tape archives or siloed spreadsheets. Assess their data volume, quality, and usage, and note duplicates, obsolete records, and any unstructured messes that could clog the migration.

- Transferring data from legacy systems: Older databases, mainframes, and custom-built applications weren’t built for today’s cloud environments. Before making the move, restructure outdated formats and clean up inconsistencies to avoid compatibility issues.

- Adapting schemas, formats, and metadata: Legacy databases often use outdated data types, proprietary structures, and non-standard field names that don’t map cleanly to modern systems. Standardize formats and align schemas upfront to prevent data mismatches and missing values.

- Handling dependencies and workflows: Hard-coded logic and interconnected workflows keep legacy systems running, but they don’t always translate to new environments. Untangling dependencies manually can be tedious and error-prone, making automation essential for a smooth transition.
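Of these stages, schema adaptation is the one that most readily benefits from tooling. The sketch below assumes a SQL Server-style source and a Snowflake-style target. It maps legacy column types through a lookup table and sends anything it doesn't recognize to a review list instead of guessing. The `TYPE_MAP` entries and the `map_schema` helper are hypothetical and only illustrative. A real mapping needs per-column decisions about precision, length, and time zones.

```python
# Hypothetical, deliberately incomplete mapping from SQL Server-style legacy
# types to Snowflake-style target types. Illustrative only.
TYPE_MAP = {
    "INT": "INTEGER",
    "BIT": "BOOLEAN",
    "MONEY": "NUMBER(19,4)",
    "DATETIME": "TIMESTAMP_NTZ",
    "NVARCHAR": "VARCHAR",
}


def map_schema(legacy_columns):
    """Map (column_name, legacy_type) pairs to target types.

    Returns a tuple (mapped, unmapped): a dict of columns with a known target
    type, and a list of columns that need a human decision before migration.
    """
    mapped, unmapped = {}, []
    for name, legacy_type in legacy_columns:
        # Strip length/precision so "NVARCHAR(50)" looks up as "NVARCHAR".
        # This sketch drops the length rather than carrying it over.
        base = legacy_type.split("(")[0].strip().upper()
        target = TYPE_MAP.get(base)
        if target is None:
            unmapped.append((name, legacy_type))
        else:
            mapped[name] = target
    return mapped, unmapped


if __name__ == "__main__":
    columns = [("id", "INT"), ("name", "NVARCHAR(50)"), ("path", "HIERARCHYID")]
    mapped, unmapped = map_schema(columns)
    print(mapped)    # {'id': 'INTEGER', 'name': 'VARCHAR'}
    print(unmapped)  # [('path', 'HIERARCHYID')]
```

Routing unknown types to a list, rather than defaulting them to a string type, keeps the mapping from silently flattening data that needs a deliberate decision.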

The reality of legacy migrations is messier than it looks. You might face decades-old data with no clear owner or transformation logic so tangled it’s baked into the system’s DNA. Bandwidth constraints can choke transfers, and unsupported hardware might force manual workarounds—like coaxing a dying server to limp through one last dump.

Why moving legacy data is harder than it looks

Migrating data from legacy systems to modern platforms involves more than just transferring information; it requires translating the underlying code and business logic that process this data. While tools exist to move data efficiently, converting legacy code—such as stored procedures, SQL scripts, and ETL mappings—into formats compatible with new systems is a complex and time-consuming task. It often involves untangling extensive codebases written in outdated languages or platforms, which modern ETL tools may not support.

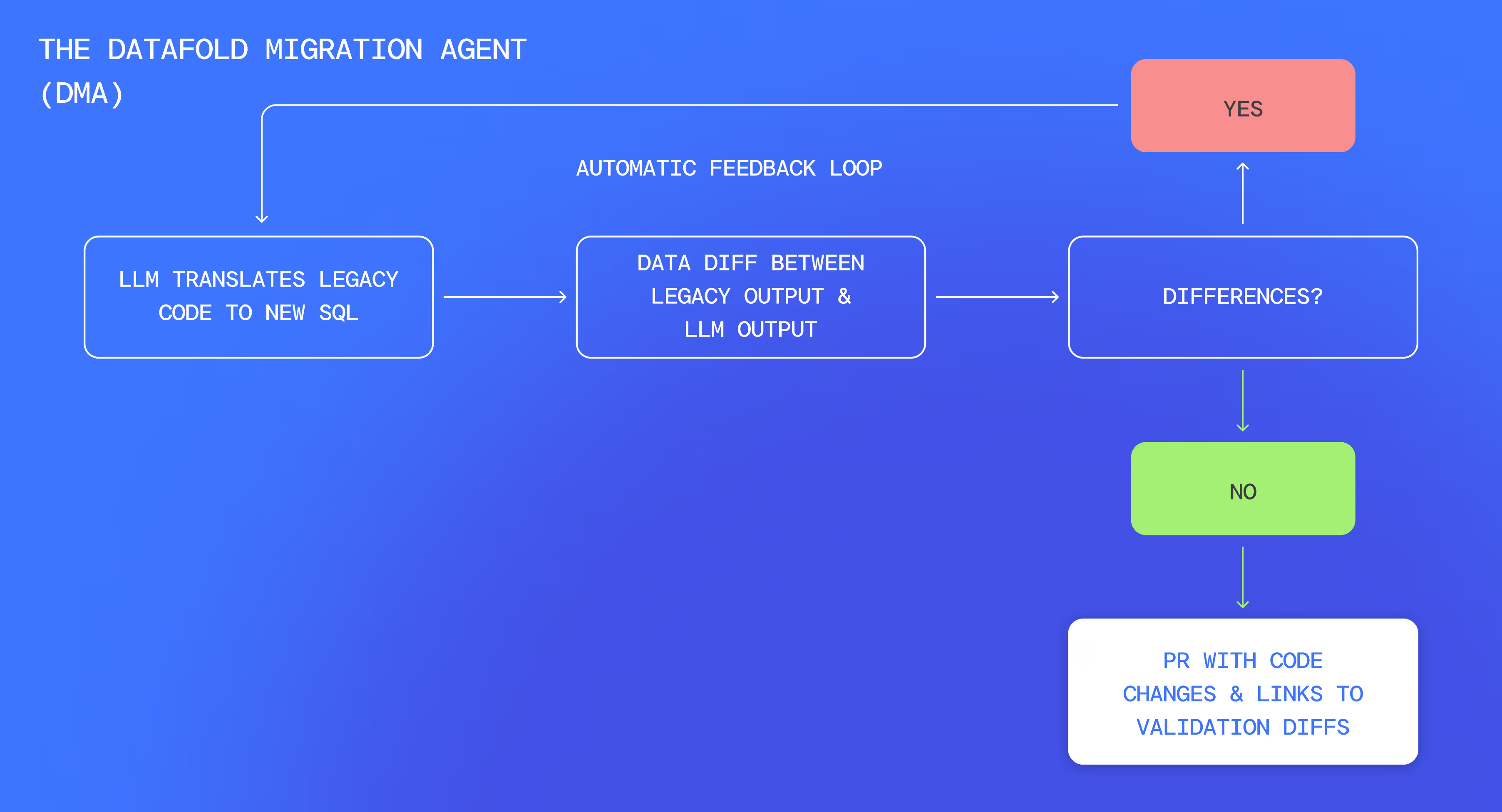

Datafold's Data Migration Agent (DMA) takes the pain out of migrating legacy code by automating both translation and validation. It uses AI to convert legacy SQL into modern SQL dialects or data transformation frameworks like dbt. Once the code is translated, DMA runs comparisons between the old and new systems, fine-tuning the code until every output matches. Teams no longer have to manually debug mismatches or rewrite queries from scratch; DMA handles the heavy lifting and speeds up the migration.

Automating these steps removes the biggest roadblocks in a migration. Organizations avoid the risks of broken logic and inconsistent data, while engineers reclaim time for higher-impact work. The result is a smoother transition in which the code powering your data works correctly in its new environment.

The 4 biggest migration mistakes and how to avoid them

Rushing the process or skipping validation makes migration harder than it needs to be. The best way to avoid costly mistakes is to catch problems early, test every step, and use the right tools to protect your data.

#1. Messy data creates bigger problems than you think

Migrating data usually isn’t a clean handoff. Sometimes it’s more like dragging a junkyard into a new house. Legacy systems can be riddled with sloppy formats, ghost records, and duplicates that look harmless until they detonate. Imagine financial data where one system rounds amounts to the nearest cent and the other truncates them: pennies vanish, and suddenly your balance sheet is off by as much as $10,000 across a million rows. Or picture customer tables with “John Smith” listed 15 times because variants like “Jhon” or “Smith Jr.” never got deduplicated, fouling up your CRM on day one.
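The rounding-versus-truncation scenario is easy to reproduce. This minimal sketch uses Python's `decimal` module to round and truncate the same amounts to cents, then totals the gap. Each row can differ by at most one cent, so a million rows can drift by at most $10,000. That worst case occurs when every amount has a third decimal digit of 5 or more, like the hypothetical 19.999 below. The helper name `rounding_drift` and the choice of half-up rounding are assumptions standing in for whatever the real systems do.

```python
from decimal import Decimal, ROUND_DOWN, ROUND_HALF_UP

CENT = Decimal("0.01")


def rounding_drift(amounts):
    """Total difference between rounding and truncating each amount to cents.

    One system is assumed to round half-up and the other to truncate toward
    zero; both are stand-ins for the real systems' behavior.
    """
    rounded = sum(a.quantize(CENT, rounding=ROUND_HALF_UP) for a in amounts)
    truncated = sum(a.quantize(CENT, rounding=ROUND_DOWN) for a in amounts)
    return rounded - truncated


if __name__ == "__main__":
    # A million hypothetical rows, each with a third decimal digit >= 5.
    amounts = [Decimal("19.999")] * 1_000_000
    print(rounding_drift(amounts))  # 10000.00
```

With realistic data the drift is usually smaller than the worst case, but it is still systematic, which is exactly why it rarely shows up in spot checks.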

These aren’t “whoops” moments you fix with a quick sweep. Post-migration, you’re stuck reverse-engineering cryptic entries—like a product code that’s just “X” because the original spec died in 1995—or manually reconciling ledgers while the CFO breathes down your neck.

Healthy Directions ran into similar problems when migrating from SQL Server to Snowflake. Validating data on both sides was difficult, especially with complex stored procedures and SSIS packages. Using Datafold’s cross-database data diffs, they pinpointed data issues early, an approach that saved them several weeks of manual validation.

#2. Legacy databases don’t always play nice with modern systems

Older databases weren’t built with cloud migrations in mind. They rely on denormalized schemas, platform-specific indexing structures, and proprietary data formats that don’t translate cleanly to cloud platforms. If you migrate data as-is, queries fail, key fields fill with nulls, and previously reliable applications start throwing errors.

Eventbrite faced this issue when migrating from a legacy Hive/Spark/Presto stack to Snowflake. With more than 300 models to rebuild, they had to make sure every new transformation produced the same results as the original. The company automated that validation with Datafold’s Data Diff, which reduced errors and accelerated the migration.

#3. Slow migrations frustrate teams and disrupt business

Migration delays are a gut punch—teams grind their teeth while ops teeter on the edge. Clunky pipelines chug along like molasses, bogging down queries, timing out APIs, and stranding you in limbo between a creaky old system and a half-baked new one. What should’ve been a clean leap turns into a slog of lag, rage-quits, and endless “why isn’t this done yet?” firefights.

But a slow migration isn’t inevitable. Tuning your pipelines for incremental data transfers and stress-testing their performance help you catch bottlenecks before they slow things to a crawl. A little upfront testing goes a long way: you’ll keep everything running smoothly and avoid unnecessary downtime.
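One common way to make transfers incremental is keyset pagination. You copy rows in primary-key order, one bounded batch at a time, and remember the last key copied. The sketch below uses Python's built-in `sqlite3` module as a stand-in for both systems. `copy_in_batches` is a hypothetical helper, and it makes three assumptions:

- the key column is unique and non-null
- the key column is indexed
- the destination table already exists with the same column order

Table and column names are interpolated directly, so they must come from trusted configuration, not user input.

```python
import sqlite3


def copy_in_batches(src, dst, table, key, batch_size=1000):
    """Copy `table` from src to dst in key order, committing after each batch.

    Filtering on `key > last_key` (keyset pagination) makes each batch a range
    read on the indexed key instead of an ever-growing OFFSET scan, and batches
    that were already committed survive a later failure. Returns rows copied.
    """
    last_key = None
    copied = 0
    while True:
        if last_key is None:
            cur = src.execute(
                f"SELECT * FROM {table} ORDER BY {key} LIMIT ?", (batch_size,)
            )
        else:
            cur = src.execute(
                f"SELECT * FROM {table} WHERE {key} > ? ORDER BY {key} LIMIT ?",
                (last_key, batch_size),
            )
        rows = cur.fetchall()
        if not rows:
            break
        columns = [d[0] for d in cur.description]
        placeholders = ", ".join(["?"] * len(columns))
        dst.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)
        dst.commit()
        copied += len(rows)
        # Remember where this batch ended so the next query resumes after it.
        last_key = rows[-1][columns.index(key)]
    return copied


if __name__ == "__main__":
    src, dst = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
    for conn in (src, dst):
        conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
    src.executemany(
        "INSERT INTO orders VALUES (?, ?)", [(i, i * 1.5) for i in range(1, 2501)]
    )
    print(copy_in_batches(src, dst, "orders", "id", batch_size=1000))  # 2500
```

Persisting `last_key` somewhere durable would let a restarted job resume where it stopped. This sketch leaves that out for brevity.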

Faire prioritized speed and accuracy when migrating from Redshift to Snowflake. To keep operations running smoothly, they used Datafold’s Data Diff to validate data integrity throughout the process. This approach accelerated their migration by six months while maintaining accuracy and consistency at scale.

#4. Migration problems stay hidden until they cost you

What you don’t catch during migration can and will come back to haunt you. Many teams assume that if the migration completes without errors, everything must be fine. But without deep testing, hidden issues like truncated records, schema mismatches, and misaligned foreign keys go unnoticed—until they break critical operations when it’s too late to fix them easily.

These problems don’t have to catch you off guard. Continuous monitoring and automated comparisons between your source and destination data flag issues before they turn into full-scale disasters. Row-by-row validation and data diffing tools catch what spot checks miss, keeping your migration clean and accurate.
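Misaligned foreign keys are a good example of a check that is cheap to automate. Snowflake, for instance, accepts foreign key declarations on standard tables but does not enforce them, so orphaned child rows can load without complaint. This minimal sketch again uses `sqlite3` as a stand-in. It finds child rows whose key matches no parent row by using a LEFT JOIN anti-join. The `find_orphans` helper and the `customers`/`orders` tables are hypothetical.

```python
import sqlite3


def find_orphans(conn, child, fk_col, parent, pk_col):
    """Return child rows whose non-null foreign key matches no parent row.

    A LEFT JOIN keeps every child row; rows with no matching parent come back
    with NULL parent columns, which the WHERE clause selects. Identifiers are
    interpolated, so they must be trusted values.
    """
    sql = (
        f"SELECT c.* FROM {child} AS c "
        f"LEFT JOIN {parent} AS p ON c.{fk_col} = p.{pk_col} "
        f"WHERE c.{fk_col} IS NOT NULL AND p.{pk_col} IS NULL"
    )
    return conn.execute(sql).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY)")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER)")
    conn.executemany("INSERT INTO customers VALUES (?)", [(1,), (2,)])
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)", [(10, 1), (11, 2), (12, 3), (13, None)]
    )
    print(find_orphans(conn, "orders", "customer_id", "customers", "id"))  # [(12, 3)]
```

Rows with a NULL foreign key are excluded on purpose. Whether those are legitimate is a separate business rule.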

How automated data validation helps overcome challenges

Migrating legacy data without automated validation is a gamble. Small inconsistencies slip through, only to cause major failures later. Yet many teams still rely on manual SQL queries, spot checks, and visual inspections—approaches that are slow, unreliable, and impossible to scale. Datafold’s Data Migration Agent (DMA) eliminates this guesswork by automating code conversion and running automated, row-level comparisons across databases, flagging discrepancies before they impact production.
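Row-level comparison across databases does not have to mean pulling every row into one place. A common technique works in three steps:

1. Checksum ranges of primary keys on each side.
2. Compare the checksums.
3. Subdivide only the ranges that disagree, until they are small enough to compare row by row.

The sketch below illustrates that general idea on in-memory dicts standing in for the two tables. It assumes integer primary keys, and the helper names are hypothetical. It is not a description of how DMA works internally. In a real system each side would compute `segment_checksum` with a SQL aggregate, so only counts and checksums cross the network.

```python
import hashlib


def segment_checksum(rows, lo, hi):
    """Return (row_count, checksum) for rows with lo <= key < hi.

    `rows` maps integer primary keys to row tuples. The checksum is a sum of
    per-row hashes modulo 2**64, so it does not depend on row order.
    """
    count, total = 0, 0
    for key, row in rows.items():
        if lo <= key < hi:
            digest = hashlib.md5(repr((key, row)).encode()).digest()
            total = (total + int.from_bytes(digest[:8], "big")) % (1 << 64)
            count += 1
    return count, total


def diff_keys(source, target, lo, hi, min_segment=16):
    """Return sorted keys in [lo, hi) whose rows differ or exist on one side only."""
    if segment_checksum(source, lo, hi) == segment_checksum(target, lo, hi):
        return []  # Matching segment: skip its rows entirely.
    if hi - lo <= min_segment:
        # Small segment: fall back to a direct row-by-row comparison.
        return [k for k in range(lo, hi) if source.get(k) != target.get(k)]
    mid = (lo + hi) // 2
    return diff_keys(source, target, lo, mid, min_segment) + diff_keys(
        source, target, mid, hi, min_segment
    )


if __name__ == "__main__":
    source = {i: (i, f"customer-{i}") for i in range(1000)}
    target = dict(source)
    del target[42]                        # a row that never arrived
    target[500] = (500, "customer-5OO")   # a silently corrupted value
    print(diff_keys(source, target, 0, 1000))  # [42, 500]
```

When most segments match, only a handful of ranges are ever examined row by row. Matching checksums could in principle collide, but with a 64-bit sum of hashes that risk is negligible for validation purposes.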

How automated validation protects your data

Automated validation takes the guesswork out of the process. Datafold’s cross-database data diffing scans entire datasets in real time, catching what manual checks miss:

- Schema inconsistencies: Structural differences between legacy and modern databases can cause unexpected failures. Automated validation flags issues like missing constraints, incompatible data types, or renamed columns before they create problems.

- Missing rows: Data gaps can lead to incomplete reporting and operational disruptions. Automated checks identify records that didn’t make it to the destination system, ensuring every row transfers successfully.

- Mismatched values: Subtle discrepancies—like rounding differences or misformatted timestamps—can throw off analytics and financial calculations. Automated validation highlights these inconsistencies before they cause reporting errors.
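The three checks above can be combined into a single comparison report. This sketch assumes both tables are reachable through Python's `sqlite3` module and share a primary key column. It does two things:

- reads each table's declared column types with SQLite's `PRAGMA table_info`
- compares rows on the shared columns, listing missing rows, extra rows, and mismatched values

`compare_tables` and its report keys are hypothetical names. Loading whole tables into memory this way only suits modest table sizes.

```python
import sqlite3


def column_types(conn, table):
    """Map column name -> declared type, via SQLite's PRAGMA table_info."""
    # Each PRAGMA row is (cid, name, type, notnull, dflt_value, pk).
    return {row[1]: row[2].upper() for row in conn.execute(f"PRAGMA table_info({table})")}


def compare_tables(src, dst, table, key):
    """Report schema differences, missing/extra rows, and mismatched values."""
    src_types, dst_types = column_types(src, table), column_types(dst, table)
    shared = sorted(src_types.keys() & dst_types.keys())
    report = {
        "missing_columns": sorted(src_types.keys() - dst_types.keys()),
        "type_changes": {
            c: (src_types[c], dst_types[c]) for c in shared if src_types[c] != dst_types[c]
        },
    }

    def load(conn):
        # Select only shared columns so rows compare position by position.
        cur = conn.execute(f"SELECT {', '.join(shared)} FROM {table}")
        key_pos = shared.index(key)
        return {row[key_pos]: row for row in cur.fetchall()}

    src_rows, dst_rows = load(src), load(dst)
    report["missing_rows"] = sorted(src_rows.keys() - dst_rows.keys())
    report["extra_rows"] = sorted(dst_rows.keys() - src_rows.keys())
    report["mismatched_rows"] = sorted(
        k for k in src_rows.keys() & dst_rows.keys() if src_rows[k] != dst_rows[k]
    )
    return report


if __name__ == "__main__":
    src, dst = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
    src.execute("CREATE TABLE payments (id INTEGER PRIMARY KEY, amount REAL, note TEXT)")
    dst.execute("CREATE TABLE payments (id INTEGER PRIMARY KEY, amount DOUBLE)")
    src.executemany("INSERT INTO payments VALUES (?, ?, ?)",
                    [(1, 10.0, "a"), (2, 20.5, "b"), (3, 30.0, "c")])
    dst.executemany("INSERT INTO payments VALUES (?, ?)",
                    [(1, 10.0), (2, 20.49), (4, 40.0)])
    print(compare_tables(src, dst, "payments", "id"))
```

In this example the report flags the following:

- the dropped `note` column
- the `amount` type change from REAL to DOUBLE
- the missing row 3
- the extra row 4
- the value mismatch in row 2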

Avoid the "square peg, round hole" problem in your data migration

Legacy databases and modern cloud platforms don’t always get along. Data types don’t match, indexing works differently, and even simple formatting rules can throw things off. If you don’t catch these mismatches early, things start breaking. Constraints go missing, relationships fall apart, and records don’t line up the way they should. Before you know it, reports are wrong, analytics are unreliable, and dashboards stop making sense.

Schema-related issues that can break a migration include:

- Non-deterministic queries: Operations such as concatenating values across rows without an explicit ORDER BY can return results in a different order on the new system, or even from run to run, making validation unreliable.

- Conflicting data types: Numeric fields, timestamps, and text values get interpreted differently across platforms, leading to incorrect values and conversion errors.

- Indexing differences: Legacy databases rely on indexing structures that don’t exist in modern cloud platforms, which slows down queries and creates performance bottlenecks.

- Collation mismatches: Differences in case sensitivity, special-character handling, or sorting rules can change which values compare as equal, causing errors that didn’t exist in your legacy system.
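Two of these issues lend themselves to quick programmatic checks. The sketch below uses hypothetical helper names and covers each in turn:

- It compares delimited aggregates as sorted lists, so ordering noise from unordered concatenation doesn't register as a mismatch.
- It groups values that differ only by letter case. A case-insensitive legacy collation treats these as equal, but a case-sensitive target does not.

Normalizing only hides the ordering problem. The sturdier fix is to make the query deterministic on both sides, for example with `STRING_AGG(...) WITHIN GROUP (ORDER BY ...)` in SQL Server or `LISTAGG(...) WITHIN GROUP (ORDER BY ...)` in Snowflake. The splitting approach also assumes no item contains the separator.

```python
from collections import defaultdict


def normalize_agg(value, sep=","):
    """Canonical form of a delimited aggregate: split, sort, and rejoin."""
    if value is None:
        return None
    return sep.join(sorted(value.split(sep)))


def case_collisions(values):
    """Group values that are equal when compared case-insensitively.

    Returns {casefolded_value: sorted distinct spellings} for every group with
    more than one spelling. casefold() only approximates a case-insensitive
    collation; real collations can also differ on accents and sort order.
    """
    groups = defaultdict(set)
    for v in values:
        groups[v.casefold()].add(v)
    return {k: sorted(g) for k, g in groups.items() if len(g) > 1}


if __name__ == "__main__":
    # Same members, different order: equal after normalization.
    print(normalize_agg("b,c,a") == normalize_agg("a,b,c"))  # True
    # "ACME" and "Acme" matched under the legacy collation but not in the target.
    print(case_collisions(["ACME", "Acme", "Globex"]))  # {'acme': ['ACME', 'Acme']}
```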

These inconsistencies make it nearly impossible to confirm that migrated data actually functions the same way as the original. Without a way to catch these issues early, teams often find themselves troubleshooting long after the migration is complete.

A structured approach keeps these issues from turning into major roadblocks. By aligning datasets before migration, teams can catch mismatched values, schema drift, and missing rows before they cause downstream failures. Datafold’s DMA Source Aligner helps by creating a stable, consistent view of source data before migration. Its cross-database data diffing then flags discrepancies like dropped columns or incorrect values early in the process. With the right validation in place, teams can migrate with confidence.

Catching the "silent errors" that can wreck your migration

Not every migration error comes with a flashing red alert. Some slip through unnoticed—just subtle enough to evade basic validation but serious enough to break reports, integrations, and compliance requirements. These errors don’t show up until it’s too late, creating data integrity issues that take months to untangle.

The most common silent errors that go undetected are:

- Missing rows: Certain records never make it to the new system, leaving datasets incomplete. Reports, compliance audits, and downstream analytics all suffer as a result.

- Duplicate records: Extra copies inflate calculations, distorting financial reports, customer insights, and reconciliation efforts.

- Rounding inconsistencies: Numbers round differently in the target system, throwing off revenue, inventory, and performance metrics.

- Schema mismatches: Column orders shift, constraints break, or data types don’t align, leading to misaligned records and failed queries.

These errors are often hard to catch. Everything can look fine on the surface—row counts match, schemas appear identical, and nothing raises immediate red flags. But then finance runs a reconciliation report and spots discrepancies in total revenue. The culprit is a rounding inconsistency in the new system, shifting balance calculations just enough to create inaccurate financial statements.
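The scenario above, where row counts match but totals disagree, shows why reconciliation has to look past counts. This minimal sketch assumes rows arrive as dicts with hypothetical `id` and `amount` columns. It compares counts, totals, duplicate keys, and missing keys together. Amounts are converted through `str` into `Decimal`, so binary floating-point noise doesn't masquerade as a discrepancy. The `reconcile` helper is illustrative, not part of any particular tool.

```python
from collections import Counter
from decimal import Decimal


def reconcile(source_rows, target_rows, key="id", amount="amount"):
    """Compare row counts, column totals, and duplicate or missing keys."""

    def total(rows):
        # str() first so a float like 0.1 becomes Decimal("0.1"), not a long binary fraction.
        return sum((Decimal(str(r[amount])) for r in rows), Decimal("0"))

    def duplicates(rows):
        return sorted(k for k, n in Counter(r[key] for r in rows).items() if n > 1)

    return {
        "count_match": len(source_rows) == len(target_rows),
        "total_diff": total(target_rows) - total(source_rows),
        "duplicate_keys": {"source": duplicates(source_rows), "target": duplicates(target_rows)},
        "missing_keys": sorted({r[key] for r in source_rows} - {r[key] for r in target_rows}),
    }


if __name__ == "__main__":
    source = [{"id": 1, "amount": "10.005"}, {"id": 2, "amount": "20.015"}]
    # Same row count, but the target truncated each amount to cents.
    target = [{"id": 1, "amount": "10.00"}, {"id": 2, "amount": "20.01"}]
    print(reconcile(source, target))
```

In this example `count_match` is true while `total_diff` is -0.010. The same report also catches a subtler case: one row missing and another duplicated, which leaves the count unchanged.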

With a tool like Datafold’s DMA, these silent errors don’t slip through. It runs scalable, row-by-row comparisons across your source and destination databases, using advanced data diffing to flag missing records, duplicated entries, unexpected value shifts, and schema inconsistencies. Instead of guesswork, you get clear, verifiable proof that every number, record, and value transfers exactly as intended.

Your migration pipeline shouldn’t be a black box

Migrations shouldn’t feel like a leap of faith—but too often, they do. Data moves from one system to another, and teams hope everything transfers correctly. Then reality hits. Dashboards break, reports don’t match, and suddenly, entire datasets are missing. Now, you’re stuck digging through logs, comparing records by hand, and spending hours troubleshooting a problem that should’ve been caught earlier.

There’s no need to accept this scenario as inevitable. The Datafold DMA plugs directly into your ETL workflows, running automated data diffing and schema validation in real time. Instead of scrambling to fix issues after the fact, you’ll see exactly what’s happening at every stage, keeping your migration controlled, transparent, and error-free.

Why Datafold’s DMA makes legacy migrations less risky

Migrating legacy data feels like walking a tightrope—one wrong move and you’re dealing with broken reports and hours of troubleshooting. Manual verification methods don’t scale, and even a tiny inconsistency can snowball into major downstream problems. Without visibility into how data changes across systems, teams are left guessing long after cutover.

Our DMA takes the risk out of the process. Instead of relying on manual SQL checks or last-minute audits, DMA automates validation at every stage. It compares source and target datasets in real time, catching mismatched records, schema drift, and missing values before they cause trouble.

Use DMA for database data diffing, real-time observability, and seamless ETL integration—so you’re never left guessing. For a smoother, error-free migration, see how Datafold’s DMA keeps your data accurate from day one—request a demo.
