The Anatomy of a Data Migration

Discover the essential phases of data migration, from planning to validation, and learn how to overcome challenges for a seamless, successful migration.

The Anatomy of a Data Migration is not meant to give you a 101 on human anatomy; it’s meant to help you understand how the different migration phases connect to each other and how to keep each part “healthy” for a successful, timely migration.

Use this guide to learn more about the beautiful complexities of data migration challenges and solutions (and the human body!), and how to succeed at each step of a migration journey.

The Brain: Planning

Every successful migration starts with strong project management: identifying goals, auditing legacy systems, determining migration strategies, and setting a realistic timeline. And planning is the brain behind the operation—strategic, analytical, and always thinking ahead. Like your brain coordinating every function of your body, a well-crafted migration plan anticipates challenges and ensures every step aligns with your goals.

Identify goals

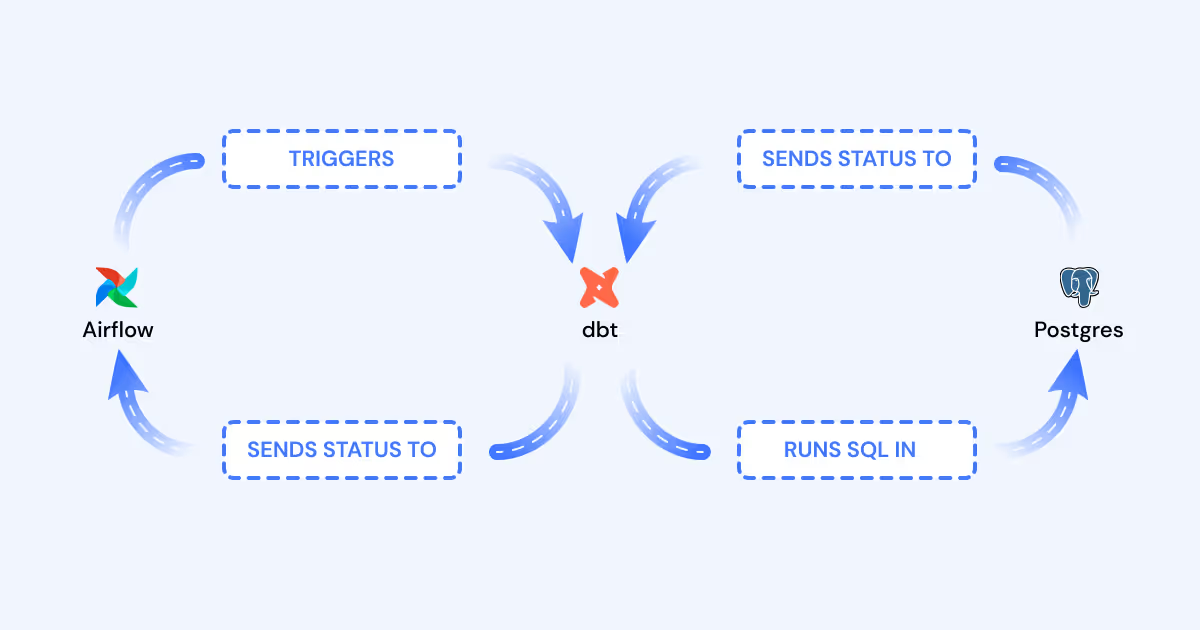

In this part of planning, data teams need to determine the scope of the migration (i.e., is the database being migrated? Is the business logic processes also being modernized to something like dbt?). At this phase, if it’s not already been determined by higher-ups, decisions around the new technologies (e.g., data warehouse vs data lake, dbt vs stored procedures) will be made.

Migration strategy

One of the most important decisions an organization needs to make before a migration is the data migration strategy: to lift-and-shift or refactor-as-you-go. We’ve written extensively on why we think lift-and-shift is the most time and cost-efficient way to migrate, but the decision made here will have substantial impact on the timeline and people involved in the migration.

Audit legacy systems

For teams migrating hundreds or thousands of tables, there’s likely some technical debt they can get rid of completely, or worry about migrating later. This stage of planning is important in determining the overall scope of the migration, and can help determine if a lift-and-shift or refactor-as-you go strategy is more appropriate for your team.

Determining timelines

Lastly, creating a timeline that the data team and business stakeholders can be held accountable to is vital. Data teams and business units should align on this well before the migration work actually starts, and the data team should maintain transparency and communication if timelines dramatically shift in-migration.

All of this planning is particularly critical for managing the dual-system phase of migrations: Running legacy and new systems in parallel is inevitable. Plan how to synchronize data between systems, define clear roles for each, and establish a timeline to transition fully to the new platform. Mismanaging this phase can lead to data drift, stakeholder confusion, and prolonged inefficiencies.

The Lungs: Code conversion

The lungs extract oxygen from inhaled air, helping pass oxygen through the body and exhaling carbon dioxide released from the tissues. To us, the conversion of air into oxygen is parallel to the vital period of code conversion during a data migration.

Legacy SQL scripts, stored procedures, and business logic in GUI systems (e.g., Alteryx, Informatic) must be translated into the SQL of the new platform or transformation workflow (e.g., dbt, SQLMesh).

- Stored procedures/custom SQL to more modular frameworks dbt: In a migration like this, the existing SQL code needs to be translated to the new schema formatting/naming conventions in the new warehouse, SQL needs to be converted into the new SQL dialect, and, if moving to a new tool like dbt, new code paradigms need to be adopted.

- GUI logic (e.g., Matillion, Informatic, Alteryx) to stored procedures/SQL/dbt: For teams migrating off a GUI-based system to storing business logic in code, the business logic embedded in the GUI must be extracted (typically as XML). Only after that can conversion between XML and SQL begin…ouch!

Today, manual code translation increases the risk of errors, inconsistencies, and inefficiencies, leading to incorrect or broken data workflows. In addition, considerable (and valuable!) time can be wasted away Googling and understanding slight SQL nuances between legacy and new databases.

At Datafold, we highly recommend streamlining code translation with automated tools (like Datafold’s Data Migration Agent!) to convert legacy SQL scripts, stored procedures, and business logic into the new platform's language. (C’mon, code conversion should be as easy as breathing in air!) We also recommend version controlling all data transformation/business logic code; track changes to SQL code with Git or a similar system to ensure accountability and rollback capability.

The Heart: Migration validation

The heart is the most important organ in the circulatory system, delivering blood and keeping the body alive. For a data migration, there can be no success or survival without proven migration validation.

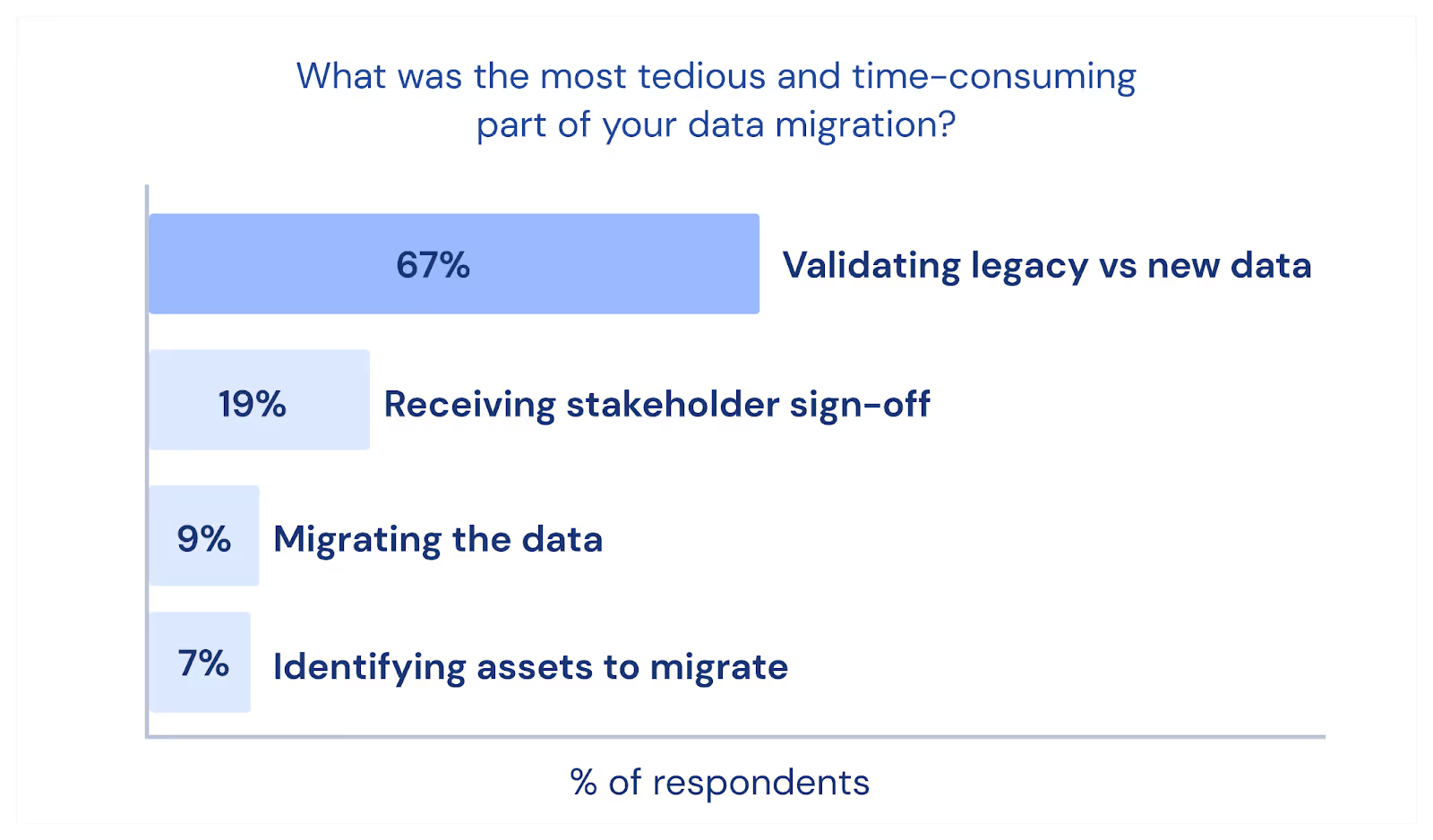

After data is moved, teams must validate the results to ensure derived data—aggregations, metrics, and value-level data values—matches between systems. 67% of respondents in our recent poll said the hardest, most tedious part of a migration was validating the legacy system’s data with their new system’s data. In this phase, you need to prove to yourself, your team, and your stakeholders that parity exists between your legacy and new systems.

A migration isn’t successful just because the data has been moved—it’s successful when stakeholders trust that the migrated data is identical to the original and data users adopt the new system.

Stakeholders don’t just want to know the data was migrated; they want evidence of parity. Without this proof, skepticism creeps in. If stakeholders doubt the accuracy of the new system, adoption stalls, the legacy system lingers, and the migration becomes an expensive bottleneck.

Validating data isn’t easy. How do you compare datasets across systems with different structures, types, and formats? Simple row counts and aggregated metrics won’t cut it—they can’t catch mismatched values or subtle discrepancies in derived metrics.

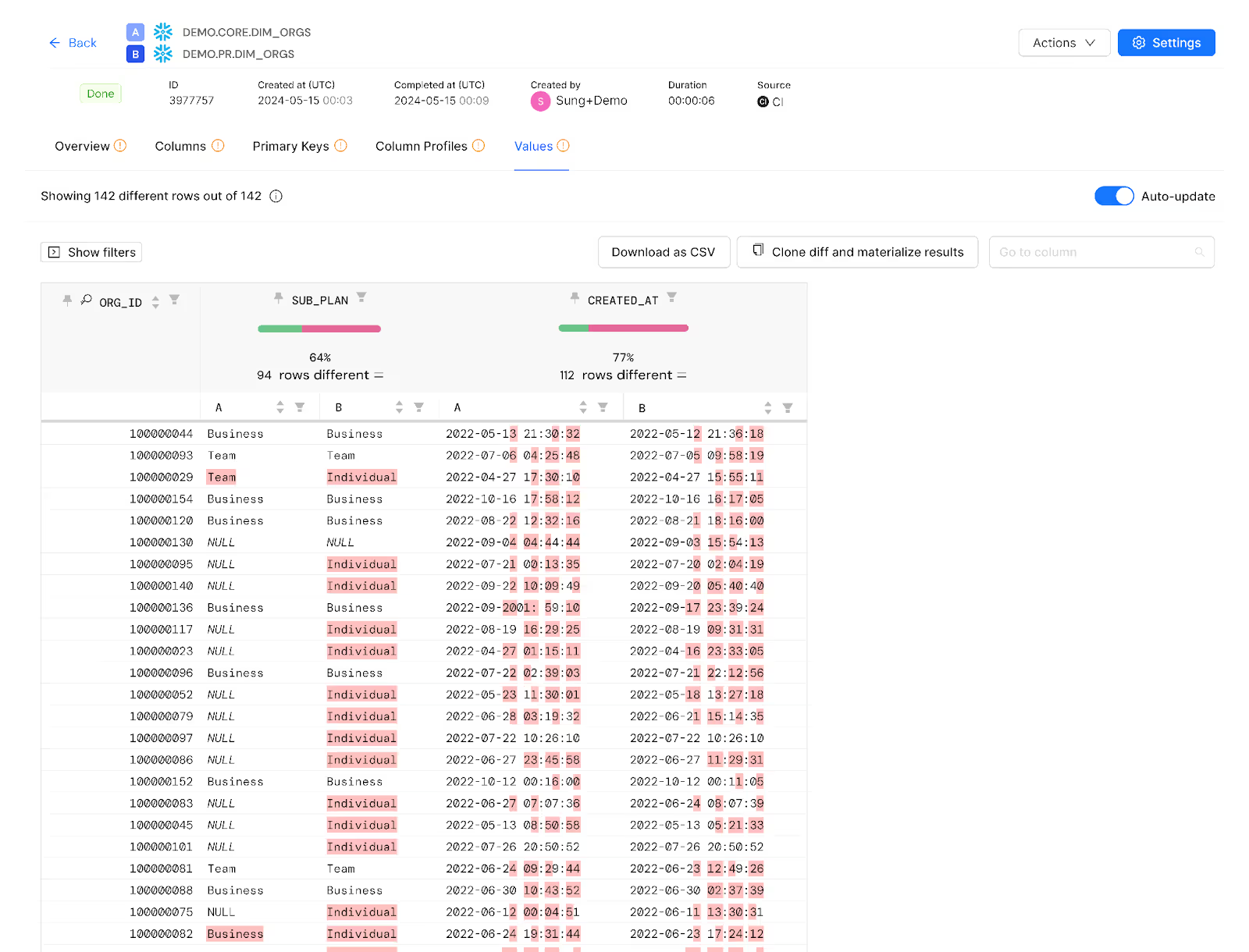

Introducing cross-database diffing: Your organs, super-powered

Like a “git diff” for databases, Data Diff compares data between source and target systems at a granular, row-level detail. It doesn’t just tell you whether the data matches; it shows exactly where and why it doesn’t.

Clear proof of parity ensures stakeholder confidence, accelerates adoption, and avoids costly delays. At Datafold, we believe data teams should do the following to confidently prove the success of a migration:

- Create a parity report: Summarize critical validation metrics, highlighting consistency across key tables and business-critical metrics.

- Visualize discrepancies: If any issues remain, use value-level data diffs to quickly identify and address remaining data quality issues.

- Gather formal approvals: Use a checklist or sign-off document to align stakeholders on the migration’s success and readiness for adoption. This could look like having a row for every migrated table in a “sign-off” spreadsheet that is reviewed by both a technical team member, and a business user who has significant context into that data.

The Liver: The people

The liver is low-key the powerhouse of the body; responsible for digestion, blood purification and blood sugar regulation, immunity—keeping the body in order. And while data migrations are undoubtedly deeply technical projects for organizations to undertake, it’s the people who define the scope, keep to timelines, and actually make a data migration happen.

While a data migration may be one of the most technically challenging projects a data team to work on, it will never be successful without the collaboration between three core units: the data team, the business stakeholders, and external consultants (if brought on).

The data team

Role: These include data engineers, analysts, and architects who handle the technical execution of the migration.

Responsibilities:

- Design and implement the migration plan.

- Extract, transform, and load data across systems.

- Perform code translation.

- Test and validate data accuracy, integrity, and performance post-migration.

- Troubleshoot issues and optimize processes.

- Ensure minimal downtime to existing systems and workflows.

The business stakeholders

Role: They represent the interests of the business and ensure the migration aligns with business goals, these may include roles like the CFO, technical marketers (e.g., Growth Marketing), and revenue analysts.

Responsibilities:

- Define requirements and success criteria for the migration (e.g., data quality, reporting needs).

- Aid in the prioritization of critical datasets, processes, and use cases for business continuity.

- Validate and approve outcomes to confirm business value.

- Provide feedback on any discrepancies or impacts on operations.

Consultants

Role: External experts who provide strategy, tools, and best practices to support the migration process. They are often brought in for larger, more complex data migrations at the enterprise level.

Responsibilities:

- Assess existing systems and recommend the best approach for migration.

- Offer specialized knowledge on tools, platforms, and data governance.

- Facilitate project management and timeline adherence.

- Provide additional resources for technical implementation or training.

- Mitigate risks and ensure compliance with industry standards.

Datafold: The blood

Datafold is the lifeblood of your migration—flowing through every part of the process, delivering energy and ensuring health. Without it, the system falters. With it, the migration thrives, bridging gaps and fueling success.

Have you noticed a pattern in where migrations tend to fail? It’s typically during the three most tedious, error-prone stages: converting complex SQL/code, validating massive datasets across databases, or proving parity to stakeholders.

These steps, while critical, are where teams face the greatest risk of delays, errors, and frustration.

Migrations are challenging but they don’t need to be chaotic. Datafold redefines the process by streamlining every stage, from planning to stakeholder sign-off. By automating the hardest parts—like code conversion and cross-database validation—we help teams avoid manual pitfalls, minimize risk, and deliver successful migrations faster, with fewer headaches.

.avif)