Running dbt with Airflow

How to setup and run dbt with airflow on your local machine.

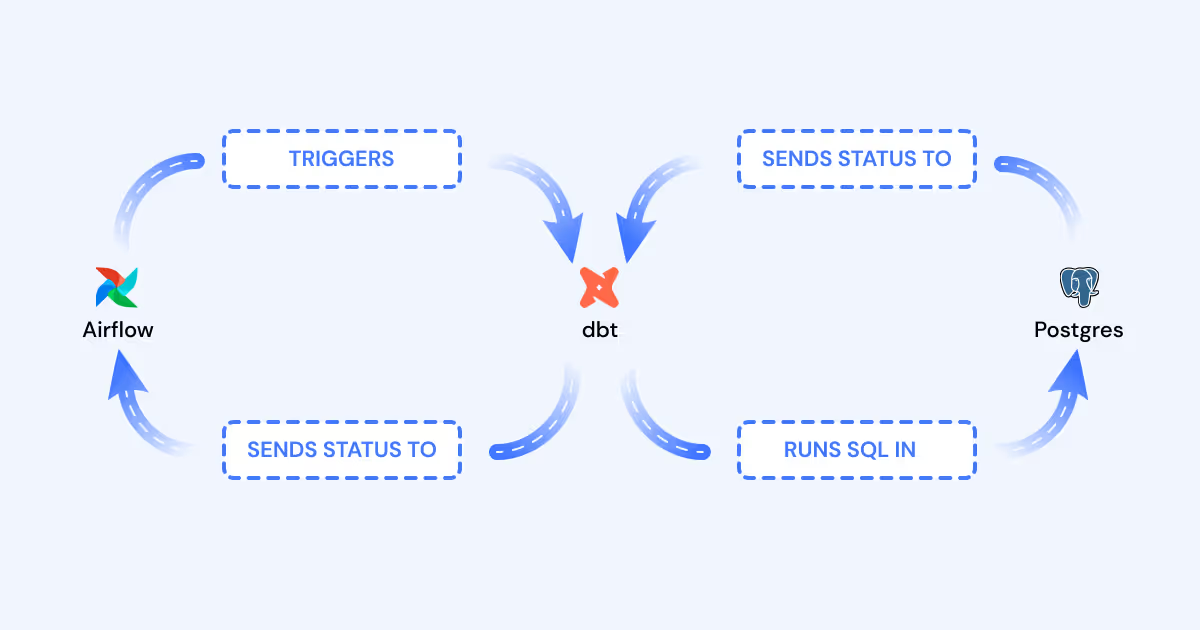

Apache Airflow is a platform for writing, scheduling, and monitoring workflows. It provides a central location to list, visualize, and control every task in your data ecosystem. It also has an intuitive task dependency model to ensure your tasks only run when their dependencies are met.

Airflow doesn’t just schedule SQL scripts. It has an expansive library of “Operators” that can make HTTP requests, connect to servers via SSH, and even run pure Python. Because of its flexibility, Airflow’s role is often not clearly understood, but its true value lies in its ability to orchestrate (trigger) every step of the data pipeline.

If you’re familiar with dbt, you know that dbt Cloud can schedule your models and tests, run them in the right order, and notify you when they fail, all without Airflow. But here’s what Airflow integration offers:

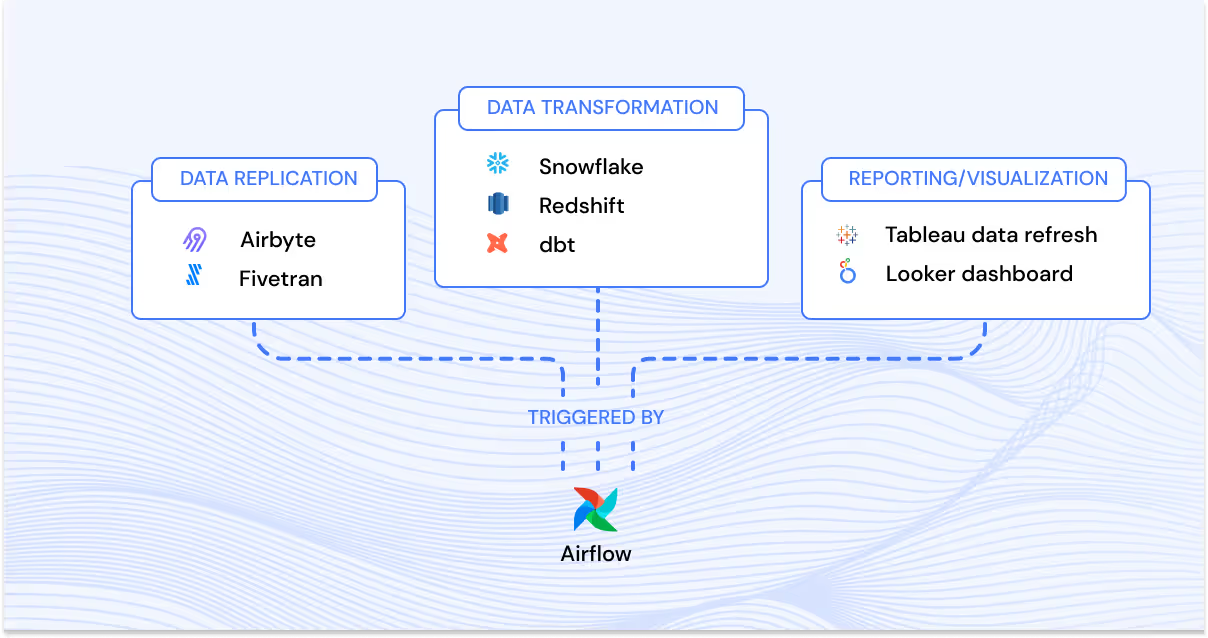

Global Task Dependency

Understand how software tasks, dbt tasks, and visualization layer tasks relate to each other.

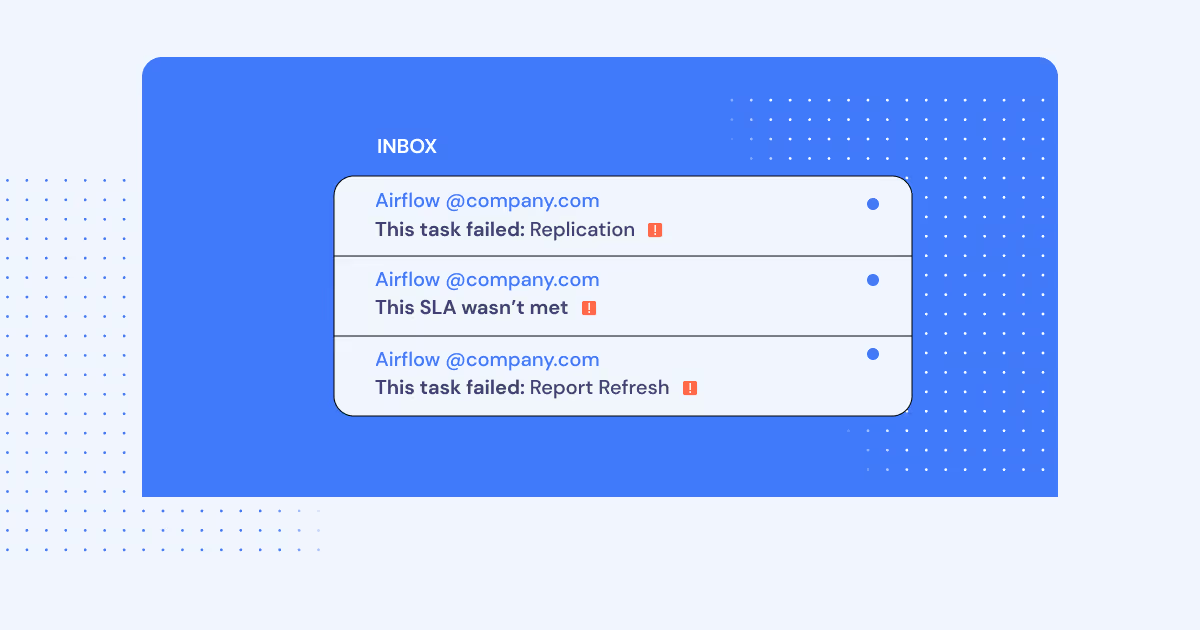

Centralized control and alerting for the entire Data Pipeline

Orchestrate tasks across systems and see where steps and tests fail.

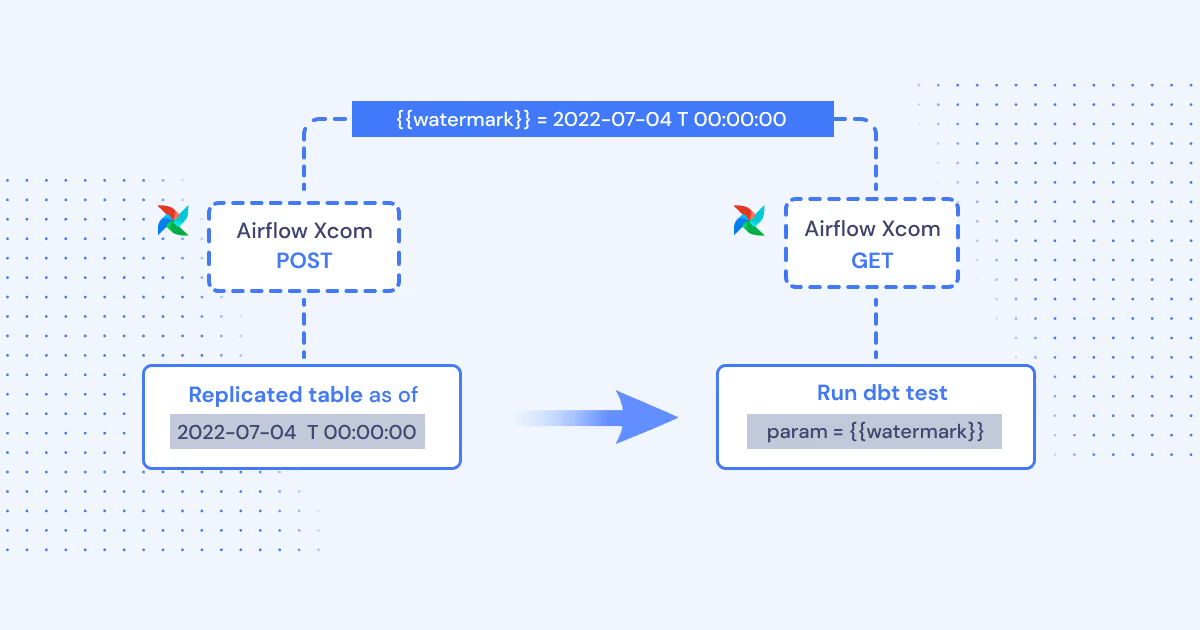

Task parameterization across systems

The results of one process outside of dbt (like the watermark of a data replication process) can be extracted and injected as a parameter into a dbt run (dbt supports injectable parameters)

To help you see exactly what it looks like to integrate dbt into Airflow, we put together some technical instructions on how to build a local demo.

Note: Don’t be intimidated by the length of this article. On the contrary, we do our best to explain what’s going on for readers that might not be familiar with software engineering.

How to setup dbt and Airflow overview

In this demo, we’re going to install a mix of command line tools and Python applications.

First, we’ll install and run Postgres with Docker. Then, we’ll install dbt, walk through the configuration, and run some of the test models that come with a fresh project. After that, we’ll install Airflow and write a DAG that can run all of your models and tests independently.

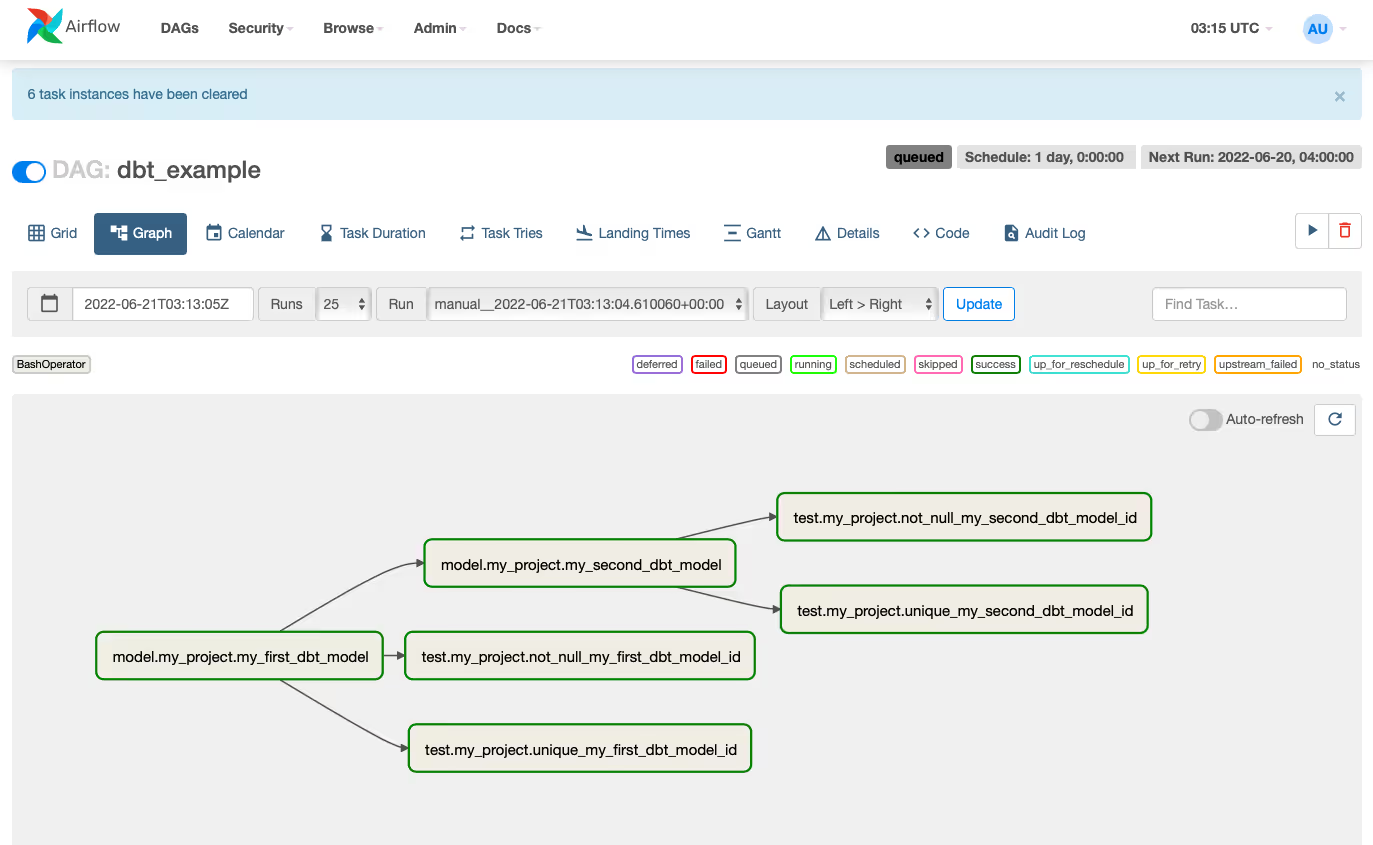

When everything is finally stitched together, we’ll trigger the DAG to run and verify that everything worked! And it should look something like this:

Project Folder

Note: This demo relies heavily on Shell commands. Code snippets that start with a dollar sign ($) are Shell commands that should be run in a terminal. However, when running those commands, omit the leading “$”. This is just a convention to help you know where they start.

Open a terminal.

In your home directory, make a folder called <span class="code">repos</span>

Developing applications from the <span class="code">repos</span> folder is a common practice for software engineers. It helps keep their code bases organized.

In <span class="code">~/repos</span>, make a folder called <span class="code">demo</span>. This is where we'll build and configure our demo.

Close this terminal.

Setup Postgres

We’ll use Postgres as our central analytics database. Typically, you would use a database better suited for big data like Snowflake or Redshift, but Postgres is free and easy to run.

Location

Open a new terminal. We will use this terminal exclusively for Postgres.

Note: Many terminal programs let you make multiple tabs and name them. If you can, name this terminal Postgres for your own convenience.

In <span class="code">~/repos/demo</span>, create a folder called <span class="code">postgres</span>:

This folder will contain installation and configuration for Postgres.

Installation

We could install and configure Postgres directly on your machine. But installing and configuring databases manually is complex. We can avoid that complexity by running Postgres in a “container”. Containers are a Linux feature that lets you run programs in an isolated environment, similar to a virtual machine (i.e. running Windows inside MacOS). Furthermore, they are easy to install, uninstall, and run, much like mobile apps.

To run containers on your machine, download and install Docker Desktop. If you’re running Linux, consult the official documentation for installing Docker Engine and Docker Compose. Once you finish the install, start Docker Desktop.

Create a file in <span class="code">~/repos/demo/postgres</span> named <span class="code">docker-compose.yaml</span>. In this file, we’ll write some configuration that makes Docker spin up an instance of Postgres.

Note: This is all one Shell command!

This configuration tells Docker to:

- Download a pre-built Postgres image

- Make Postgres available on port 5000

- Create a database named “dw”

- Create a user named “dw_user”

- Set the password to “dw_password”

Starting Postgres

Your demo folder should now look like this:

<span class="code">demo</span>

└── <span class="code">postgres</span>

└── <span class="code">docker-compose.yaml</span>

To start Postgres, run:

You should see output that looks like this:

To verify that the container is running, run:

Your should see an output that looks like this::

Later, you can turn off Postgres by running this command (but not yet!):

Leave this terminal open. We'll use it later.

Setup Python

For this demo, we will not be running dbt or Airflow in containers like we did with Postgres. The open-source version of dbt is a command-line tool. If we ran these apps in containers, the only way Airflow could run dbt-CLI commands would be to connect the Airflow container to the dbt container over SSH. SSH communication across containers is a complex topic and requires extra configuration in both Airflow and Docker. For this reason, dbt and Airflow will be installed directly on your machine.

Virtual Environments

If you’ve used an iOS or Android device, you might intuit that mobile apps are self-contained. For example, even if the Lyft and Uber apps both use a few of the same libraries, they don’t share those libraries from some shared location. The app contains everything it needs, even if this means every app on the device has a copy of the same library.

dbt and Airflow are Python Packages, and Python Packages do not work like mobile apps. Mobile apps are compiled into a single binary file, whereas Python packages are a collection of text files. You need extra software to manage the shell environment for package dependencies. For this demo, we will use Pyenv to install Python, and Pyenv Virtualenv (an extension of Pyenv) for managing Python Virtual Environments.

Note: Sometimes you can get away with not using virtual environments. However, Airflow v2.3.2 and dbt v1.1.0 have conflicting libraries, so you have to follow best practices for this demo.

Installation

Open a new terminal.

The preferred installation method for pyenv and pyenv-virtualenv is brew. To install brew, run:

Note: This may ask for sudo access. Also, because brew installs a lot of software from source, the installation may take a while.

Now that we have brew, we can install Pyenv and Pyenv Virtualenv:

After the installation, we’ll need to add a couple of things to our Shell profile.

If you’re in MacOS, run the following:

If you’re in Linux, run the following:

Note: In MacOS, the default Shell is Zsh, and its profile is located at ~/.zshrc. In Linux, the default Shell is Bash, and its profile file is located at ~/.bashrc. Every time you start a terminal, the shell runs those files to initialize the shell environment. The two commands you added initialize pyenv and pyenv-virtualenv when you start terminal.

Now, close your terminal and open a new one. Make sure pyenv works by running:

Finally, let’s install Python. For this tutorial, we will be using version 3.10.4. To install, run:

Once Python has successfully installed, close this terminal.

Setup dbt

Open a new terminal. We'll use this one exclusively for dbt.

Location

In <span class="code">~/repos/demo</span>, create a folder named <span class="code">dbt</span>:

This folder will contain installation and configuration files for dbt.

Virtual Environment

We need to install dbt in a virtual environment. This will prevent library conflicts with other Python packages on your machine.

Create a new virtual environment named <span class="code">demo_dbt</span>:

You can configure Pyenv Virtualenv to automatically activate and deactivate <span class="code">demo_dbt</span> when entering or leaving <span class="code">~/repos/demo/dbt</span>, respectively. To do this, run:

To confirm that <span class="code">demo_dbt</span> is the active virtual environment, run:

If the output says <span class="code">demo_dbt</span>, your virtual environment is active! Now, anything you install via <span class="code">pip</span> (Python’s package manager) will be installed in the virtual environment, and it will only be accessible when the virtual environment is active.

Installation

To install dbt, run:

Note: You may get an error that psycopg can’t be built because it’s missing libpq-dev.

- On Mac, you can install it via brew: <span class="code">brew install postgresql</span>

- On Ubuntu, you can install it via apt: <span class="code">sudo apt install libpq-dev</span>

Rerun <span class="code">pip install dbt-postgres</span>. The installation should complete successfully now.

Configuration

dbt needs a project to work with. Let’s initialize the skeleton of a project.

To initialize your project, run:

The init script will prompt you for a couple things:

- For project name, use my_project.

- For database, read the prompt. Make whatever entry is needed for postgres.

If it asks you for connection info, use the credentials from Postgres:

- Database: dw

- Username: dw_user

- Password: dw_password

You now have a boilerplate project in <span class="code">~/repos/demo/dbt/my_project</span>.

At this point, your demo folder should look like this:

if <span class="code">dbt init</span> didn’t ask for credentials to Postgres, you’ll need to set those credentials manually. dbt stores this information in <span class="code">~/.dbt/profiles.yml</span>. Let's populate it:

Note: This is all one Shell command!

This will configure dbt to connect to Postgres, where it can run SQL and build our DW tables!

To test whether (1) the project is structured properly, and (2) the database connections are working, run:

Your output should look like this:

Sample Model

Take a look in <span class="code">~/repos/demo/dbt/my_project/models</span>. You should see an <span class="code">example</span> folder with a couple sample models:

These sample models are included every time you initialize a project. They’re meant to be something simple you can run to try out dbt. Take a look at the <span class="code">.sql</span> files.

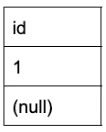

- <span class="code">my_first_dbt_model.sql</span> produces a table wth one column and two rows:

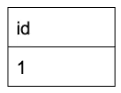

- <span class="code">my_second_dbt_model.sql</span> produces a table wth one column and one rows:

dbt run

To run these example models, run:

Your output should look like this:

Let’s verify that these models actually made tables in our database. Switch over to the postgres terminal so we can run some SQL commands.

First, let’s check if <span class="code">my_first_dbt_model.sql</span> produced a table:

Your output should look like this:

Note: psql displays NULL values as blank by default.

Next, let’s check if <span class="code">my_second_dbt_model.sql</span> produced a view:

Your output should look like this:

If your output looks correct, that means everything is working.

Go ahead and switch back over to the dbt terminal.

dbt test

A great feature in dbt is built-in Unit Testing. You can test things such as:

- Are all values for a column unique? (such as for a primary key)

- Are all values for a column not null?

The sample tests live in

To run these tests, run:

Your output should look like this:

The example purposely includes some passing tests and some that fail. This helps you get the full testing experience in a demo model.

dbt docs

dbt also has a great framework for automating model documentation. This is the part we can use to plug dbt into Airflow.

To generate model docs, run:

This last command will generate a file called <span class="code">manifest.json</span>, which we will use in the next section.

Setup Airflow

Open a new terminal. We will use this one exclusively for Airflow.

Location

In <span class="code">~/repos/demo</span>, create a new folder called <span class="code">airflow</span> by running:

Virtual Environment

Just like with dbt, we need to install Airflow in a virtual environment.

Create a new virtual environment named <span class="code">demo_airflow</span>:

Let’s make Pyenv Virtualenv automatically activate and deactivate <span class="code">demo_airflow</span> when entering or leaving ~/repos/demo/airflow</span>.

Confirm that <span class="code">demo_airflow</span> is the active virtual environment:

Installation

Before we install Airflow, we need to set a Shell environment variable called <span class="code">AIRFLOW_HOME</span> that tells the Airflow installer where to set its home folder:

Let's continue the installation. To install Airflow, run:

Note: Notice the <span class="code">--constraint</span> flag. When installing packages via pip, you can define a list of library version constraints. Airflow uses quite a few libraries, and its list of constraints is very large. For convenience, they make it available via a link.

For more information, consult the official guide to running Airflow locally.

Starting Airflow

Once installed, you can start the Airflow web server, where you can control your DAGs from a clean web UI.

To start the Airflow web server, run:

Note: To stop the Airflow webserver, go to the Airflow terminal and press “ctrl” + “c”. But don’t do this yet!

Now that Airflow is running, you can access the web server. Go to http://localhost:8080 and login. The username and password are printed by logging in the Airflow terminal. It will look like this:

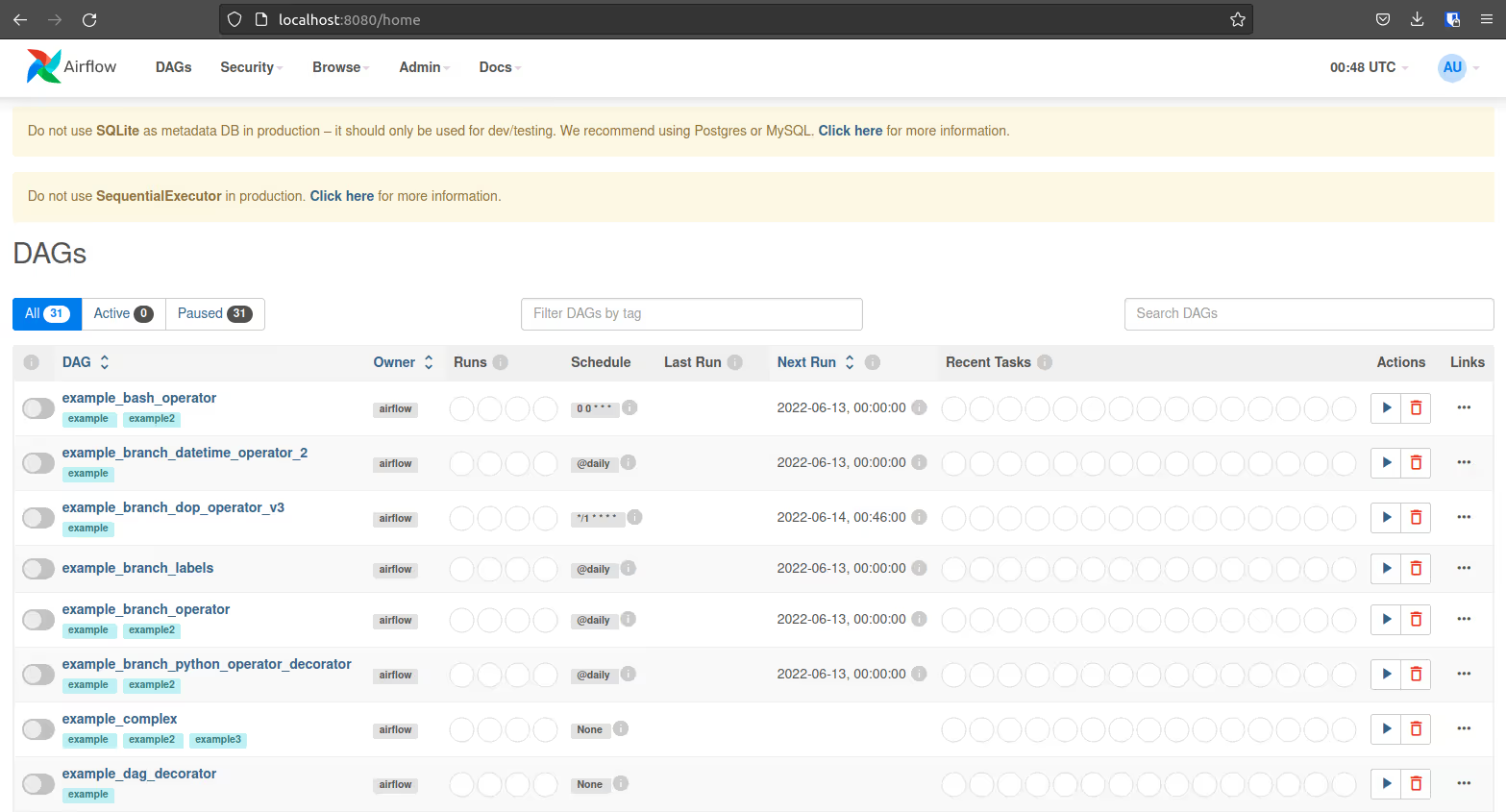

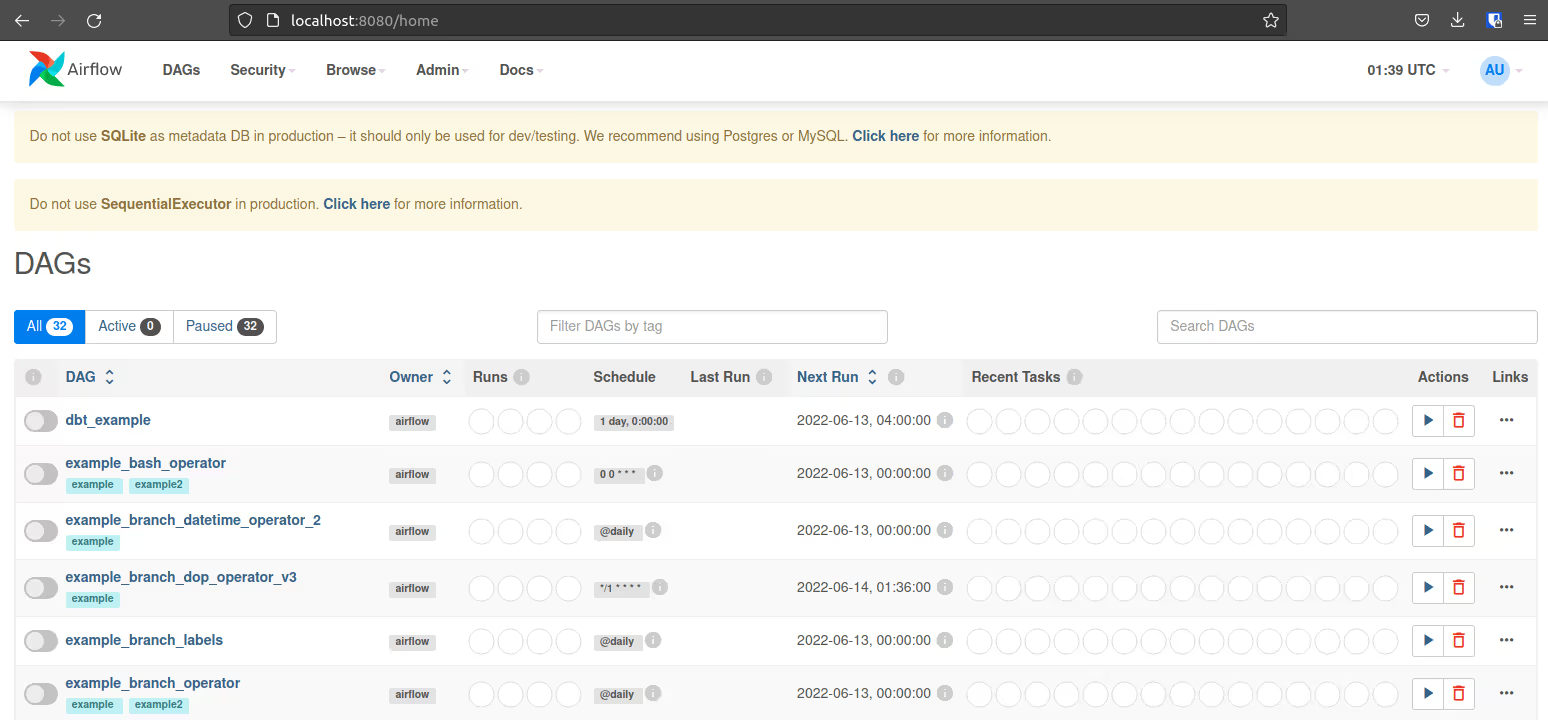

There are a few example DAGs you can explore that will help familiarize you with the UI. In the next section, we will build a DAG that runs our dbt model one table at a time.

Building the DAG

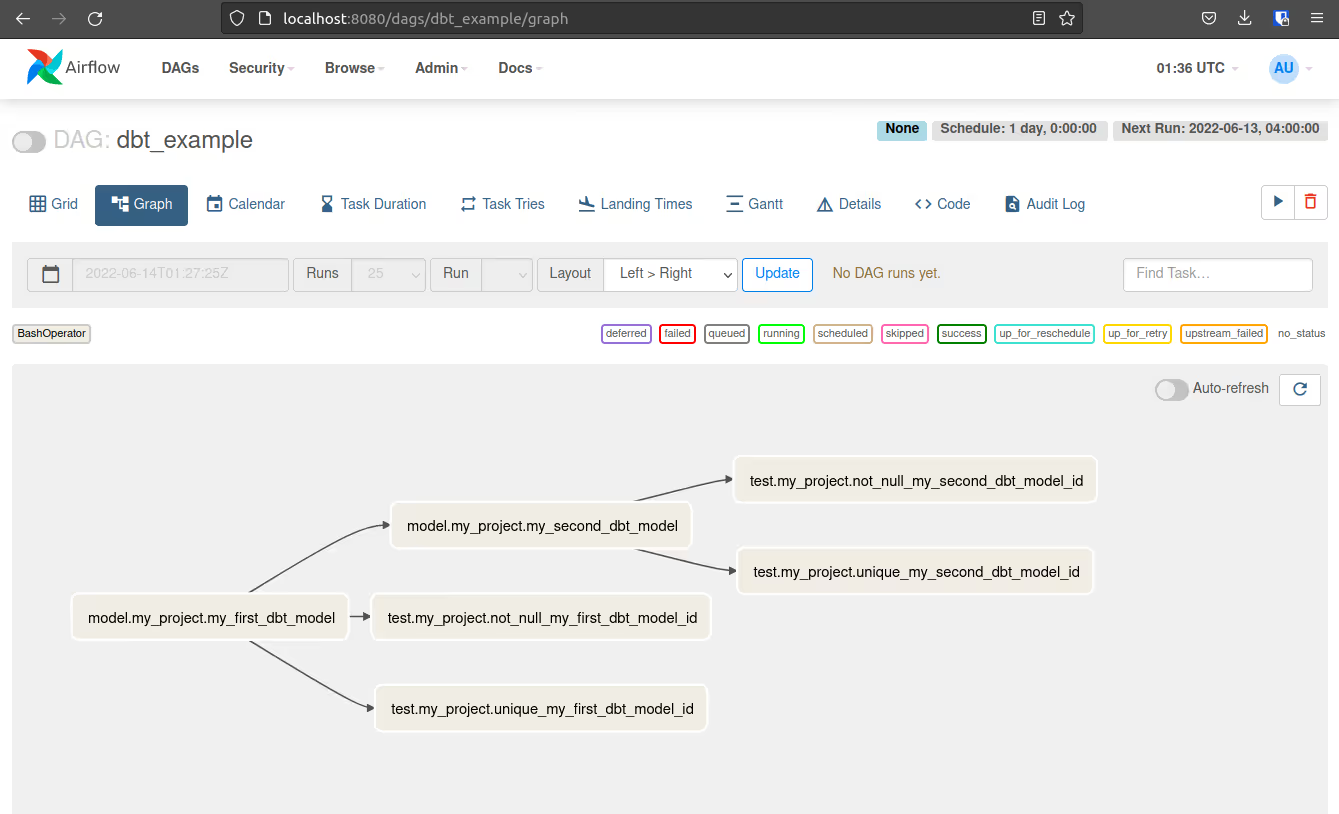

When you ran <span class="code">dbt docs generate</span>, dbt created <span class="code">manifest.json</span>, among other things. This file is very useful, as it has the name of every model, every test, and the dependency relationships between them! Let’s build a DAG that leverages this file to automate generating all the tasks.

Your Airflow terminal is busy running the web server. Open a new, throwaway terminal.

Remember that, during installation, we set AIRFLOW_HOME = <span class="code">~/airflow</span>. Airflow will look in <span class="code">~/airflow/dags</span> for new DAG files. Create the <span class="code">dags</span> folder if it doesn’t already exist:

Now let's make our DAG file:

Note: This is all one Shell command!

This program:

- Loads the <span class="code">manifest.json</span> file from dbt into a Python Dictionary.

- Creates an Airflow DAG named <span class="code">dbt_example</span>.

- Creates a task for each “node” (where node is either a model or a test).

- Defines the dependency relationship between nodes.

Save the file and wait about 30 seconds. Airflow will find the DAG and load it. When it’s ready, you should see it at the top of your DAG list:

Click on your DAG and select the graph view. Your DAG should look like this!

Editing a dbt Model and running Airflow

We’ve already run our dbt models once and verified that they populated data in Postgres. So that we can verify that our DAG runs properly, let’s edit the dbt models to produce different data.

First, update <span class="code">my_first_dbt_model.sql</span> to have more rows:

Next, update the filter condition in <span class="code">my_second_dbt_model.sql</span>:

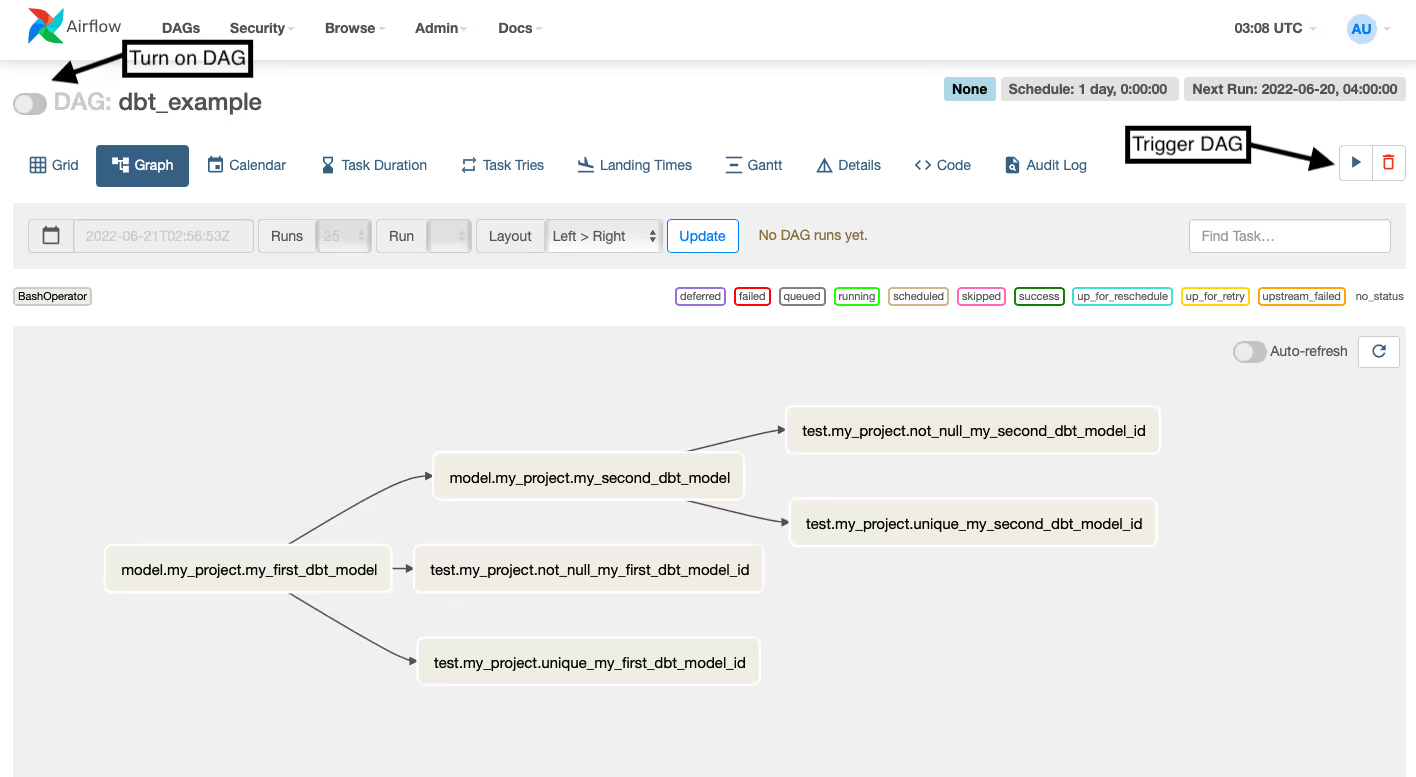

Now, turn on your DAG, enable Auto-refresh, and trigger a run.

All your tasks should complete successfully and turn green!

Let’s verify that our tables in Postgres changed.

First, check the output for <span class="code">my_first_dbt_model.sql</span>:

Your output should look like this:

Remember, psql displays NULL values as blank by default.

Next, check the output for <span class="code">my_second_dbt_model.sql</span>:

Your output should look like this:

Congratulations! You now have a working demo running dbt with Airflow.

.avif)