How to Create dbt Freshness Alerts

Learn how to turn dbt freshness tests into production monitoring alerts using Datafold.

.avif)

dbt freshness tests are a fantastic way to monitor source data, and to ensure that new records are arriving at an expected cadence. However, these tests require you to define this expected cadence, which can be difficult to manage at scale.

So how can we turn dbt tests into alerts that are constantly monitoring production?

For example, one might configure an event source to expect new records every day, like so:

Great! We’d definitely expect page events every day, probably expect to see new users sign up every day, and maybe expect our new feature configuration event to occur every couple days.

After digging around the events data, we realize that the daily period needs a little tuning, and we update the freshness thresholds to match reality. Users sign up every day, page views events should arrive every 12 hours, and our new feature configuration occurs once a week.

There’s nothing inherently wrong with this, but it’s incredibly manual, and could change over time. What if adoption of our new feature increases dramatically? What if it’s a flop and usage declines even further? Either scenario would require us to manually update the freshness period, and could result in false alarms or silent failures.

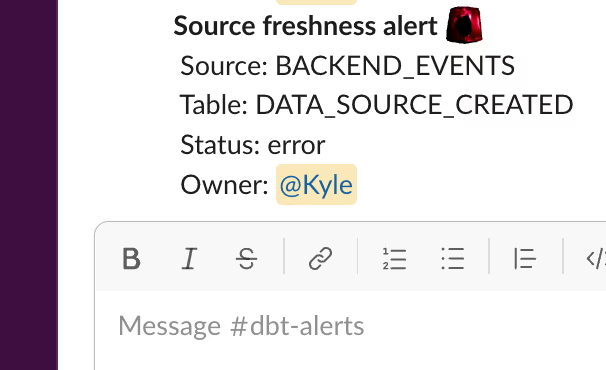

Just the other day, I woke up to a Slack alert that a source table was stale. 😱

My work was interrupted by the alert as I frantically scrambled to investigate. I ran some ad-hoc queries, only to discover that the freshness period on this event simply needed to be updated. To close the loop, I opened a pull request to update the test, which required attention from a colleague. All of which added no real value to the business, and only served to distract from higher priority tasks. I’ve personally had this scenario happen to me at least a dozen times.

A potential downside of rigorous testing is alert fatigue. An overwhelming number of alerts, most of which are false alarms, trains the people responsible for responding to become desensitized, and ultimately ignore alerts.

What if . . . you could dynamically configure expected thresholds, and never have to deal with noisy alerts or manually determine thresholds again? Datafold alerts to the rescue!

Datafold alerts utilize an ML-based forecasting model that adapts to seasonality and trends in your data to create dynamic thresholds. You can adjust the sensitivity or add custom anomaly detection to optimize your signal-to-noise ratio.

Ok! So Datafold has a cool chart thingy that can detect anomalies - how can we use this to detect stale data?

High level steps:

- Create a dbt snapshot of the information schema to record table metadata over time

- Write a SQL query to summarize table changes

- Put query results into a Datafold alert

Create a dbt Snapshot of the Information Schema

Add the information schema as a source. In this example, I’m targeting our `analytics` database.

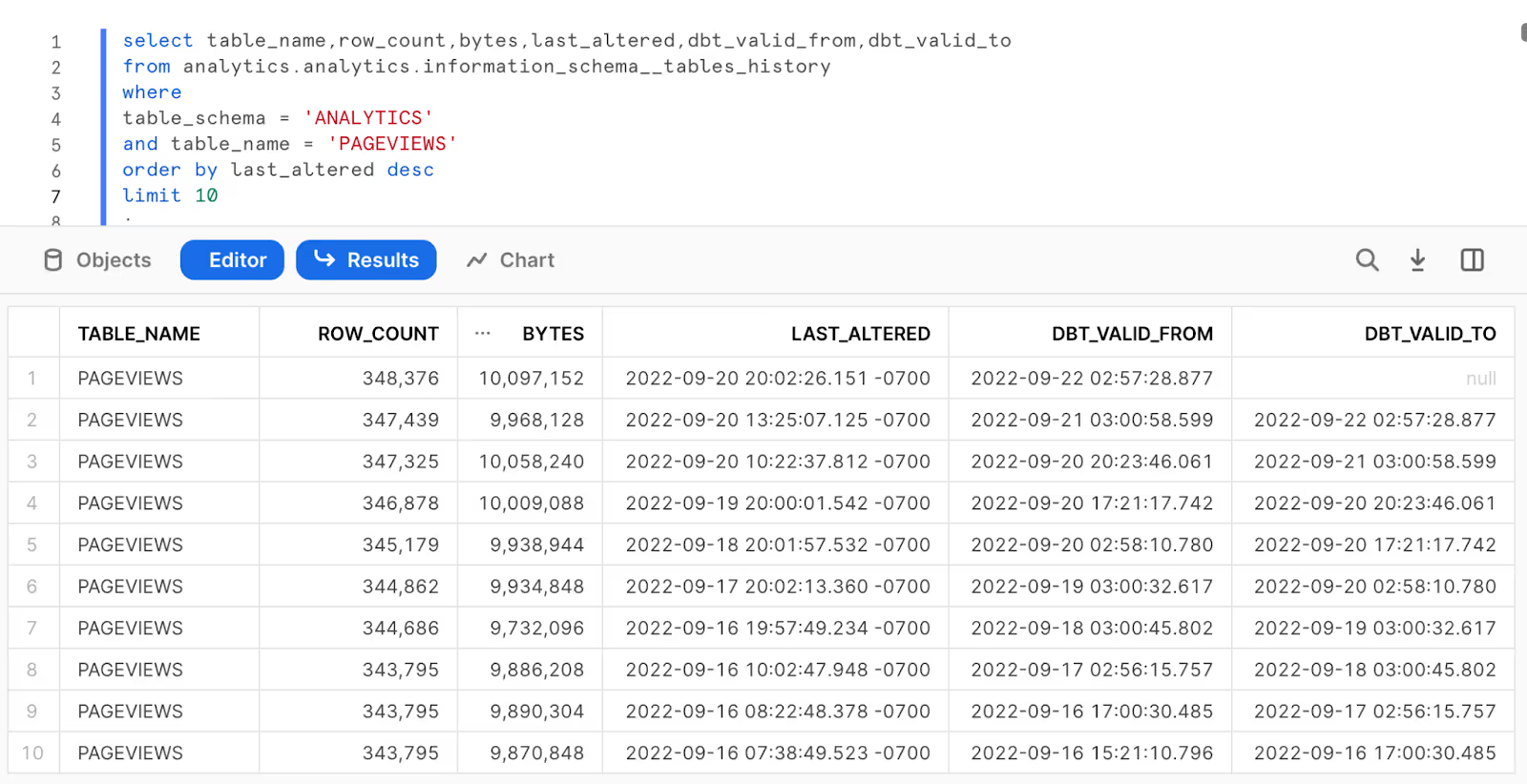

Create a snapshot of the information schema tables source. This will allow us to see table metadata over time.

Each update to the table will be captured in our snapshot

Write a SQL Query to Summarize Table Changes

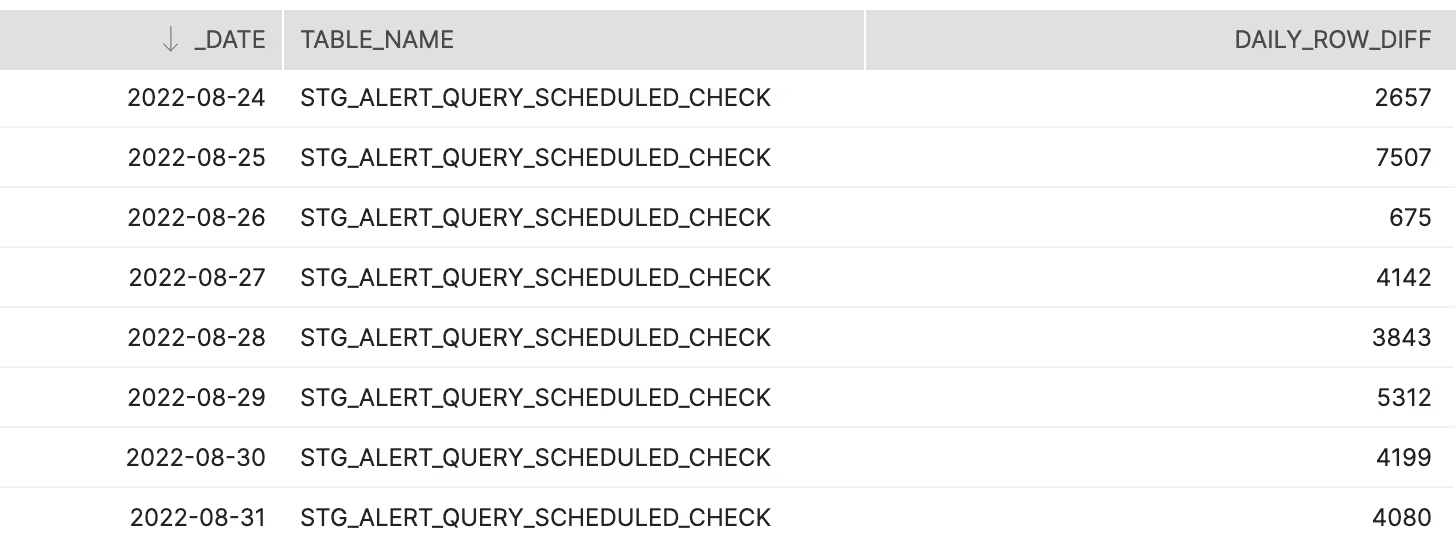

I was interested in changing row counts per table, so this query focuses on changes in table record counts from day to day.

Example output with date, table name, and the daily change in record counts:

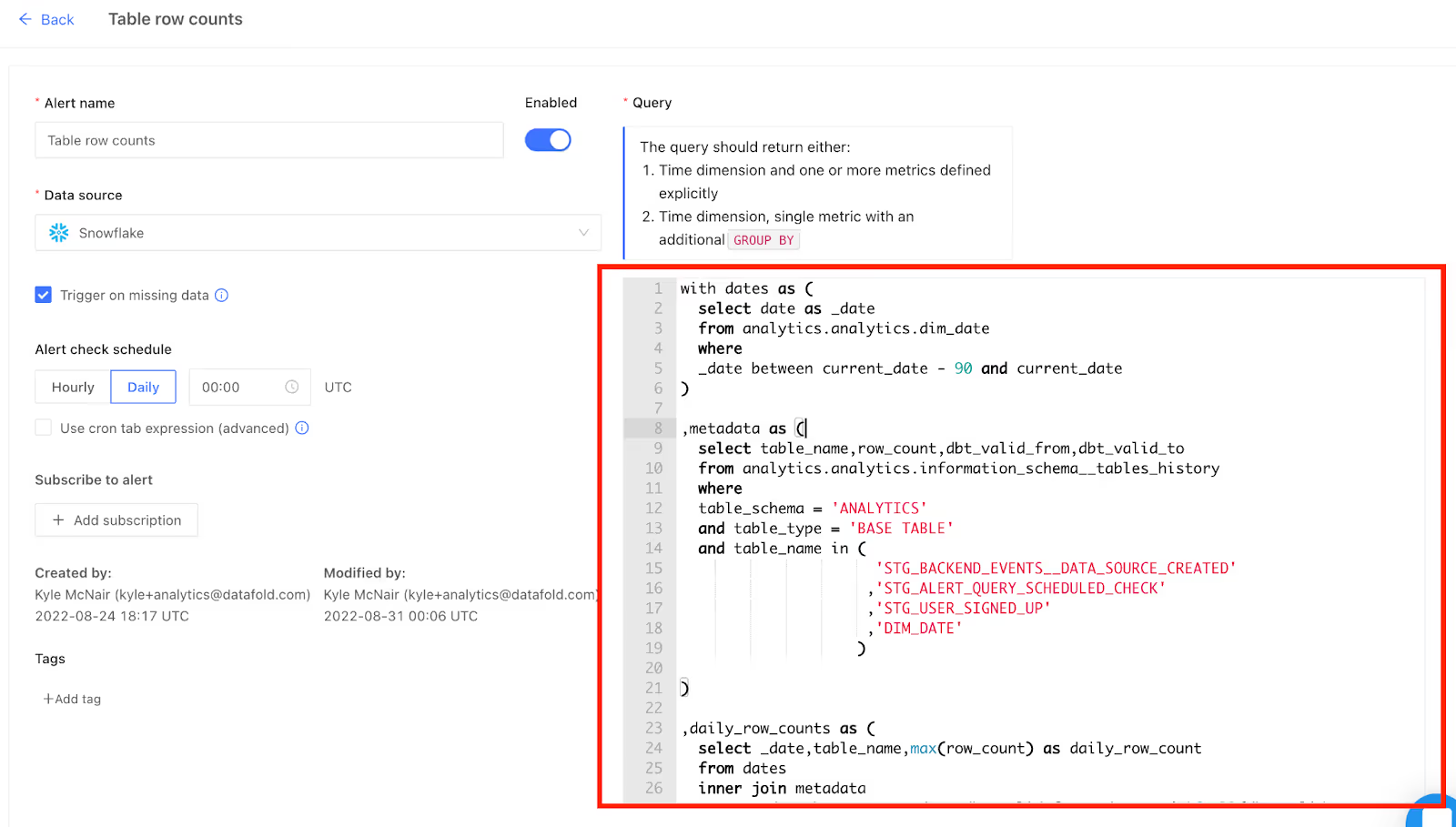

Put Query Results into a Datafold Alert

Finally, creating a Datafold alert is as simple as dropping the above query into the Datafold alert UI. To properly scale, it might make sense to park this query in a dbt model.

Voila!

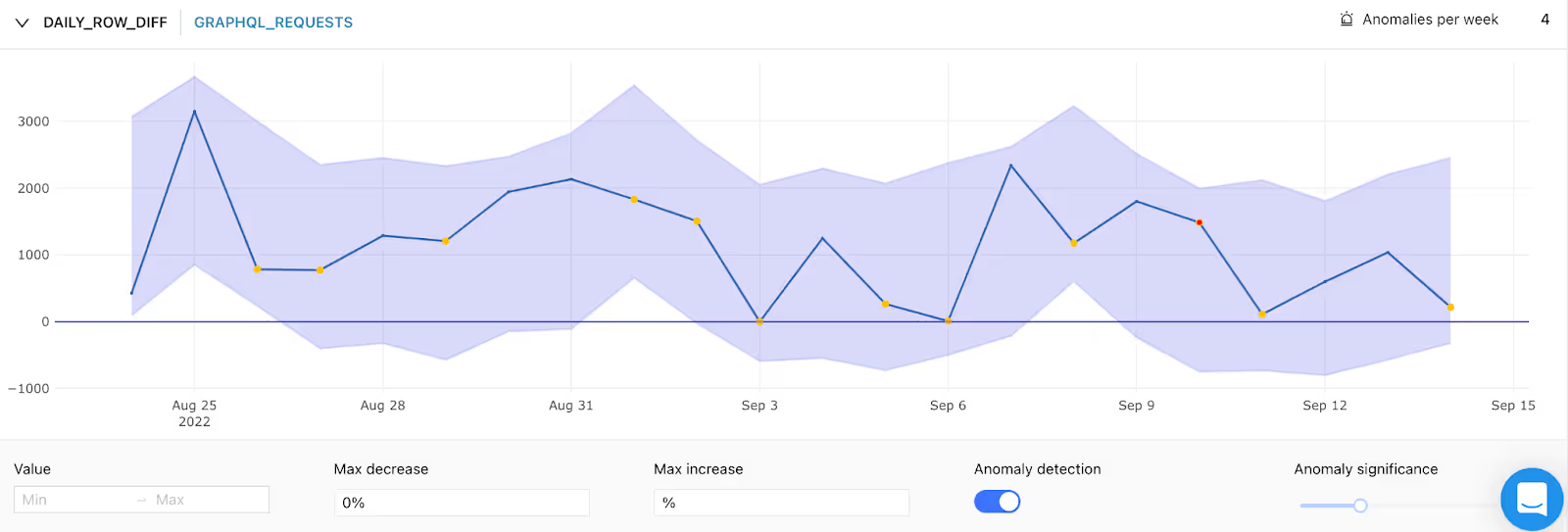

Table with thousands of new daily records:

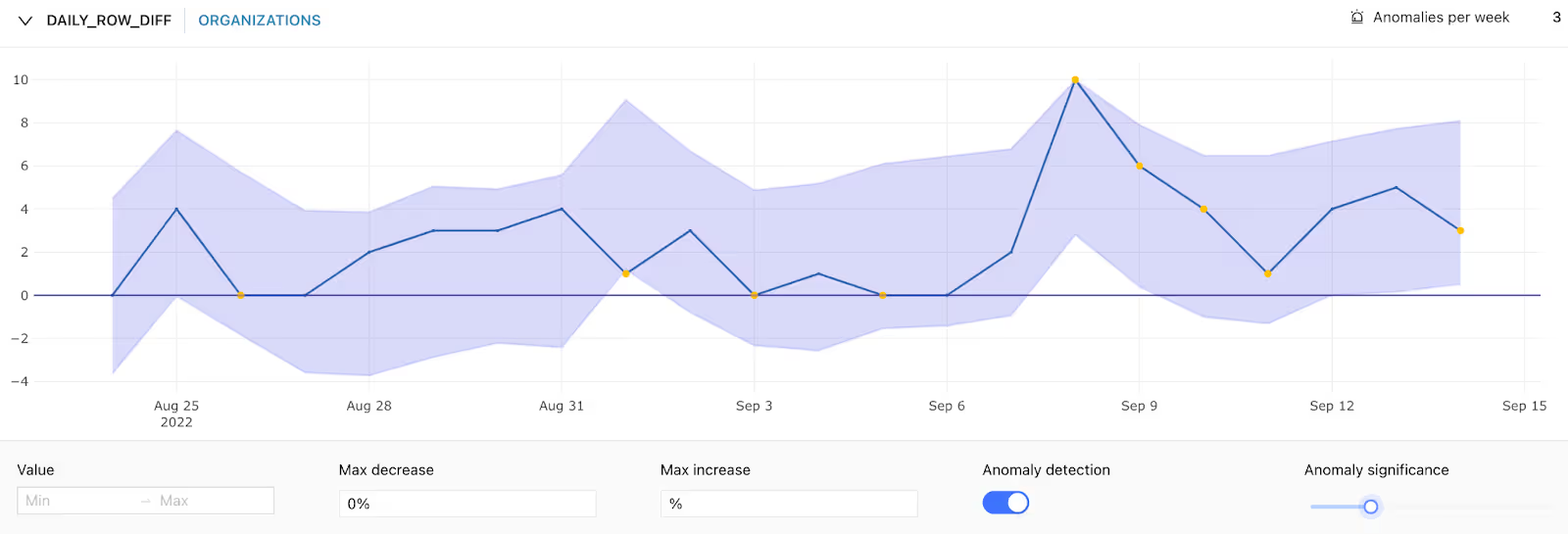

Table with few new daily records:

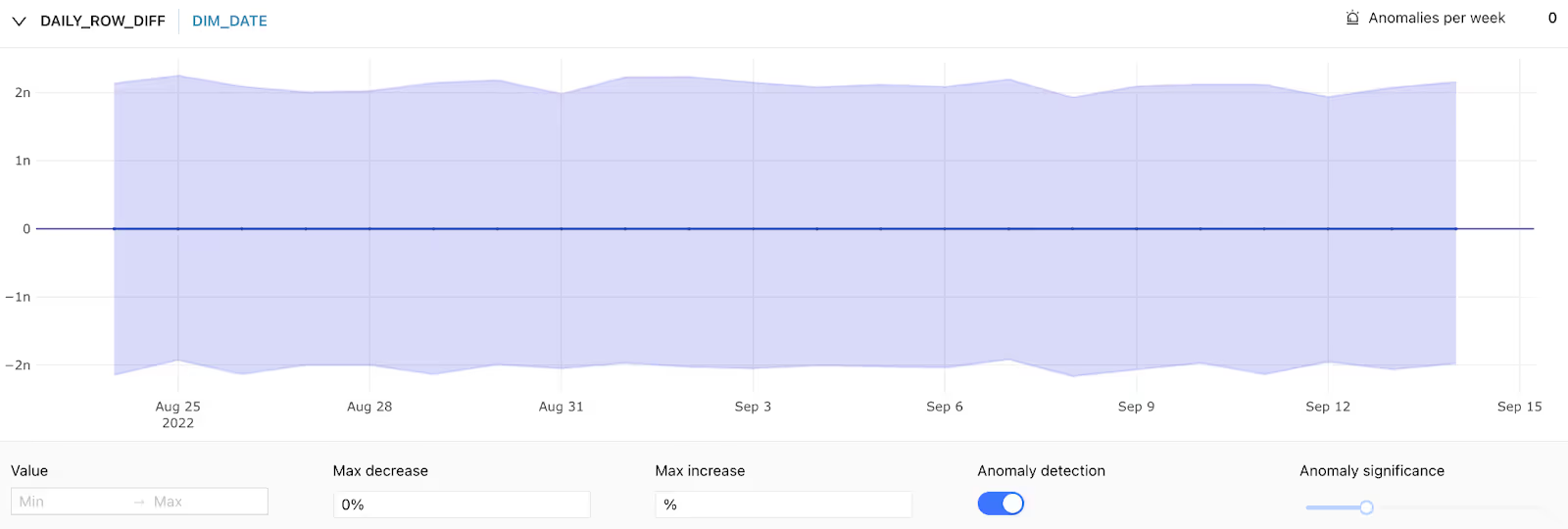

Table that should never change:

You now have alerts with dynamically determined thresholds, and can subscribe to Slack alerts or emails with just a few clicks.

Notes:

- This method only applies to materialized tables. Views have no record counts, and thus we can’t detect changes in row counts for views.

- This guide is Snowflake specific, but could be easily adapted to the information schema of another database type.

.avif)