Data Migration Risks and How To Avoid Them

Data migration mistakes lead to lost records, broken reports, and compliance risks. Learn key pitfalls and how to prevent them with planning, automation, and validation.

Your team has been preparing for months. The database migration is scheduled, stakeholders are aligned, and everything seems on track. Then, the first migration reports come in.

- Some records don’t match.

- Key fields are missing.

- Dashboards that worked perfectly before are now throwing errors.

The IT lead scrambles to diagnose the issue while leadership demands answers.

- "Where’s the customer data?"

- "Why are transactions missing?"

What should have been a routine migration has turned into a crisis, leaving teams stuck troubleshooting instead of moving forward.

This scenario isn’t hypothetical. This is exactly how it played out for TSB Bank in 2018 in one of the most significant IT disasters in UK banking history.

The real reason data migrations fail

Data migrations sound simple—move information from one system to another while keeping schemas, formats, and relationships intact. Relatively straightforward, right? Well, anyone who’s been through one knows unexpected failures happen at every stage.

The real problem is that most failures don’t happen all at once. They build up over time as your team manually translates code and attempts to validate data movement across databases. A single mismapped field can break foreign key relationships, corrupt dependencies, and trigger cascading data integrity issues. Poorly handled type conversions may distort financial figures, while inefficient migration pipelines introduce duplicate records and incomplete datasets.

Without rigorous validation, a migration can introduce inconsistencies that disrupt compliance, degrade performance, and erode trust in business intelligence. A successful migration not only transfers data but also maintains its structure, integrity, and usability in the new system.

In the TSB Bank case, the migration introduced millions of data inconsistencies, including mismapped customer records, incorrect balances, and unauthorized transactions. These compounded daily, spreading like a contagion through reports, customer service queues, and compliance audits.

Common migration risks and how to prevent them

Even a well-planned migration can run into issues if you don’t account for key risks. The most common pitfalls are small and easy to overlook, and they can be mitigated with the right checks.

A smooth migration keeps your data structure intact, your queries running, and your systems performing like they should. But small issues—like unhandled NULL values, chopped-off strings, or mismatched encodings—can quietly mess things up, causing unexpected problems later.

To avoid these headaches, make end-to-end validation a priority. Check for schema drift, run automated integrity checks, and audit your data after migration. Stay ahead of issues with proactive monitoring so you can catch problems before they cause real damage. That way, your migrated data stays accurate, reliable, and ready to use.
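As a rough illustration of the small issues above, the sketch below compares one source value with its migrated counterpart and labels the likely cause. The function name `find_value_issues` and the issue labels are hypothetical, and the encoding check covers only one common case (UTF-8 bytes read back as Latin-1), so treat it as a starting point rather than a complete validator.

```python
from typing import Optional


def find_value_issues(source: Optional[str], target: Optional[str]) -> list[str]:
    """Compare one source value with its migrated counterpart and label any issue."""
    if source is None and target is None:
        return []
    if source is None or target is None:
        # For example, NULL silently became '' or a value was dropped to NULL.
        return ["null_mismatch"]
    if source == target:
        return []
    if source.startswith(target):
        # The target is a strict prefix of the source: probably cut to fit a narrower column.
        return ["truncated"]
    try:
        # One common kind of mojibake: UTF-8 bytes that were decoded as Latin-1.
        if target.encode("latin-1").decode("utf-8") == source:
            return ["encoding_mismatch"]
    except (UnicodeEncodeError, UnicodeDecodeError):
        pass
    return ["value_mismatch"]


garbled = "café".encode("utf-8").decode("latin-1")
print(find_value_issues(None, ""))             # NULL vs empty string
print(find_value_issues("Jonathan", "Jona"))   # truncated string
print(find_value_issues("café", garbled))      # mismatched encoding
```

Running a check like this over a sample of migrated columns is cheap, and it turns "something looks off" into a named category that is easier to trace back to a mapping or type decision.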

The best ways to minimize data migration risks

Unfortunately, crossing your fingers won’t cut it. Without the right safeguards, a data migration can leave you with a healthy pile of (avoidable) headaches. Start by focusing on validation, monitoring, and performance optimization to reduce risks and keep your migration on track.

Manual processes make these risks even harder to manage. A migration that should take weeks can drag on for months, forcing teams to waste time fixing preventable errors instead of moving forward. Automating validation, schema mapping, and performance monitoring reduces downtime, minimizes errors, and keeps projects on schedule—freeing up engineering resources for higher-impact work.

Why planning ahead is key to a smooth data migration

A migration that starts without a plan will only end in frustration. Legacy and modern systems rarely align perfectly, and even small differences can cause major issues if they aren’t caught early. The more groundwork you do upfront, the fewer surprises you’ll deal with later.

- Run a pre-migration audit: Don’t assume old and new systems will play nice. Identifying structural differences ahead of time helps prevent broken queries and missing constraints.

- Map schema and metadata changes: Formatting mismatches, missing fields, and unexpected type conversions can all throw off reporting. Sorting these out before migration keeps data intact.

- Perform a dry-run migration: Testing in a non-production environment lets you catch errors before they hit live systems—when fixes are easier and far less disruptive.
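A pre-migration audit can start as something very small. The sketch below uses two in-memory SQLite databases as stand-ins for the legacy and target systems and compares column names, declared types, and NOT NULL flags. The `customers` table, its columns, and the helper names `describe` and `audit_schema` are made up for illustration; a real audit would read each platform’s own catalog or information schema instead.

```python
import sqlite3


def describe(conn, table):
    """Return {column: (declared_type, not_null)} using SQLite's table_info pragma."""
    rows = conn.execute(f"PRAGMA table_info({table})").fetchall()
    return {
        name: (col_type.upper(), bool(notnull))
        for _cid, name, col_type, notnull, _default, _pk in rows
    }


def audit_schema(source, target):
    """List columns that are missing, changed, or unexpected in the target."""
    findings = []
    for column, spec in source.items():
        if column not in target:
            findings.append(f"missing in target: {column}")
        elif target[column] != spec:
            findings.append(f"changed: {column} {spec} -> {target[column]}")
    for column in sorted(target.keys() - source.keys()):
        findings.append(f"unexpected in target: {column}")
    return findings


# Hypothetical legacy and target definitions of the same table.
legacy = sqlite3.connect(":memory:")
legacy.execute(
    "CREATE TABLE customers (id INTEGER NOT NULL, email VARCHAR(255) NOT NULL, balance DECIMAL(12,2))"
)
modern = sqlite3.connect(":memory:")
modern.execute(
    "CREATE TABLE customers (id INTEGER NOT NULL, email VARCHAR(100), created_at TEXT)"
)

findings = audit_schema(describe(legacy, "customers"), describe(modern, "customers"))
for finding in findings:
    print(finding)
```

Here the audit reports a narrower, now-nullable `email` column, a missing `balance` column, and an unexpected `created_at` column, all before a single row has moved.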

Manual data migrations aren’t just tedious—they take time away from strategic projects. Teams get stuck debugging mismatches, rewriting queries, and manually verifying datasets instead of working on near-term innovation. Without automation, migrations drag on, delaying AI adoption, data-driven initiatives, and other business-critical priorities.

Hypothetical example: Imagine a global retail chain planning to migrate its inventory management system to a modern cloud-based platform. The migration team assumes the new system can handle product SKUs, warehouse locations, and stock levels in the same format as the legacy database.

What they don’t account for is a difference in how each system handles product variants. The old system stores different sizes and colors as separate SKUs, while the new system groups them under a single parent SKU with attributes.

During the migration, millions of product records are misclassified, and warehouse stock levels don’t match what customers see online. The issue isn’t caught during testing, and when Black Friday arrives, chaos ensues:

- Online shoppers see items in stock that aren’t available

- Warehouse workers can’t locate products because SKU mappings have changed

- Thousands of orders have to be manually fixed, causing shipping delays

- Refund requests spike, and social media complaints explode

Had the company performed a pre-migration audit and a dry-run migration, they would have caught the mismatch in SKU handling early—before it cost them millions in lost sales and customer trust.
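To make the dry-run idea concrete, here is a minimal sketch of the kind of check that could surface the SKU mismatch. Everything in it is invented for the example: the SKU format (`PARENT-COLOR-SIZE`), the stock figures, and the helper names. It assumes the legacy SKU can be split into a parent and its attributes, which a real catalog may not allow so neatly.

```python
# Hypothetical legacy rows: one SKU per size/color variant, mapped to units in stock.
legacy_stock = {"TSHIRT-RED-S": 40, "TSHIRT-RED-M": 25, "TSHIRT-BLU-S": 10}

# Hypothetical target rows after a dry run: (parent SKU, color, size) mapped to units.
target_stock = {("TSHIRT", "RED", "S"): 40, ("TSHIRT", "RED", "M"): 20}


def to_target_key(legacy_sku):
    """Translate a legacy variant SKU into the target's (parent, color, size) key."""
    parent, color, size = legacy_sku.split("-")
    return (parent, color, size)


def dry_run_report(legacy, target):
    """Return (legacy_sku, problem) pairs for variants that did not migrate cleanly."""
    problems = []
    for sku, quantity in legacy.items():
        key = to_target_key(sku)
        if key not in target:
            problems.append((sku, "no matching variant in target"))
        elif target[key] != quantity:
            problems.append((sku, f"stock {quantity} in legacy, {target[key]} in target"))
    return problems


problems = dry_run_report(legacy_stock, target_stock)
for sku, problem in problems:
    print(sku, "->", problem)
```

Run against a non-production copy, a report like this shows which variants were lost or miscounted while the fix is still a mapping change rather than a customer-facing incident.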

How automation prevents migration failures before they happen

Catching migration errors before data moves is the easiest way to avoid costly fixes down the line. Relying on manual SQL checks is like looking for a single typo in a thousand-page book—it’s slow, exhausting, and still won’t catch everything. Hidden mismatches, missing records, and schema inconsistencies often slip through, only to cause major disruptions later.

Automated cross-database data diffing solves this problem. Instead of hoping everything lines up, automated validation compares every row, column, and value between the source and target databases before cutover. It flags discrepancies in data types, primary keys, foreign key constraints, and precision mismatches. If a column in the destination system is unexpectedly truncated due to schema drift or if nullability rules aren’t honored, automation catches it before data loss or integrity issues reach production.
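The core idea of a value-level diff can be sketched in a few lines. The example below is not how Datafold or any particular tool implements it; it is a simplified illustration that loads two small tables into memory and compares them by primary key. The `orders` table and its rows are invented, and two in-memory SQLite databases stand in for the source and target systems. Tables too large to hold in memory would need a different strategy, such as comparing checksums chunk by chunk.

```python
import sqlite3


def load(conn, table, key):
    """Read a whole table into {primary_key_value: {column: value}}."""
    conn.row_factory = sqlite3.Row
    return {row[key]: dict(row) for row in conn.execute(f"SELECT * FROM {table}")}


def diff_tables(source_rows, target_rows):
    """Return (missing_keys, extra_keys, changed) for two keyed row sets."""
    missing = sorted(source_rows.keys() - target_rows.keys())
    extra = sorted(target_rows.keys() - source_rows.keys())
    changed = {}
    for pk in sorted(source_rows.keys() & target_rows.keys()):
        src, tgt = source_rows[pk], target_rows[pk]
        cols = {c: (src[c], tgt.get(c)) for c in src if src[c] != tgt.get(c)}
        if cols:
            changed[pk] = cols
    return missing, extra, changed


def make_db(rows):
    """Build a throwaway in-memory database holding the example table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    return conn


source = make_db([(1, "Ana", "19.99"), (2, "Bo", "5.00"), (3, "Cy", None)])
target = make_db([(1, "Ana", "19.99"), (2, "Bo", "5"), (4, "Di", "7.50")])

missing, extra, changed = diff_tables(load(source, "orders", "id"), load(target, "orders", "id"))
print(missing)  # keys present only in the source
print(extra)    # keys present only in the target
print(changed)  # per-key column differences as (source, target) pairs
```

Even this toy version separates three different failure modes (lost rows, unexpected rows, and altered values), which is the distinction that a row count alone cannot make.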

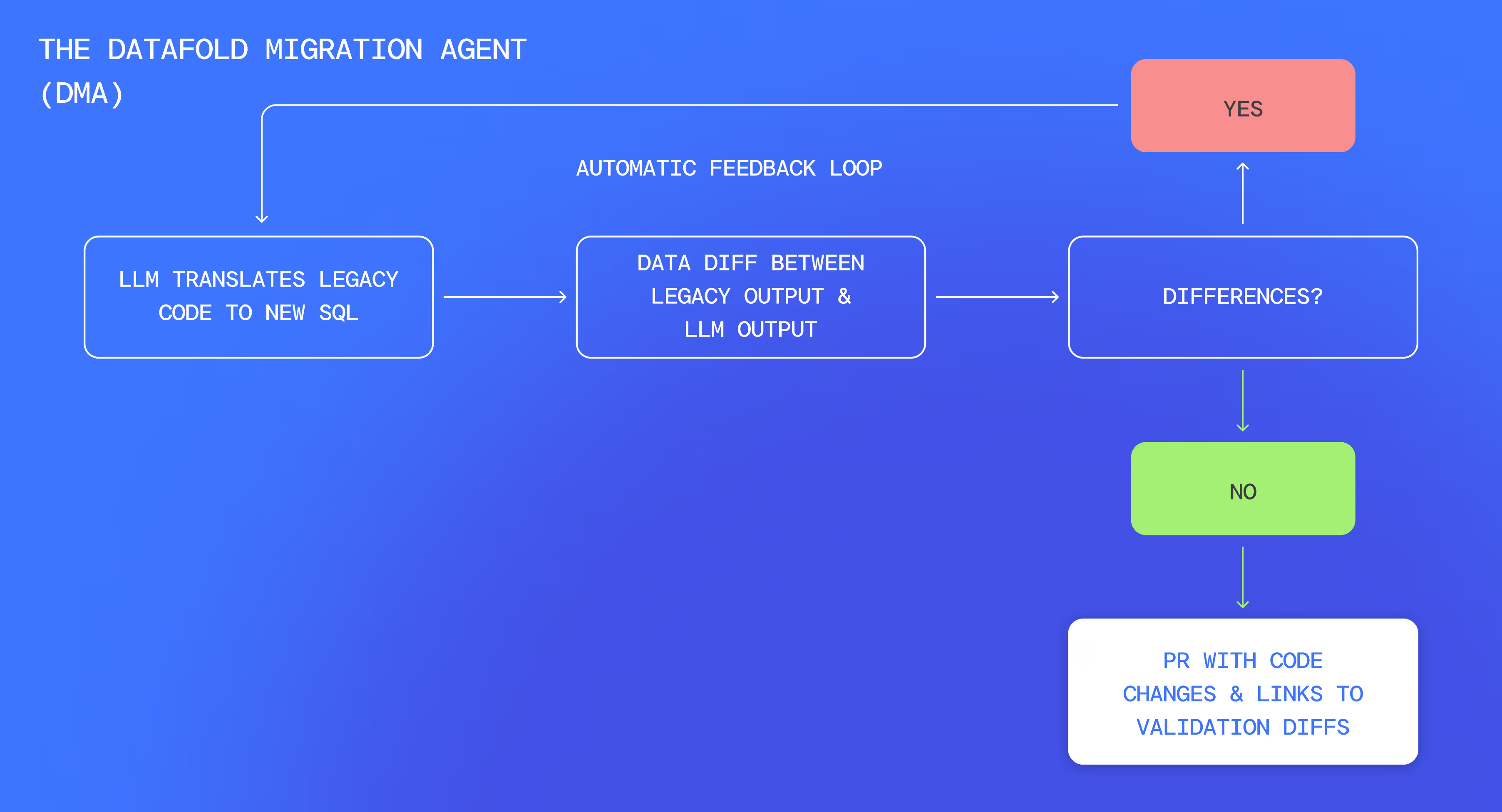

That’s exactly what Datafold’s Data Migration Agent (DMA) does. It automates both code conversion and data validation, continuously fine-tuning until 100% parity is met. Instead of relying on manual reviews, DMA ensures data accuracy, completeness, and consistency before, during, and after migration.

Here’s how it works:

- Code conversion: DMA translates SQL and transformation logic from the legacy system to the new database.

- Value-level comparison: It validates the output of the translated code against the legacy system to detect mismatches.

- Iterative fine-tuning: If discrepancies exist, DMA adjusts the code and repeats the process until full data parity is achieved.

Row-level and column-wise validation

DMA doesn’t just validate data. It fine-tunes the translated code until the outputs align. Instead of relying on manual SQL checks, it compares datasets at both row and column levels, catching inconsistencies before they impact reporting or analytics. Its iterative validation process confirms every value matches before cutover, reducing the need for time-consuming post-migration fixes.

Hidden migration pitfalls and how to prevent them

Even the most carefully planned migrations can introduce errors. Some problems are obvious—like a failed data load or missing records. Others remain hidden until they start skewing analytics, breaking reports, or triggering compliance issues. These silent failures often go unnoticed until they’ve already caused significant damage.

Why hidden errors are the costliest mistakes

Consider a company migrating financial records to a new system. Everything looks fine—until someone runs a revenue report and notices discrepancies. A seemingly minor rounding inconsistency has quietly altered thousands of transactions, throwing off revenue calculations and financial projections.

Without row-level validation, these errors spread across reports and decisions, compounding over time. The longer they go undetected, the harder they are to fix—turning what could have been a quick adjustment into a major cleanup effort.
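One way such a rounding inconsistency can arise is when the two systems round half-cent values differently. The sketch below uses Python’s `decimal` module to compare round-half-up with round-half-even (banker’s rounding) on a few made-up amounts. Which rounding mode a given database or application uses is an assumption here and should be checked against its documentation.

```python
from decimal import Decimal, ROUND_HALF_EVEN, ROUND_HALF_UP

# Made-up amounts that sit exactly on a half-cent boundary.
amounts = [Decimal("0.125"), Decimal("0.135"), Decimal("2.675"), Decimal("10.005")]
cent = Decimal("0.01")

# Assumed behavior: the legacy system rounds half up, the target rounds half to even.
legacy_values = [a.quantize(cent, rounding=ROUND_HALF_UP) for a in amounts]
target_values = [a.quantize(cent, rounding=ROUND_HALF_EVEN) for a in amounts]

legacy_total = sum(legacy_values)
target_total = sum(target_values)

# Row-level comparison pinpoints which records drifted, not just that the totals differ.
mismatched = [a for a, old, new in zip(amounts, legacy_values, target_values) if old != new]

print(legacy_total, target_total)  # 12.96 12.94
print(mismatched)                  # the two amounts that rounded differently
```

Two of four rows differ by a single cent each. Across thousands of transactions, that is enough to move a revenue total while every individual record still looks plausible.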

Preventing silent migration failures

The best way to avoid these pitfalls is to validate everything at every stage of migration. Relying on assumptions or surface-level checks isn’t enough; data integrity must be actively verified.

- Source-to-target data comparisons: Every row, column, and value should match between your original dataset and its new home.

- Schema alignment checks: Misaligned data types, missing constraints, and dropped columns can introduce inconsistencies that don’t always trigger immediate failures.
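A cheap first pass at a source-to-target comparison is a table fingerprint: a row count plus a checksum that does not depend on row order. The sketch below is one simple way to build it with `hashlib`. The function name `table_fingerprint` and the sample rows are hypothetical, and it assumes both systems return values as the same Python types, which usually takes some normalization in practice.

```python
import hashlib


def table_fingerprint(rows):
    """Return (row_count, digest) for an iterable of row tuples, independent of row order."""
    # repr() keeps None, '' and 'None' distinct, so NULL handling differences still show up.
    row_hashes = sorted(
        hashlib.sha256(repr(tuple(row)).encode("utf-8")).hexdigest() for row in rows
    )
    digest = hashlib.sha256("".join(row_hashes).encode("ascii")).hexdigest()
    return len(row_hashes), digest


source_rows = [(1, "Ana", None), (2, "Bo", "5.00")]
same_rows_reordered = [(2, "Bo", "5.00"), (1, "Ana", None)]
null_became_empty = [(1, "Ana", ""), (2, "Bo", "5.00")]

print(table_fingerprint(source_rows) == table_fingerprint(same_rows_reordered))  # True
print(table_fingerprint(source_rows) == table_fingerprint(null_became_empty))    # False
```

A matching fingerprint is good evidence that the tables agree. A mismatch says only that something differs, so it works best as a trigger for a slower row-by-row comparison.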

Data teams that prioritize data validation and monitoring avoid costly post-migration fixes. With clean, reliable data from day one, they can make confident decisions without second-guessing their numbers.

Migrate without the guesswork and eliminate risk

Data migrations succeed when there’s a clear strategy in place—not when teams rush through the process and hope for the best. Skipping validation might seem like a shortcut, but fixing errors after go-live is time-consuming, expensive, and disruptive to business operations. The key to a smooth migration is getting ahead of problems before they happen.

A smarter approach starts with pre-migration planning and schema validation. Aligning data structures before anything moves helps prevent last-minute surprises that can derail the transition. Automated code translation and validation tools like Datafold’s DMA catch and resolve inconsistencies early, ensuring that every row, column, and value will transfer correctly.

Post-migration, real-time monitoring adds another layer of protection, flagging anomalies before they cause reporting failures, broken queries, or compliance risks. Instead of scrambling to fix errors after the fact, teams can move forward with confidence, knowing their data is accurate, complete, and ready for use.

With automated validation in place, there’s no wasted time troubleshooting inconsistencies—just a smooth, controlled migration that works as expected. Don’t let hidden migration failures derail your data strategy. Discover how DMA provides complete visibility and accuracy at every stage—request a demo to see it in action.
