Data pipeline monitoring: What it is and how to implement data quality testing

Discover how data pipeline monitoring and shift-left testing catch data quality issues early, ensuring integrity and preventing costly downstream errors.

Data pipelines get disrupted for any number of reasons: unexpected schema changes, bad code updates, and data drift, all of which can lead to broken data transformations, inaccurate insights, and bad business decisions. This is where data pipeline monitors come in. They track key aspects like freshness, schema, and overall quality as data moves through stages like ingestion, transformation, and storage.

A shift-left or upstream approach catches problems—like schema changes, data anomalies, and inconsistencies—before they propagate, preventing costly fixes and bad business decisions. I’ll discuss how you can shift-left your monitoring efforts with four key data pipeline monitors: data diff, schema change detection, metrics monitoring, and data tests.

What is data pipeline monitoring?

Data pipeline monitoring is the practice of ensuring the integrity and reliability of data as it moves through various stages, from ingestion to transformation, storage, and eventual use.

Traditionally, many teams have taken a reactive approach to monitoring, addressing data quality issues only after they’ve caused disruptions downstream.

However, by shifting monitoring left—or moving data monitoring more upstream—teams can proactively catch issues before they affect reports, dashboards, or decision-making. This approach minimizes disruptions and ensures data quality is maintained from the very beginning of the pipeline.

Why data pipelines need monitoring

Without effective monitoring, bad data—such as incomplete, outdated, or incorrect records—can flow through the pipeline unchecked. Data inconsistencies can be difficult to trace back to their origin, making it even harder to resolve issues after they’ve surfaced downstream.

Even worse, problems are often only discovered after they’ve caused disruptions. For example, a schema change or an unexpected anomaly may not be noticed until an analytics dashboard breaks, leading to reactive firefighting. By the time an issue is identified, it may already have propagated through various systems, causing widespread impact.

Reactive vs. proactive monitoring

Monitoring is inherently reactive because it only identifies issues after they have already happened (but ideally before they cause disruptions, such as broken reports or failed analyses).

Proactive data monitoring flips this approach. Instead of waiting for issues to surface downstream, data teams implement monitoring early in the pipeline—known as upstream or shift-left testing. This allows them to catch potential problems at the source before they propagate and affect other parts of the data system. An example of this would be running cross-database checks between an upstream OLTP database and your OLAP database to ensure ongoing data replication is working as expected.
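Here is a minimal sketch of such a cross-database check. It assumes both databases are reachable through Python DB-API connections; two in-memory SQLite databases stand in for the OLTP source and the OLAP replica. It also assumes the table (a hypothetical `orders` table) has a numeric key column named `id`. The check compares row counts and a cheap key checksum on both sides. It is not a full comparison of every value.

```python
import sqlite3


def _fetch_one(conn, query):
    """Run a query through a DB-API cursor and return its single result row."""
    cur = conn.cursor()
    cur.execute(query)
    return cur.fetchone()


def replication_check(source, replica, table, key="id"):
    """Compare row count and a key checksum of `table` between two databases.

    Returns a list of problems; an empty list means the replica looks in sync.
    `table` and `key` are interpolated into SQL, so they must come from trusted
    configuration, never from user input.
    """
    query = f"SELECT COUNT(*), COALESCE(SUM({key}), 0) FROM {table}"
    src_count, src_sum = _fetch_one(source, query)
    dst_count, dst_sum = _fetch_one(replica, query)
    problems = []
    if src_count != dst_count:
        problems.append(f"row count mismatch: source={src_count}, replica={dst_count}")
    if src_sum != dst_sum:
        problems.append(f"key checksum mismatch: source={src_sum}, replica={dst_sum}")
    return problems


# Two in-memory SQLite databases stand in for the OLTP source and the OLAP replica.
oltp = sqlite3.connect(":memory:")
olap = sqlite3.connect(":memory:")
for db in (oltp, olap):
    db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
oltp.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 25.5), (3, 7.25)])
olap.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 25.5)])  # row 3 not replicated yet

for problem in replication_check(oltp, olap, "orders"):
    print(problem)
```

The checksum is only a sum of keys, so it can miss offsetting errors and updated values. It works best as a cheap, frequent first line of defense, with a row-level data diff as the deeper check.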

Proactive monitoring has become critical as data pipelines grow more complex, with data often coming from multiple sources and feeding into numerous systems.

Shift-left testing in data pipelines

As data engineers, we’re only just starting to embrace software engineering principles and build them into our code. Here at Datafold, we’re big proponents of shift-left testing: a very simple but powerful idea that has changed how we think about data quality monitoring entirely.

What is shift-left testing?

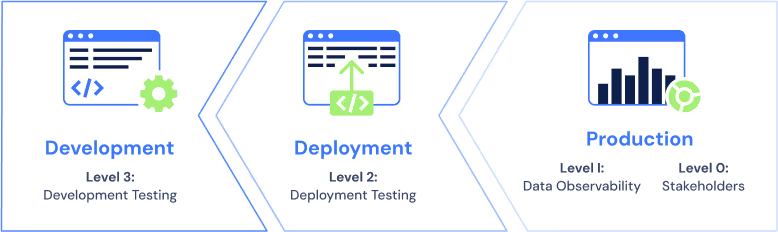

Shift-left testing originated in software engineering as a way to catch bugs earlier in the development process. Rather than testing software right before deployment, teams test throughout development, ensuring that issues are caught before they become harder (and more expensive) to fix.

Applying shift-left testing to data pipelines

In data engineering, shift-left testing means moving data quality checks earlier in the pipeline. Instead of focusing monitoring efforts at the data warehouse stage—after data has already been transformed and loaded—shift-left testing ensures that issues are detected as soon as data enters the pipeline. This is critical because data can come from multiple sources, and any issues at the source can affect the entire system if not addressed quickly.

The concept of shift-left testing is simple but powerful: test early, test often, and automate as much as possible. Data engineers can integrate proactive monitoring into their pipelines using automated tools, ensuring that data quality is continuously validated from the moment data enters the system.
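To make "test as soon as data enters the pipeline" concrete, here is a minimal sketch of an ingestion gate. Every incoming record is validated against a contract before anything is loaded. The contract (`ORDER_CONTRACT`), the quarantine structure, and the 5% rejection threshold are hypothetical choices for illustration, not a prescribed design.

```python
from dataclasses import dataclass, field

# Hypothetical data contract for incoming order records: field name -> expected type.
ORDER_CONTRACT = {"order_id": int, "customer_id": int, "amount": float}


@dataclass
class IngestResult:
    accepted: list = field(default_factory=list)
    quarantined: list = field(default_factory=list)  # (record, reason) pairs


def validate_record(record, contract=ORDER_CONTRACT):
    """Return None if the record satisfies the contract, otherwise a reason string."""
    for name, expected_type in contract.items():
        value = record.get(name)
        if value is None:
            return f"missing or null field {name!r}"
        if not isinstance(value, expected_type):
            return f"field {name!r} is {type(value).__name__}, expected {expected_type.__name__}"
    return None


def ingest(batch, max_reject_ratio=0.05):
    """Validate a batch at the ingestion boundary, before anything is loaded.

    Invalid records are quarantined with a reason; if too many fail, the whole
    batch is rejected so a broken source cannot silently flow downstream.
    """
    result = IngestResult()
    for record in batch:
        reason = validate_record(record)
        if reason is None:
            result.accepted.append(record)
        else:
            result.quarantined.append((record, reason))
    if batch and len(result.quarantined) / len(batch) > max_reject_ratio:
        raise ValueError(
            f"{len(result.quarantined)} of {len(batch)} records failed validation; halting load"
        )
    return result


sample_batch = [
    {"order_id": 1, "customer_id": 10, "amount": 19.99},
    {"order_id": 2, "customer_id": None, "amount": 5.0},
]
result = ingest(sample_batch, max_reject_ratio=0.5)
print(len(result.accepted), result.quarantined[0][1])
```

Quarantining bad records, rather than dropping them silently, keeps the evidence needed to trace a problem back to its source.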

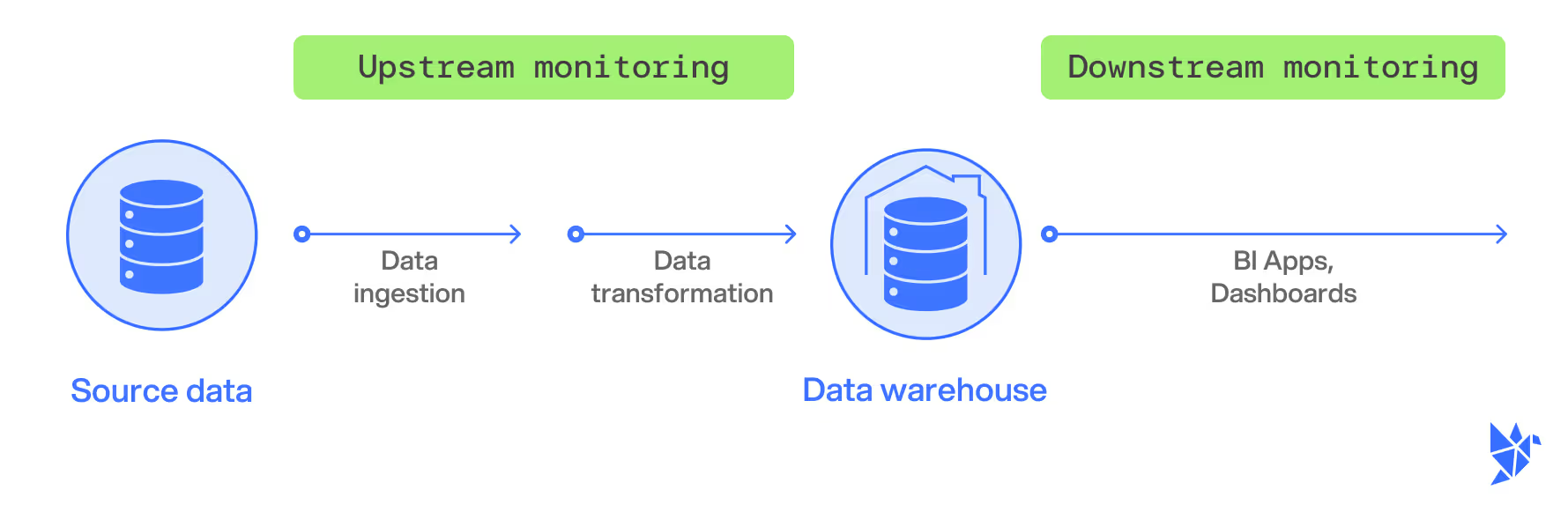

Upstream vs. downstream testing

When it comes to data pipeline monitoring, there are two primary approaches: upstream and downstream testing. Each has its merits, but proactive data quality management requires prioritizing upstream testing in addition to downstream testing.

The limits of downstream testing

Downstream testing has remained the default for too long. It happens at the end of the pipeline, after data has been processed and stored in the warehouse—and exposed in downstream applications.

While downstream testing can still catch issues, by the time they’re identified, the problems have often already caused damage. A small error at the beginning of the pipeline could lead to incorrect reports, delayed insights, or even faulty business decisions. Fixing these issues is more complex and costly because they’ve already propagated through multiple systems.

Why upstream testing is critical

Upstream testing occurs early in the pipeline, usually at the point of data ingestion or during the initial transformation stages. The primary advantage of upstream testing is that it catches issues before they have a chance to spread downstream. Problems like schema changes, missing values, or anomalies can be identified and resolved quickly, preventing them from impacting downstream users or business reports.

By catching issues early, upstream testing reduces the "blast radius" of errors and limits the need for complex troubleshooting later.

Four essential monitors for proactive monitoring

To effectively implement shift-left testing, four key monitors should be set up early in the data pipeline. These monitors help catch issues as soon as they arise, ensuring that problems are addressed before they can affect downstream processes.

Data diff monitors

Data diffs are like a “git diff” for your data. They compare two datasets—often from different stages in the pipeline, like source and destination systems. These monitors catch discrepancies in data between systems, which is especially important in environments with OLTP (Online Transaction Processing) and OLAP (Online Analytical Processing) systems.

By identifying mismatches early, data diff monitors prevent corrupted or inconsistent data from moving forward. This is one of the most “shift-left”/upstream monitors you can create for your data pipelines.
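The idea behind a data diff can be sketched in a few lines. Rows from both datasets are matched on a unique key. The diff then reports keys missing on either side and, for matched keys, which columns changed. This is an in-memory illustration under that unique-key assumption, not Datafold's implementation. Tools built for large tables typically push the comparison into the databases, for example by comparing checksums of key ranges, rather than pulling every row into memory.

```python
def data_diff(source_rows, target_rows, key):
    """Row-level diff of two datasets, matched on a unique key column.

    Returns keys found only in the source, keys found only in the target,
    and, for keys present in both, the columns whose values differ.
    """
    src = {row[key]: row for row in source_rows}
    dst = {row[key]: row for row in target_rows}
    changed = {}
    for k in sorted(src.keys() & dst.keys()):
        columns = src[k].keys() | dst[k].keys()
        diffs = {
            col: (src[k].get(col), dst[k].get(col))
            for col in sorted(columns)
            if src[k].get(col) != dst[k].get(col)
        }
        if diffs:
            changed[k] = diffs
    return {
        "only_in_source": sorted(src.keys() - dst.keys()),
        "only_in_target": sorted(dst.keys() - src.keys()),
        "changed": changed,
    }


source = [
    {"id": 1, "status": "paid", "amount": 10.0},
    {"id": 2, "status": "paid", "amount": 25.5},
    {"id": 3, "status": "refunded", "amount": 7.25},
]
target = [
    {"id": 1, "status": "paid", "amount": 10.0},
    {"id": 2, "status": "pending", "amount": 25.5},
    {"id": 4, "status": "paid", "amount": 3.0},
]
print(data_diff(source, target, key="id"))
```

Here the diff shows that row 3 never arrived, row 4 exists only in the target, and row 2's `status` differs between systems. These are exactly the discrepancies a row count alone would hide.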

Schema change detection

Schema changes, such as adding or removing columns, can easily break pipelines if not detected early. Schema change monitors ensure that any modifications to the data structure are caught as soon as they occur—ideally at the point of data ingestion. This gives teams time to adjust downstream processes and avoid breaking transformations or reports.
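A schema change monitor boils down to comparing the schema downstream code expects with the schema actually observed. The sketch below reads the observed schema from SQLite with `PRAGMA table_info`. Most SQL warehouses expose similar metadata, commonly through an `information_schema.columns` view. The expected schema `EXPECTED_ORDERS` is a hypothetical example.

```python
import sqlite3


def observed_schema(conn, table):
    """Read {column: declared type} for a SQLite table.

    PRAGMA table_info returns one row per column: (cid, name, type, notnull, dflt_value, pk).
    """
    return {row[1]: row[2].upper() for row in conn.execute(f"PRAGMA table_info({table})")}


def schema_changes(expected, observed):
    """Describe added, removed, and retyped columns between two schemas."""
    return {
        "added": sorted(observed.keys() - expected.keys()),
        "removed": sorted(expected.keys() - observed.keys()),
        "retyped": {
            col: (expected[col], observed[col])
            for col in sorted(expected.keys() & observed.keys())
            if expected[col] != observed[col]
        },
    }


# The schema the downstream transformations were written against (hypothetical).
EXPECTED_ORDERS = {"id": "INTEGER", "amount": "REAL", "status": "TEXT"}

conn = sqlite3.connect(":memory:")
# Simulate an upstream change: `status` dropped, `amount` retyped, `currency` added.
conn.execute("CREATE TABLE orders (id INTEGER, amount TEXT, currency TEXT)")

changes = schema_changes(EXPECTED_ORDERS, observed_schema(conn, "orders"))
if any(changes.values()):
    print("schema drift detected:", changes)
```

Running this at ingestion time, before transformations execute, turns a downstream breakage into an early, specific alert about which columns changed.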

Metrics monitoring

Metrics monitoring tracks key metrics like data freshness, volume, and completeness in real time. By setting thresholds for these metrics, data teams can receive alerts when values fall outside the expected range. This helps catch issues like missing data or delayed updates before they impact business operations.
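As a rough sketch of threshold-based metrics monitoring, the function below checks freshness (age of the newest row), volume (row count), and completeness (share of NULLs in one column) against fixed limits. It assumes each row carries a timezone-aware `loaded_at` timestamp. The column names and limit values are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Thresholds:
    max_staleness: timedelta  # freshness: newest row may be at most this old
    min_rows: int             # volume: fewest rows expected per load
    max_null_ratio: float     # completeness: highest tolerated share of NULLs


def check_metrics(rows, column, thresholds, now):
    """Return alert messages for freshness, volume, and completeness breaches."""
    alerts = []
    if len(rows) < thresholds.min_rows:
        alerts.append(f"volume: {len(rows)} rows, expected at least {thresholds.min_rows}")
    if not rows:
        alerts.append("freshness: no rows loaded")
        return alerts
    staleness = now - max(row["loaded_at"] for row in rows)
    if staleness > thresholds.max_staleness:
        alerts.append(f"freshness: newest row is {staleness} old")
    null_ratio = sum(row.get(column) is None for row in rows) / len(rows)
    if null_ratio > thresholds.max_null_ratio:
        alerts.append(f"completeness: {null_ratio:.0%} of {column!r} is NULL")
    return alerts


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
three_hours_ago = now - timedelta(hours=3)
rows = [
    {"customer_id": 1, "email": "a@example.com", "loaded_at": three_hours_ago},
    {"customer_id": 2, "email": None, "loaded_at": three_hours_ago},
]
limits = Thresholds(max_staleness=timedelta(hours=1), min_rows=3, max_null_ratio=0.1)
for alert in check_metrics(rows, "email", limits, now):
    print(alert)
```

Passing `now` in explicitly, instead of reading the clock inside the function, keeps the check deterministic and easy to test.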

Data tests

Data tests validate the integrity of your data by checking against predefined rules. These can include data type validation, range checks, or specific business rules. By running these tests upstream, teams can catch anomalies or invalid data at the earliest stages, preventing issues from affecting downstream systems.
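Data tests can be expressed as named rules, each a predicate that returns True for a valid row. The sketch below includes one type check, one range check, and one business rule. The rules and column names (`quantity`, `status`, `shipped_at`) are hypothetical examples. In practice, frameworks such as dbt tests play this role.

```python
# Each data test is a (name, predicate) pair; the predicate returns True for a valid row.
DATA_TESTS = [
    ("quantity is an integer",
     lambda r: isinstance(r.get("quantity"), int)),
    ("quantity is between 1 and 1000",
     lambda r: isinstance(r.get("quantity"), int) and 1 <= r["quantity"] <= 1000),
    ("shipped orders have a ship date",
     lambda r: r.get("status") != "shipped" or r.get("shipped_at") is not None),
]


def run_data_tests(rows, tests=DATA_TESTS):
    """Return {test name: [indexes of failing rows]} for every test that fails."""
    failures = {}
    for name, is_valid in tests:
        bad_rows = [i for i, row in enumerate(rows) if not is_valid(row)]
        if bad_rows:
            failures[name] = bad_rows
    return failures


orders = [
    {"quantity": 2, "status": "shipped", "shipped_at": "2024-05-01"},
    {"quantity": "3", "status": "pending", "shipped_at": None},   # wrong type
    {"quantity": 5000, "status": "shipped", "shipped_at": None},  # out of range, no ship date
]
for name, bad_rows in run_data_tests(orders).items():
    print(f"FAILED {name}: rows {bad_rows}")
```

Reporting the failing row indexes per rule, rather than a single pass/fail flag, makes it much faster to find the offending records.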

Automating data quality checks

Integrating data quality testing into your CI/CD pipeline is essential for automating data quality checks. With CI/CD, every change to the pipeline (a new data source, a transformation, or a schema update) can be tested for quality automatically, so issues don't slip past incomplete or inconsistently applied manual tests.

CI/CD for data pipelines

CI/CD integration enables continuous monitoring of data pipelines. Every time a change is made, automated tests can verify that data meets predefined quality standards. For example, if a schema change is detected, an alert can be sent immediately, allowing teams to address the issue before it causes downstream problems.
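One way to wire checks into CI is a small runner that executes every check and converts the results into a process exit code. The convention that a check is a no-argument function returning a list of problems is an assumption made for this sketch. So are the two example checks, which real pipelines would replace with queries against a dev or staging build.

```python
from typing import Callable, Dict, List

# A check takes no arguments and returns a list of problems (empty = pass).
Check = Callable[[], List[str]]


def run_checks(checks: Dict[str, Check]) -> int:
    """Run every check, print a report, and return a process exit code (0 = all passed)."""
    failed = 0
    for name, check in checks.items():
        try:
            problems = check()
        except Exception as exc:  # a check that crashes must fail the build, not pass it
            problems = [f"check raised {exc!r}"]
        print(f"[{'FAIL' if problems else 'ok'}] {name}")
        for problem in problems:
            print(f"    - {problem}")
        failed += bool(problems)
    print(f"{failed} of {len(checks)} checks failed")
    return 1 if failed else 0


# Hypothetical checks; real ones would query the dev or staging build of the pipeline.
def schema_unchanged() -> List[str]:
    return []


def no_null_order_ids() -> List[str]:
    return ["3 rows with NULL order_id in staging.orders"]


CHECKS = {"schema unchanged": schema_unchanged, "no NULL order ids": no_null_order_ids}
exit_code = run_checks(CHECKS)
```

In an actual CI job, the script would end with `sys.exit(run_checks(CHECKS))`. CI systems such as GitHub Actions and GitLab CI mark a step as failed when its command exits with a non-zero status, which blocks the change from merging until the data issue is resolved.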

Tools that help

Tools like Datafold make it easy to set up automated data quality checks as they offer built-in tests for schema validation, data diffs, and anomaly detection. By automating these processes, data engineers can focus on higher-value tasks, confident that their pipelines are being monitored continuously for issues.

Data pipeline monitoring best practices

We recommend three best practices you can implement today to see immediate results. Together, they help teams shift from reactive monitoring to a proactive, shift-left approach that ensures data quality at every stage of the pipeline.

Real-time anomaly detection

Real-time anomaly detection is absolutely critical. By setting up real-time alerts for issues like data freshness, volume discrepancies, or schema changes, data teams can respond to problems as soon as they arise, preventing them from escalating. This ensures that issues are addressed before they can affect downstream users.
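A simple starting point for anomaly detection is a z-score rule. It flags a new value, such as today's row count, when it sits several standard deviations away from recent history. The 3.0 threshold and the seven-observation minimum are arbitrary assumptions. The rule also ignores seasonality such as weekday versus weekend volumes, which production detectors usually need to model.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, z_threshold=3.0, min_history=7):
    """Flag `latest` if it sits more than `z_threshold` standard deviations from the history's mean."""
    if len(history) < min_history:
        return False  # too little history to judge; a real setup might alert on this too
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # perfectly flat history: any change is unusual
    return abs(latest - mu) / sigma > z_threshold


# Row counts from the last seven daily loads (illustrative numbers).
daily_row_counts = [10_120, 9_980, 10_050, 10_210, 9_940, 10_090, 10_010]

for todays_count in (10_100, 4_200):
    if is_anomalous(daily_row_counts, todays_count):
        print(f"ALERT: {todays_count} rows loaded, far outside the usual range")
```

Running the check as each load lands, rather than in a nightly batch, is what makes the alerting real-time.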

Version control & logging

Version control and logging should be incorporated into every data pipeline. Version-controlled transformations allow teams to track changes and roll back when necessary, ensuring that data integrity is maintained even as the pipeline evolves. Comprehensive logging enables fast root-cause analysis when issues occur, helping teams resolve problems quickly.
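Version control and logging reinforce each other when every log line records which version of the transformation code produced it. The sketch below wraps a pipeline step and emits one JSON log line with a run ID, step name, code version, row counts, and duration. The field names and the `code_version` value (for example, a git commit SHA supplied by your deployment) are hypothetical.

```python
import json
import logging
import time
import uuid

logger = logging.getLogger("pipeline")


def run_step(name, transform, rows, run_id, code_version):
    """Run one pipeline step and emit a single structured (JSON) log line about it."""
    record = {"run_id": run_id, "step": name, "code_version": code_version, "rows_in": len(rows)}
    start = time.perf_counter()
    try:
        output = transform(rows)
    except Exception as exc:
        record.update(status="error", error=repr(exc), seconds=round(time.perf_counter() - start, 3))
        logger.error(json.dumps(record))
        raise
    record.update(status="ok", rows_out=len(output), seconds=round(time.perf_counter() - start, 3))
    logger.info(json.dumps(record))
    return output


logging.basicConfig(level=logging.INFO, format="%(message)s")
run_id = str(uuid.uuid4())  # ties together every log line from one pipeline run
rows = [{"amount": 10.0}, {"amount": -3.0}, {"amount": 7.5}]
clean = run_step(
    "drop_negative_amounts",
    lambda rs: [r for r in rs if r["amount"] >= 0],
    rows,
    run_id,
    code_version="abc1234",  # hypothetical: the git commit of the transformation code
)
```

With `rows_in`, `rows_out`, and `code_version` in every line, a sudden drop in row counts can be traced to the specific step and commit that introduced it.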

Automate…everything

If it sounds like we’ve talked about automation a bunch of times already, it’s because too often we come across legacy architectures that still rely on manual data validation and ad-hoc monitoring processes, leading to inconsistent and error-prone results. Manual checks not only slow down workflows but also increase the risk of human error, making it difficult to maintain data quality at scale.

Automating as much of the data quality testing as possible is key to building a resilient pipeline. Tools like data diff monitors, schema change detectors, and automated tests ensure that data quality is continuously validated without manual intervention, freeing up data engineers to focus on higher-level tasks like optimizing pipeline performance, designing new transformations, and improving data governance standards.

Upstream monitors = more resilient data pipelines

Data pipeline resilience depends on catching issues before they snowball. By moving data quality testing earlier in the pipeline, you can prevent small issues from becoming large-scale problems, build trust in your data, and enable faster, more accurate decision-making.

With automated tools, CI/CD integration, and a focus on upstream testing, proactive data monitoring is the key to keeping your pipelines healthy and your data reliable.

We can help. If you're interested in learning more about data observability tooling and how Datafold’s data diffing can help your team proactively detect bad code before it breaks production data:

- Book time with one of our solutions engineers to learn more about how Datafold enables effective data observability through comprehensive monitoring, and which monitors make sense for your specific data environment and infrastructure.

- If you're ready to get started, sign up for a 14-day free trial of Datafold to explore how data diffing can improve your data quality practices within, and beyond, data teams.
