The top data observability tools

Explore how leading data observability tools integrate with the modern data stack to provide real-time monitoring that supports data quality, reliability, and scalable infrastructure management.

The best data observability tools provide teams with real-time insights into the health and reliability of their data systems, enabling them to detect and resolve issues proactively.

Why? Data observability has become increasingly important for organizations that rely on data-driven decision-making. As data pipelines grow in complexity and scale, it is essential to have full visibility into data quality, lineage, and pipeline performance to prevent downstream issues.

In this article, we will explore the top features of leading data observability platforms and how they can help improve your data quality and reliability.

Choosing the right tool

The modern data stack has transformed the way organizations manage and utilize data, with tools that enable greater flexibility, scalability, and performance. However, as data systems become more decentralized and complex, ensuring the health and quality of data across these diverse systems has become increasingly challenging.

This is where data observability tools play a crucial role: they provide a unified layer of monitoring that spans the entire data ecosystem, from ingestion to analysis. Integrating data observability into the modern data stack helps teams trust their data even as their infrastructure grows in complexity and scale.

Selecting the right data observability tool is a critical decision that can impact your entire data ecosystem. The tool you choose should align with your organization’s unique requirements, both in terms of functionality and scalability.

Whether you are focused on improving data quality, detecting anomalies, or ensuring pipeline performance, it’s essential to assess how well each tool fits within your existing infrastructure. By carefully evaluating the features that matter most to your team—such as real-time alerts, automation, and reporting—you can make an informed choice that helps your data operations run smoothly and efficiently.

Common challenges in data observability

Even with the best data observability tools, there are inherent challenges that teams may encounter. One common issue is alert fatigue—your data monitoring tool might be configured to generate too many alerts, making it difficult to distinguish between critical issues and non-urgent anomalies.

Another challenge is managing false positives, where alerts are triggered by temporary anomalies that don't represent real threats to data quality. Teams must also contend with scaling observability tools in complex, fast-growing data environments where new pipelines, tables, and schemas are constantly being added. Addressing these challenges requires fine-tuning alerts, regularly reviewing monitoring settings, and ensuring observability tools are optimized for scalability.
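A common way to fine-tune alerts against temporary anomalies is to require a condition to persist across several consecutive checks before anyone is notified. The Python sketch below illustrates that idea. The function name `should_alert` and the default of three consecutive breaches are illustrative assumptions, not settings from any particular tool.

```python
def should_alert(breach_history, required_consecutive=3):
    """Return True only if the most recent checks all breached their threshold.

    breach_history: list of booleans, oldest first; True means the check failed.
    required_consecutive: hypothetical tuning knob, i.e. how many back-to-back
    failures are needed before alerting.
    """
    if required_consecutive < 1:
        raise ValueError("required_consecutive must be at least 1")
    recent = breach_history[-required_consecutive:]
    # Too little history, or any passing check in the window, suppresses the alert.
    return len(recent) == required_consecutive and all(recent)


if __name__ == "__main__":
    # A single transient spike does not page anyone...
    print(should_alert([False, False, True]))
    # ...but a breach that persists for three checks does.
    print(should_alert([False, True, True, True]))
```

The trade-off is latency: a larger window suppresses more false positives but also delays alerts on real incidents by the same number of check intervals.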

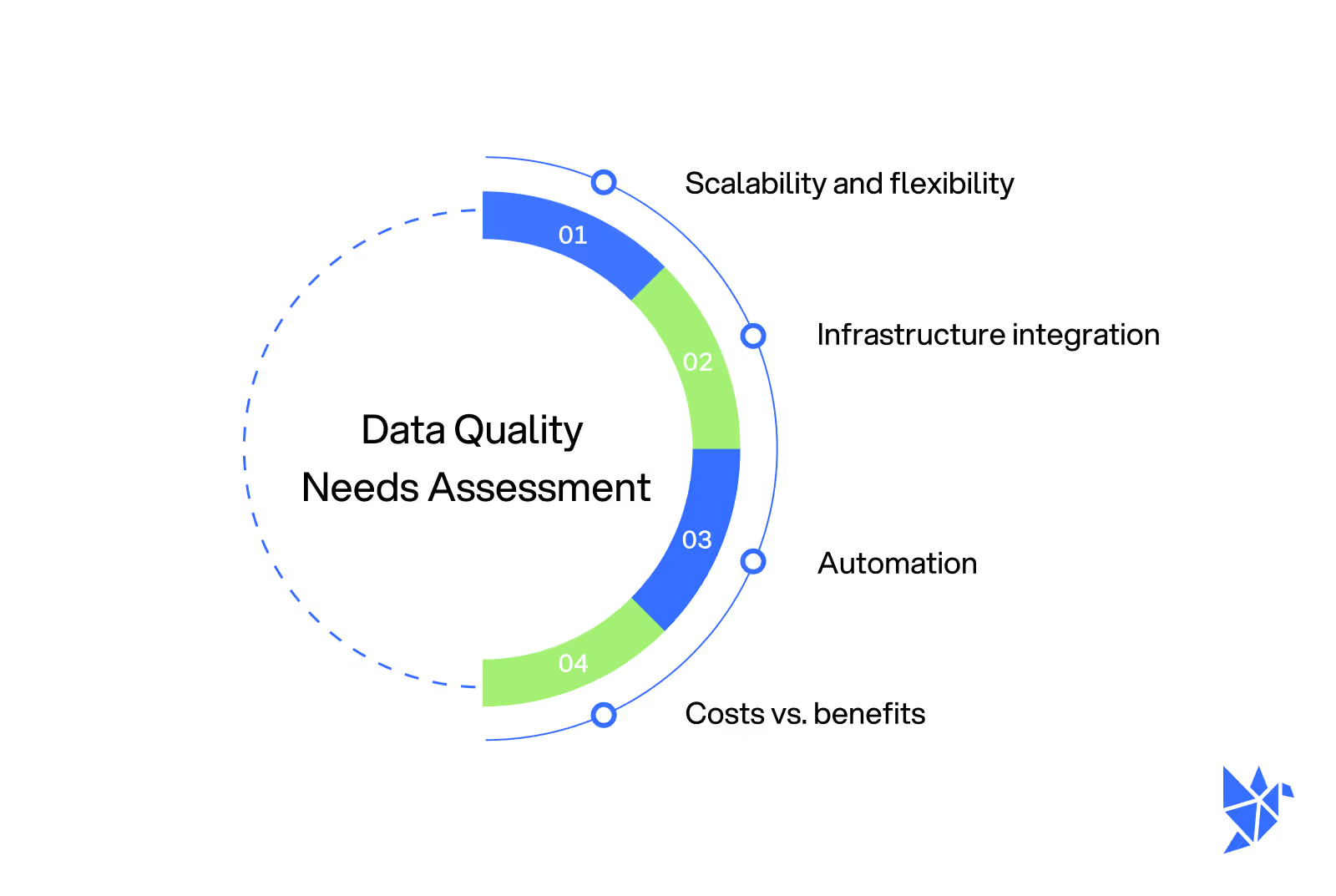

Assessing your data quality testing needs

Before selecting a data observability tool, it’s essential to evaluate the specific requirements of your data environment. Do you need more granular control over schema changes, or is your team focused on anomaly detection? By aligning your tool's features with your organization's pain points, you can choose a solution that best fits your needs.

Scalability and flexibility

As your data systems grow, your observability tool needs to scale with them. Look for tools that offer flexibility in managing large datasets and complex data environments. Ensure that the tool can handle increasing data volumes and integrate seamlessly with other systems as your infrastructure evolves.

Integration with existing infrastructure

When implementing a data observability tool, seamless integration with your current tech stack is crucial. Many organizations already rely on a mix of tools for data pipelines, storage, and processing, making it vital for a new observability tool to fit into these workflows without causing disruption.

Whether it’s integrating with orchestration platforms like Apache Airflow, data warehouses like Snowflake, or transformation tools like dbt, the right observability tool should enhance your existing setup without requiring a full overhaul. Look for tools that offer pre-built connectors and APIs to simplify this process.

Automation

Automation plays a significant role in making data observability efficient. Choose tools that offer robust automation features, such as automated quality checks and anomaly detection, which reduce the manual effort needed for monitoring.

Cost vs. benefits

Consider the return on investment when choosing a data observability tool. While some solutions may come with higher upfront costs, the time and resources saved through proactive monitoring and prevention of data quality issues often outweigh the initial investment. Evaluate pricing models carefully and look for features that align with your long-term goals.

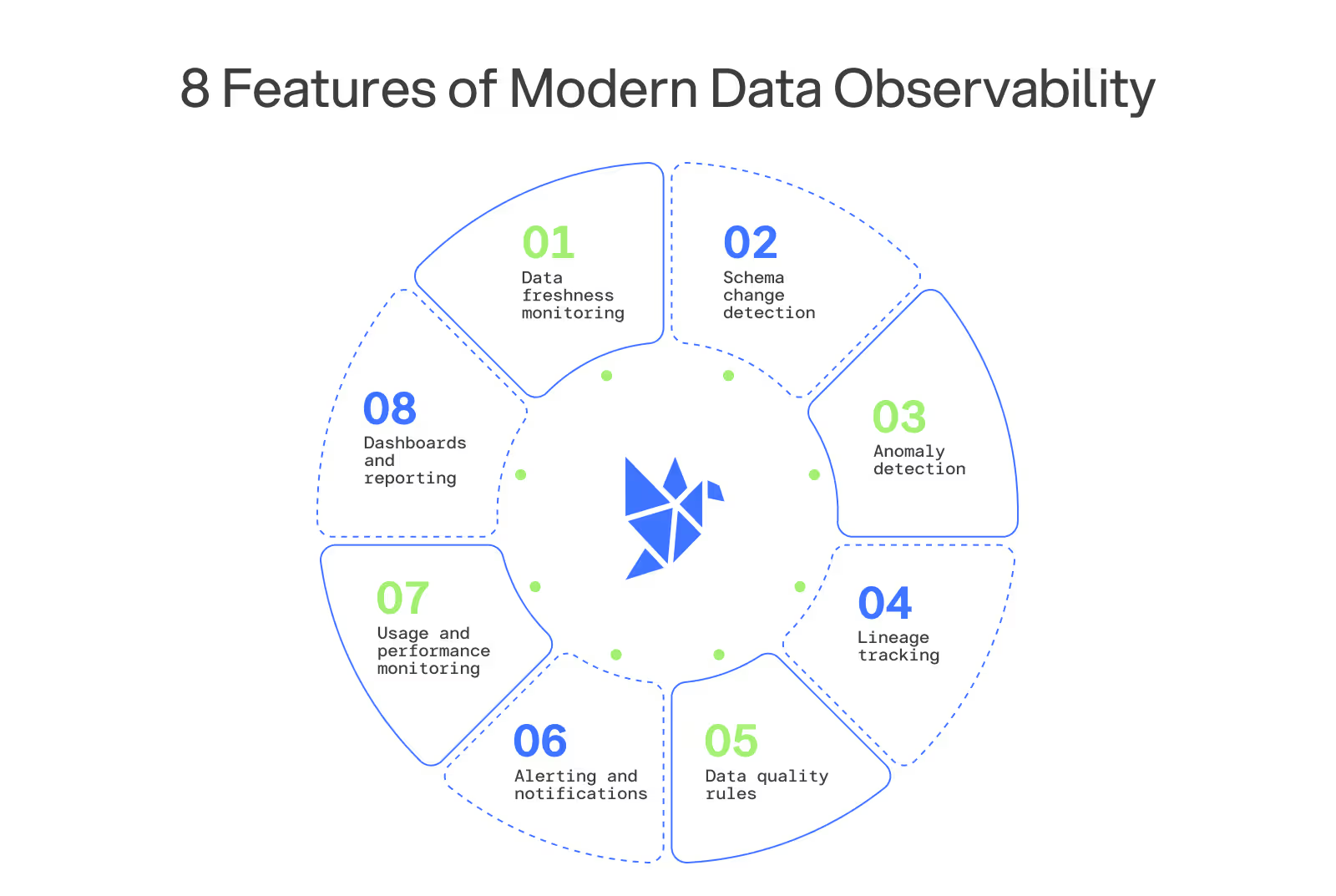

Modern data observability features

Data observability tools come with powerful features that make life easier for data teams. In the sections below, we'll cover freshness monitoring, schema change detection, anomaly detection, lineage tracking, data quality rules, alerting and notifications, usage and performance monitoring, and dashboards and reporting.

Data freshness monitoring

Ensuring the timeliness of data is crucial for data observability. Data freshness monitoring allows teams to continuously check whether datasets are arriving on time and updated according to predefined schedules. This feature ensures that time-sensitive insights are generated from the latest data, preventing stale or outdated information from skewing analytics.

In the case of delays or lags, notifications can be sent to relevant teams, enabling them to troubleshoot pipeline issues before they affect business decisions. Freshness monitoring also offers historical trends, helping teams pinpoint recurring delays and optimize pipeline performance. This level of visibility ensures that data-dependent operations such as dashboards, models, and reports can confidently rely on current and accurate information.

Without real-time freshness tracking, companies risk using outdated data, which can lead to poor business outcomes and loss of trust in the data systems.
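At its core, a freshness check compares the time a table was last updated against an expected maximum delay. The sketch below assumes the last-update timestamp has already been fetched (for example, from a `MAX(updated_at)` query or warehouse metadata). The `FreshnessCheck` class, the table name, and the two-hour threshold are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class FreshnessCheck:
    """Hypothetical check definition for one table."""
    table: str
    max_staleness: timedelta  # assumed SLA: maximum acceptable age of the newest data


def check_freshness(check, last_updated, now=None):
    """Return (is_fresh, lag) for one table, given its last-update timestamp."""
    if now is None:
        now = datetime.now(timezone.utc)
    lag = now - last_updated
    return lag <= check.max_staleness, lag


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    check = FreshnessCheck("analytics.orders", timedelta(hours=2))
    # Pretend the table was last loaded three hours ago.
    is_fresh, lag = check_freshness(check, now - timedelta(hours=3), now=now)
    if not is_fresh:
        print(f"ALERT: {check.table} is stale by {lag}")
```

Storing each computed `lag` alongside the pass/fail result is what makes historical trend analysis of recurring delays possible.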

Schema change detection

Schema changes can be disruptive, especially in environments where downstream processes depend on a specific structure of data. A key feature in data observability tools is schema change detection, which alerts teams as soon as modifications are made to table structures, column types, or schema configurations.

This helps prevent breaking changes from reaching production by offering early warnings and detailed change logs. Instead of discovering broken pipelines after a deployment, teams can proactively fix any incompatibilities or update their systems to accommodate the changes. With automated detection, the tool continuously monitors for alterations, whether intentional or accidental, and provides notifications to ensure that the data's integrity remains intact.

Schema change detection is particularly valuable in complex environments where multiple teams might be updating or modifying tables. It helps reduce the likelihood of unnoticed changes disrupting production systems or triggering unexpected errors.
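Schema change detection can be approximated by snapshotting each table's column names and types (for instance, from the warehouse's `information_schema.columns` view) and comparing consecutive snapshots. This Python sketch is a simplified illustration. The functions `diff_schemas` and `is_breaking`, and the policy that removed or retyped columns count as breaking, are assumptions made for the example.

```python
def diff_schemas(old, new):
    """Compare two {column_name: data_type} snapshots of the same table."""
    shared = set(old) & set(new)
    return {
        "added": sorted(set(new) - set(old)),
        "removed": sorted(set(old) - set(new)),
        "retyped": sorted((col, old[col], new[col]) for col in shared if old[col] != new[col]),
    }


def is_breaking(changes):
    """Hypothetical policy: dropped or retyped columns may break downstream consumers."""
    return bool(changes["removed"] or changes["retyped"])


if __name__ == "__main__":
    yesterday = {"id": "INTEGER", "email": "VARCHAR", "amount": "NUMERIC(10,2)"}
    today = {"id": "INTEGER", "amount": "FLOAT", "created_at": "TIMESTAMP"}
    changes = diff_schemas(yesterday, today)
    print(changes)
    if is_breaking(changes):
        print("ALERT: potentially breaking schema change")
```

Added columns are reported but treated as non-breaking here, since most downstream queries select columns by name; a team with `SELECT *` consumers might choose a stricter policy.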

Anomaly detection

Data anomalies are deviations from expected patterns and can indicate significant issues such as data corruption, unauthorized changes, or pipeline failures. Anomaly detection in data observability tools typically uses statistical or machine learning models to continuously monitor datasets and identify irregularities based on historical patterns. This feature provides early detection of problems such as unexpected spikes, dips, or missing data that might otherwise go unnoticed until they impact critical systems or business decisions.

Anomaly detection not only flags the anomalies but also often provides contextual information to help teams understand their potential causes. By catching data issues before they escalate, this feature helps prevent bad data from propagating through systems and ensures that pipelines remain healthy and reliable. It is particularly useful in large-scale environments with complex data dependencies, where manual monitoring would be time-consuming and ineffective.
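Production tools often use models that account for trends and seasonality, but the underlying idea can be shown with a much simpler statistical baseline. The sketch below swaps in a z-score test on daily row counts: a value is flagged when it lies more than `z_threshold` standard deviations from the historical mean. The threshold of 3 and the sample counts are illustrative assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history, value, z_threshold=3.0):
    """Flag `value` if it is more than `z_threshold` standard deviations from the mean of `history`."""
    if len(history) < 2:
        return False  # stdev needs at least two points; not enough history to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return value != mu  # perfectly flat history: any change is unusual
    return abs(value - mu) / sigma > z_threshold


if __name__ == "__main__":
    # Hypothetical daily row counts for one table over the past week.
    daily_row_counts = [10_120, 9_980, 10_250, 10_040, 9_890, 10_110, 10_060]
    for today in (10_150, 4_200):
        print(today, "anomalous" if is_anomalous(daily_row_counts, today) else "normal")
```

A z-score assumes the metric is roughly stable; a metric with weekly seasonality, for example, would need a separate baseline per weekday or a model that captures the pattern.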

Lineage tracking

Data lineage tracking gives teams visibility into how data flows from its origin to its final destination within the pipeline. This is vital for understanding the relationships and dependencies between various datasets and systems.

With lineage tracking, teams can trace the journey of data across multiple stages—identifying where it originated, how it was transformed, and where it is currently being used. This transparency helps teams quickly diagnose the root cause of data quality issues and fix them with minimal disruption.

Lineage tracking also plays a key role in governance, as it enables data teams to understand how changes in one part of the pipeline may affect other parts. For example, if an upstream table is modified or delayed, lineage tracking can highlight which downstream processes are at risk of being affected. This insight is crucial for maintaining data integrity and minimizing operational risks.
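Under the hood, lineage is a directed graph of data assets, and impact analysis is a graph traversal. In this sketch the `LINEAGE` dictionary is a hypothetical, hand-written graph (real tools typically derive it from query logs or transformation code). Walking it forward finds the downstream assets at risk; walking the inverted graph finds upstream candidates for root-cause analysis.

```python
from collections import deque

# Hypothetical lineage graph: each asset maps to the assets that read from it.
LINEAGE = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.customer_ltv"],
    "marts.revenue": ["dashboard.finance"],
    "marts.customer_ltv": [],
    "dashboard.finance": [],
}


def reachable(graph, start):
    """Breadth-first traversal returning every asset reachable from `start`."""
    seen, order = {start}, []
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for neighbor in graph.get(current, []):
            if neighbor not in seen:
                seen.add(neighbor)
                order.append(neighbor)
                queue.append(neighbor)
    return order


def invert(graph):
    """Flip edge direction so a traversal follows data back toward its sources."""
    parents = {}
    for parent, children in graph.items():
        for child in children:
            parents.setdefault(child, []).append(parent)
    return parents


if __name__ == "__main__":
    # Impact analysis: what is at risk if staging.orders is delayed?
    print(reachable(LINEAGE, "staging.orders"))
    # Root-cause analysis: where could a bad number on the dashboard come from?
    print(reachable(invert(LINEAGE), "dashboard.finance"))
```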

Data quality rules

One of the most essential features in data observability tools is the ability to enforce data quality rules. These rules allow teams to define standards and thresholds for acceptable data across pipelines. Whether it's setting constraints on data types, acceptable ranges for numeric values, or the format of strings, data quality rules help prevent incorrect or incomplete data from entering downstream processes.

By automating validation checks based on these rules, data observability tools provide a first line of defense against data quality issues. Teams can customize rules to fit specific use cases, ensuring that data meets both internal and external compliance standards.

The ability to track compliance with these rules over time also offers valuable insights into recurring issues or areas where pipelines need optimization. Regular monitoring against these rules prevents problems from reaching production and ensures that business decisions are made using accurate and reliable data.
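Data quality rules can be expressed as per-column predicates that every row must satisfy. The sketch below covers the three kinds of constraint mentioned above (type, numeric range, and string format). The `RULES` mapping, the 0 to 100,000 range for `amount`, and the deliberately loose email pattern are hypothetical examples, not recommended values.

```python
import re

# Hypothetical, deliberately loose email format check.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")

# Hypothetical rules for an orders table: column -> predicate the value must satisfy.
RULES = {
    "order_id": lambda v: isinstance(v, int),                                          # type
    "amount": lambda v: isinstance(v, (int, float)) and 0 <= v <= 100_000,              # range
    "email": lambda v: isinstance(v, str) and EMAIL_PATTERN.fullmatch(v) is not None,  # format
}


def validate(rows, rules):
    """Return (row_index, column, value) for every rule violation."""
    failures = []
    for index, row in enumerate(rows):
        for column, check in rules.items():
            value = row.get(column)  # a missing column becomes None and fails its rule
            if not check(value):
                failures.append((index, column, value))
    return failures


def pass_rate(rows, failures):
    """Fraction of rows with no violations; recording this per run shows trends over time."""
    if not rows:
        return 1.0
    failing_rows = {index for index, _, _ in failures}
    return 1 - len(failing_rows) / len(rows)


if __name__ == "__main__":
    rows = [
        {"order_id": 1, "amount": 42.5, "email": "a@example.com"},
        {"order_id": 2, "amount": -5, "email": "not-an-email"},
        {"order_id": 3, "email": "c@example.com"},
    ]
    failures = validate(rows, RULES)
    print(failures)
    print(f"pass rate: {pass_rate(rows, failures):.0%}")
```

Because a missing value is read as `None` and fails its rule, the same mechanism doubles as a simple completeness check.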

Alerting and notifications

Alerting and notifications are essential for ensuring that data teams are aware of potential issues as soon as they occur. Data observability tools include configurable alerting systems that notify teams based on customizable thresholds, such as delays in data arrival, schema changes, or data anomalies.

Alerts can be routed to different teams depending on the type or severity of the issue, ensuring that the right people are informed at the right time. By integrating with common communication tools such as Slack, email, or incident management platforms, observability tools ensure that alerts are actionable and timely.

Configuring alerts with different levels of priority helps avoid alert fatigue, where too many notifications lead to critical issues being overlooked. This feature allows teams to take swift action to address data problems before they affect business operations, improving response times and reducing the impact of data incidents.
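Severity-based routing and deduplication are two simple levers against alert fatigue. The sketch below groups alerts by destination according to a hypothetical `ROUTES` table. The channel strings are placeholders, and actually delivering messages to Slack, email, or an incident management platform is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen makes alerts hashable, so duplicates can be detected
class Alert:
    source: str    # e.g. a table name
    kind: str      # e.g. "freshness", "schema", "anomaly"
    severity: str  # "critical", "warning", or "info"


# Hypothetical routing table; channel names are placeholders, not real integrations.
ROUTES = {
    "critical": ["pagerduty:data-oncall", "slack:#data-incidents"],
    "warning": ["slack:#data-alerts"],
    "info": ["email:data-digest"],
}


def route(alerts, routes=ROUTES):
    """Group alerts by destination, dropping exact duplicates to limit noise."""
    deliveries = {}
    seen = set()
    for alert in alerts:
        if alert in seen:
            continue
        seen.add(alert)
        for channel in routes.get(alert.severity, []):
            deliveries.setdefault(channel, []).append(alert)
    return deliveries


if __name__ == "__main__":
    stale = Alert("marts.revenue", "freshness", "critical")
    spike = Alert("staging.orders", "anomaly", "warning")
    for channel, batch in route([stale, stale, spike]).items():
        print(channel, [a.source for a in batch])
```

Sending low-severity alerts to a periodic digest instead of a live channel is one way to keep them visible without competing with critical incidents.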

Usage and performance monitoring

Monitoring the performance and usage of data pipelines ensures that data systems are running efficiently and that resources are optimized. Performance monitoring tracks key metrics such as processing times, throughput, and resource utilization, allowing teams to identify and address bottlenecks. This ensures that pipelines can handle increasing data volumes and operate smoothly even during peak loads.

Usage monitoring provides insights into which datasets or processes are used most frequently and how they impact system performance. This information is useful for capacity planning, resource allocation, and optimizing costs.

By combining performance and usage monitoring, data observability tools enable teams to understand how well their data systems are functioning and where improvements can be made. Proactive monitoring also helps avoid issues like slow queries, system crashes, or unnecessary resource consumption, ensuring that data flows seamlessly throughout the organization.
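Processing-time monitoring often looks at a high percentile of run durations rather than the average, so that occasional slow runs are not hidden. The sketch below uses the nearest-rank method to compute a per-job 95th percentile from run logs and flags jobs that exceed a time budget. The job names, durations, and 500-second budget are illustrative assumptions.

```python
import math
from collections import defaultdict


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of values at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def slow_jobs(runs, budget_seconds, pct=95):
    """Return {job: percentile duration} for jobs whose percentile exceeds the budget.

    runs: iterable of (job_name, duration_seconds) pairs, e.g. parsed from scheduler logs.
    """
    durations = defaultdict(list)
    for job, seconds in runs:
        durations[job].append(seconds)
    flagged = {}
    for job, values in durations.items():
        p = percentile(values, pct)
        if p > budget_seconds:
            flagged[job] = p
    return flagged


if __name__ == "__main__":
    # Hypothetical run history for two pipeline jobs.
    runs = [
        ("load_orders", 120), ("load_orders", 130), ("load_orders", 610),
        ("build_marts", 300), ("build_marts", 320),
    ]
    print(slow_jobs(runs, budget_seconds=500))
```

With only a handful of runs per job, the 95th percentile is effectively the maximum, so in practice this kind of check is usually computed over a longer window of history.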

Dashboards and reporting

Dashboards provide a centralized view of the health and status of data pipelines, offering teams a real-time overview of critical metrics. With customizable dashboards, data observability tools allow users to track key indicators such as data freshness, schema changes, and performance anomalies at a glance. These visual representations make it easy to spot trends, identify potential issues, and monitor the overall health of data systems.

Reporting features complement dashboards by allowing teams to generate detailed summaries of pipeline performance and data quality over time. These reports are invaluable for sharing insights with stakeholders or auditing the health of data systems.

By providing both real-time and historical views, dashboards and reporting tools empower data teams to make informed decisions and stay proactive about data observability. This ensures that issues are identified and resolved before they impact business outcomes.

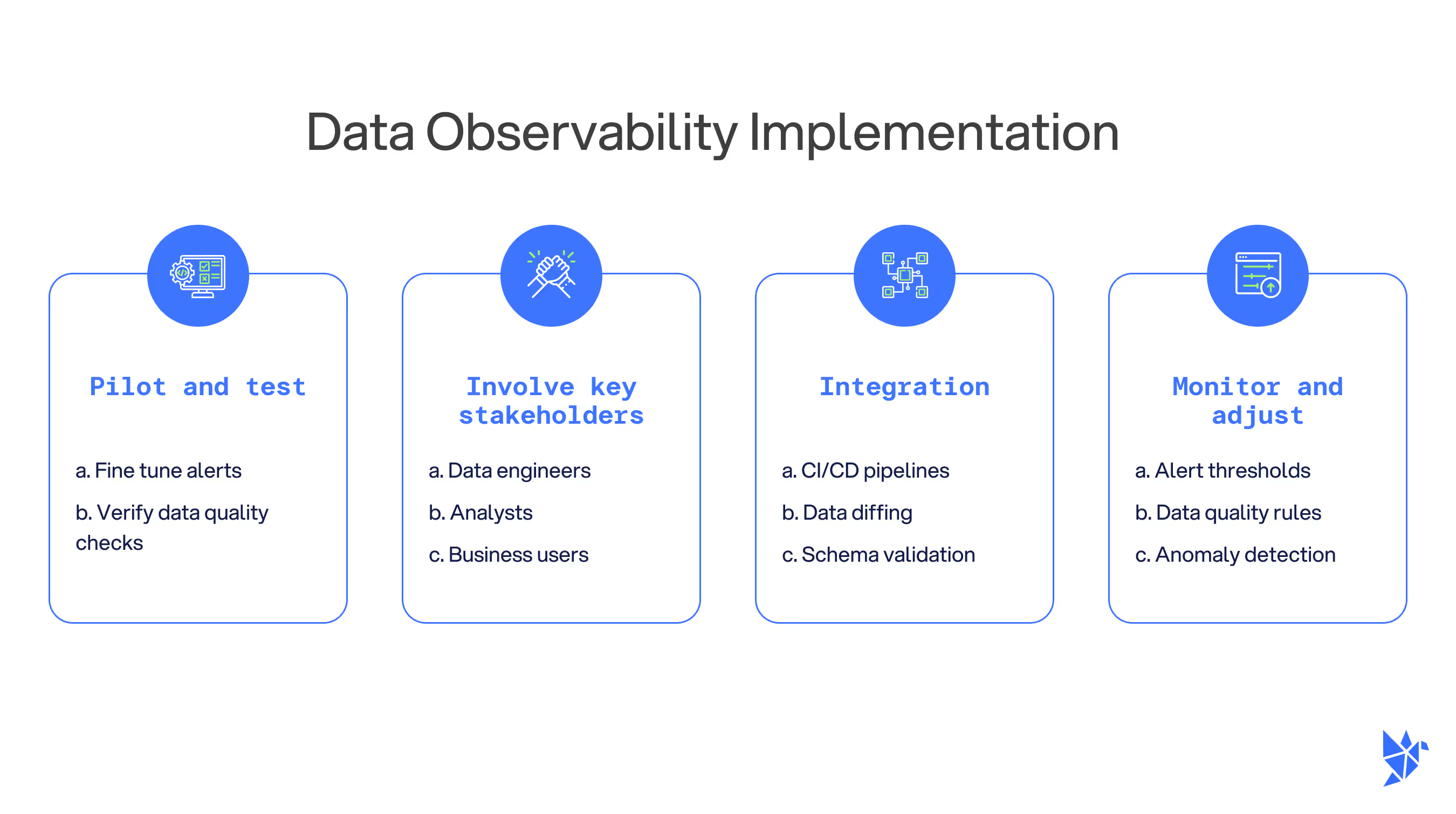

Implementing data observability tools

Implementing a data observability tool can seem overwhelming, but a phased approach makes it manageable. Begin with a clear understanding of your organization’s specific data needs and pain points so that the tool you choose aligns with your goals. A structured implementation plan then helps maximize the tool's impact on data reliability and performance over the long term.

Pilot and test

Once you’ve selected the right tool, it’s important to run a pilot to test its functionality in your environment. This ensures that the tool integrates well with your existing pipelines and addresses your specific data observability needs. Use the pilot phase to fine-tune alert settings and verify data quality checks.

Involve key stakeholders

Successful implementation of a data observability tool requires buy-in from key stakeholders, including data engineers, analysts, and even business users. Ensuring everyone understands the benefits of the tool can lead to better adoption and more efficient problem resolution across teams.

Integrate into CI/CD pipelines

In modern data engineering, continuous integration and continuous delivery (CI/CD) practices are essential for streamlining data operations. Data observability tools should integrate into CI/CD pipelines, ensuring that data quality checks happen early and often. This helps prevent bad data from being deployed into production, reducing the need for reactive fixes.

Tools that offer data diffing capabilities or schema validation in CI/CD pipelines add another layer of protection, ensuring that code changes don’t break downstream processes or introduce data integrity issues.
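To illustrate the idea of data diffing in CI, the sketch below compares a table built from the production branch with the same table built from a pull request, matching rows by primary key. It is a simplified, in-memory illustration and not Datafold's implementation or API. The function names, the exit-code policy, and the sample rows are hypothetical, and real diffing tools typically need to handle tables far too large to load into memory.

```python
def diff_tables(base_rows, pr_rows, key):
    """Compare two versions of a table, given as lists of dict rows, by primary key."""
    base = {row[key]: row for row in base_rows}
    pr = {row[key]: row for row in pr_rows}
    return {
        "removed": sorted(base.keys() - pr.keys()),  # keys present only in production
        "added": sorted(pr.keys() - base.keys()),    # keys present only in the PR build
        "changed": sorted(k for k in base.keys() & pr.keys() if base[k] != pr[k]),
    }


def ci_exit_code(diff):
    """Hypothetical CI gate: non-zero when any row differs, so a reviewer must sign off."""
    return 1 if any(diff.values()) else 0


if __name__ == "__main__":
    prod = [{"id": 1, "total": 10.0}, {"id": 2, "total": 20.0}, {"id": 3, "total": 5.0}]
    dev = [{"id": 1, "total": 10.0}, {"id": 2, "total": 22.0}, {"id": 4, "total": 7.0}]
    result = diff_tables(prod, dev, key="id")
    print(result, "exit code:", ci_exit_code(result))
```

Failing the build on any difference is the strictest policy; a team might instead allow expected changes and fail only on differences in columns the pull request was not meant to touch.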

Monitor and adjust

Data observability is an ongoing process. Continuously monitor the effectiveness of your tool, adjusting alert thresholds, quality rules, and anomaly detection parameters as needed. Regular audits of your pipelines can help identify areas for improvement and ensure that your data observability practices stay up to date with evolving requirements.

Getting started with Datafold

For teams looking to improve their data quality practices, Datafold offers powerful data observability features such as data diffing and comprehensive monitoring. Start with a free 14-day trial to explore how Datafold can help your team catch issues early, optimize your pipelines, and ensure the reliability of your data systems.

If you're interested in learning more about data observability tooling and how Datafold’s data diffing can help your team proactively detect bad code before it breaks production data:

- Book time with one of our solutions engineers to learn more about how Datafold enables effective data observability through comprehensive monitoring, and which of its capabilities make sense for your specific data environment and infrastructure.

- If you're ready to get started, sign up for a 14-day free trial of Datafold to explore how data diffing can improve your data quality practices within, and beyond, data teams.
